Detecting objects in images or video is no trivial task. We need a machine learning algorithm that can be trained to detect the features of the object while keeping misses and false positives low. The haartraining algorithm does just this: it creates a cascade of Haar classifiers that quickly rejects non-object regions, so that only promising regions are examined further as the object is identified.
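To make the idea of quick rejection concrete, here is a toy C++ sketch of how a cascade is evaluated. It assumes each stage can be reduced to a single score and a threshold. The Stage and Window types are hypothetical stand-ins, not OpenCV's own structures; a real Haar stage sums the votes of several weak classifiers computed over pixel data.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical stand-in for an image window: a few precomputed feature values.
struct Window {
    std::vector<double> f;
};

// One stage of the cascade: a score for the window and the threshold it must reach.
struct Stage {
    std::function<double(const Window&)> score;
    double threshold;
};

// Evaluates the stages in order and stops at the first one the window fails.
// Returns true only if the window passes every stage.
bool accept(const std::vector<Stage>& cascade, const Window& w) {
    for (const Stage& s : cascade) {
        if (s.score(w) < s.threshold) return false;  // early rejection: later stages never run
    }
    return true;
}
```

The benefit comes from ordering: cheap, permissive stages come first, so most background windows are discarded after one or two evaluations.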

This post highlights the steps required to build a haarclassifier for detecting a hand or any other object of interest. It is a sequel to my earlier post (OpenCV: Haartraining and all that jazz!) and goes into a lot more detail. To train the haarclassifier, it is suggested that you use at least 1000 positive samples (images with the object of interest, a hand in this case) and 2000 negative samples (any other images).

As before, haartraining involves the following 3 steps:

1) Create samples (createsamples.cpp)

2) Haar Training (haartraining.cpp)

3) Performance testing of the classifier (performance.cpp)

To build the above 3 utilities, please refer to my earlier post OpenCV: Haartraining and all that jazz!

The steps required to train a haarcascade to recognize a normal open palm are given below.

Create Samples: This step can be further broken down into the following 3 steps:

a) Creation of positive samples

b) Superimposing the positive sample on the negative sample

c) Merging of vector files of samples.

A) Creation of positive samples:

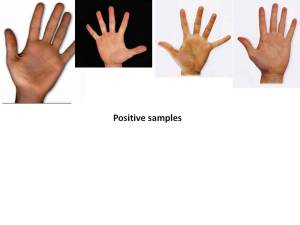

a) Get a series of images with objects of interest (positive samples): For this step, use a webcam (or camera) to take photos of the objects you are interested in. I had taken several snapshots of my hand (I later simply downloaded images from Google Images because of the excessive clutter in the snaps I had taken).

b) Crop images: In this step you need to crop the images so that they contain only the object of interest. You can use any photo editing tool of your choice; save all the positive images in a directory, e.g. ./hands

c) Mark the object: Now the object in each positive image has to be marked. This will be used for creating the samples.

The tool used for marking is objectmarker.cpp. You can download its source code from Achu Wilson’s blog. Build objectmarker.cpp with the OpenCV include directories and libraries. Once you have built objectmarker successfully, you are ready to mark the positive samples. Samples have to be marked because the description file for the positive samples must be in the following format:

[filename] [# of objects] [[x y width height] [… 2nd object] …]

The createsamples utility uses this description file to generate the positive training samples.
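Before running createsamples, it can save time to sanity-check each line of the description file. The following is a minimal sketch, assuming whitespace-separated fields and file names without spaces. parseDescriptionLine and the Rect/Description types are hypothetical helpers, not part of OpenCV.

```cpp
#include <cassert>
#include <optional>
#include <sstream>
#include <string>
#include <vector>

struct Rect { int x, y, width, height; };

struct Description {
    std::string filename;
    std::vector<Rect> objects;
};

// Parses one line of the form: [filename] [# of objects] [x y width height]...
// Returns std::nullopt if the line is malformed, if the object count does not
// match the number of rectangles, or if any width/height is not positive.
std::optional<Description> parseDescriptionLine(const std::string& line) {
    std::istringstream in(line);
    Description d;
    int count = 0;
    if (!(in >> d.filename >> count) || count < 0) return std::nullopt;
    for (int i = 0; i < count; ++i) {
        Rect r{};
        if (!(in >> r.x >> r.y >> r.width >> r.height)) return std::nullopt;  // too few numbers
        if (r.width <= 0 || r.height <= 0) return std::nullopt;               // e.g. -ve widths
        d.objects.push_back(r);
    }
    std::string extra;
    if (in >> extra) return std::nullopt;  // leftover tokens: declared count is too small
    return d;
}
```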

The command to use to run objectmarker is as follows

$ objectmarker <output file> <dir>

for e.g.

$ objectmarker pos_desc.txt ./images

where dir is the directory containing the positive images and output file will contain the positions of the marked objects, as follows:

pos_desc.txt

/images/hand1.bmp 1 0 0 246 50

/images/hand2.bmp 1 187 26 333 400

….

Using objectmarker is something of an art 😉 and it takes practice to get proper data. Make sure you get sensible widths and heights; at times you get negative widths and heights, which are clearly wrong.
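One plausible source of negative values is dragging the selection from bottom-right to top-left, so that the width and height come out as end minus start. This is an assumption; I have not traced it in objectmarker.cpp. If that is what happened, the rectangle can be normalized instead of re-marked. Box and normalize are hypothetical names.

```cpp
#include <cassert>

struct Box { int x, y, width, height; };

// Flips a box whose width/height came out negative (e.g. dragged right-to-left
// or bottom-to-top) so that (x, y) is the top-left corner and both sizes are >= 0.
Box normalize(Box b) {
    if (b.width < 0)  { b.x += b.width;  b.width = -b.width; }
    if (b.height < 0) { b.y += b.height; b.height = -b.height; }
    return b;
}
```

For example, a box recorded as 200 150 -120 -100 becomes 80 50 120 100.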

An easier alternative is to open the jpg/bmp in a photo editor, check its width and height in pixels (say 188 x 200), and create the description file entry as

/images/hand1.bmp 1 0 0 188 200

Ideally, objectmarker should give you values close to these if the sample image contains only the object of interest (the hand).
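If you take the photo-editor route, the description file can be generated rather than typed by hand. This sketch assumes you have already collected each cropped image's path and pixel size (for example from your photo editor). CroppedImage and wholeImageDescriptions are hypothetical names.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// A cropped positive image and its size in pixels.
struct CroppedImage {
    std::string path;
    int width, height;
};

// Builds the description file text with one object per image covering the
// whole image: "<path> 1 0 0 <width> <height>".
std::string wholeImageDescriptions(const std::vector<CroppedImage>& images) {
    std::ostringstream out;
    for (const CroppedImage& img : images)
        out << img.path << " 1 0 0 " << img.width << ' ' << img.height << '\n';
    return out.str();
}
```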

Create positive training samples

The createsamples utility can now be used for creating positive training sample files. The command is

createsamples -info pos_desc.txt -vec pos.vec -w 20 -h 20

This will create positive training samples with the object of interest, in our case the “hand”. Next, verify that the tool has done something sensible by checking the generated training samples. To display the positive training samples stored in pos.vec, run

createsamples -vec pos.vec -w 20 -h 20

If the objects have been marked accurately, you should see a series of hands (positive samples). If the samples have not been marked correctly, the positive training file will give incorrect results.
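A .vec file can also be checked without opening the viewer. The sketch below assumes the layout I understand createsamples to write (from reading cvsamples.cpp in the OpenCV haartraining sources). That layout is a 12-byte header holding a 32-bit sample count, a 32-bit vector size equal to w*h and two 16-bit placeholders. Each sample then follows as one zero byte plus w*h 16-bit pixel values, little-endian. Treat this layout as an assumption and verify it against your OpenCV version. VecHeader, readVecHeader, vecMatches and readFile are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fstream>
#include <iterator>
#include <optional>
#include <string>
#include <vector>

struct VecHeader {
    int32_t count;    // number of samples in the file
    int32_t vecsize;  // pixels per sample, expected to be w * h
};

constexpr std::size_t kVecHeaderBytes = 12;

// Reads a little-endian 32-bit integer starting at byte offset off.
static int32_t readLE32(const std::vector<uint8_t>& b, std::size_t off) {
    return static_cast<int32_t>(uint32_t(b[off]) | uint32_t(b[off + 1]) << 8 |
                                uint32_t(b[off + 2]) << 16 | uint32_t(b[off + 3]) << 24);
}

std::optional<VecHeader> readVecHeader(const std::vector<uint8_t>& bytes) {
    if (bytes.size() < kVecHeaderBytes) return std::nullopt;
    return VecHeader{readLE32(bytes, 0), readLE32(bytes, 4)};
}

// True if the header matches the -w/-h you intend to use and the buffer
// length agrees with the sample count (12 + count * (1 + 2 * vecsize) bytes).
bool vecMatches(const std::vector<uint8_t>& bytes, int w, int h) {
    auto hdr = readVecHeader(bytes);
    if (!hdr || hdr->vecsize != w * h || hdr->count < 0) return false;
    std::size_t perSample = 1 + 2 * static_cast<std::size_t>(hdr->vecsize);
    return bytes.size() == kVecHeaderBytes + perSample * static_cast<std::size_t>(hdr->count);
}

// Loads a whole file (e.g. pos.vec) into memory.
std::vector<uint8_t> readFile(const std::string& path) {
    std::ifstream f(path, std::ios::binary);
    return std::vector<uint8_t>(std::istreambuf_iterator<char>(f),
                                std::istreambuf_iterator<char>());
}
```

For a 20 x 20 window each sample occupies 1 + 2 x 400 = 801 bytes. A pos.vec whose size is not 12 plus a multiple of 801 was either truncated or created with a different -w/-h.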

Create negative background training samples

This step creates training samples by superimposing one positive image on a set of negative background images. The command is:

createsamples -img ./image/hand_1.BMP -num 9 -bg bg.txt -vec neg1.vec -maxxangle 0.6 -maxyangle 0 -maxzangle 0.3 -maxidev 100 -bgcolor 0 -bgthresh 0 -w 20 -h 20

where bg.txt contains the list of negative samples in the following format:

./negative/airplane.jpg

./negative/baboon.jpg

./negative/cat.jpg

…
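bg.txt does not need to be written by hand. Here is a small sketch that builds its contents from a list of file names in the negative directory. The extension list and the function names are my assumptions; in practice the names could come from std::filesystem::directory_iterator.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// True if the name ends with a common image extension (case-insensitive).
// The extension list is an assumption; extend it for your data.
bool looksLikeImage(const std::string& name) {
    static const std::vector<std::string> exts = {".jpg", ".jpeg", ".png", ".bmp"};
    std::string lower = name;
    std::transform(lower.begin(), lower.end(), lower.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    for (const std::string& e : exts)
        if (lower.size() >= e.size() &&
            lower.compare(lower.size() - e.size(), e.size(), e) == 0)
            return true;
    return false;
}

// Builds bg.txt contents: one "<dir>/<file>" line per image, sorted so the
// output is stable between runs; non-image files are skipped.
std::string makeBgList(const std::string& dir, std::vector<std::string> files) {
    std::sort(files.begin(), files.end());
    std::string out;
    for (const std::string& f : files)
        if (looksLikeImage(f)) out += dir + "/" + f + "\n";
    return out;
}
```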

The positive hand image will be superimposed on the negative backgrounds at various angles of distortion.

All the negative training samples will be collected in the training file neg1.vec specified above. As before, you can verify that the createsamples utility has done something reasonable by executing

createsamples -vec neg1.vec -w 20 -h 20

This should show a series of images of hands in various angles of distortion against the negative image background.
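The angle flags are given in radians (the OpenCV createsamples documentation says so for -maxxangle, -maxyangle and -maxzangle). So -maxxangle 0.6 allows rotations of up to about 34 degrees about the x-axis, and -maxzangle 0.3 up to about 17 degrees in the image plane. A trivial conversion helper with hypothetical names makes it easier to choose values in degrees:

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// createsamples takes its maximum rotation angles in radians.
double radToDeg(double rad) { return rad * 180.0 / kPi; }
double degToRad(double deg) { return deg * kPi / 180.0; }
```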

Creating several negative training samples with all positive samples

The createsamples utility takes one positive sample and superimposes it on the negative samples listed in bg.txt. However, we need to repeat this process for each positive sample (hand) that we have. Since this can be a laborious process, you can use the following shell script I wrote, create_neg_training.sh, which repeats the step for every positive sample:

create_neg_training.sh

```bash
#!/bin/bash
# Create one vec file of 9 distorted samples per positive image listed in hands.txt
j=1
for i in $(cat hands.txt); do
  createsamples -img "./image/$i" -num 9 -bg bg.txt -vec "neg_training$j.vec" \
    -maxxangle 0.6 -maxyangle 0 -maxzangle 0.3 -maxidev 100 \
    -bgcolor 0 -bgthresh 0 -w 20 -h 20
  j=$((j+1))
done
```

where the positive samples are in the ./image directory and hands.txt lists their file names. For each positive hand sample, a negative training sample file is created, namely neg_training1.vec, neg_training2.vec, etc.
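If you would rather not depend on bash (on Windows, for instance), the same loop can be sketched in C++. This is a hypothetical equivalent of create_neg_training.sh, not code from this post. It builds the same createsamples command lines and runs them with std::system.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <vector>

// Builds one createsamples command per positive image, writing
// neg_training<j>.vec with j counting from 1, exactly as the shell script does.
std::vector<std::string> buildCreateSamplesCommands(const std::vector<std::string>& positives) {
    std::vector<std::string> cmds;
    int j = 1;
    for (const std::string& img : positives) {
        cmds.push_back("createsamples -img \"./image/" + img + "\" -num 9 -bg bg.txt"
                       " -vec \"neg_training" + std::to_string(j) + ".vec\""
                       " -maxxangle 0.6 -maxyangle 0 -maxzangle 0.3 -maxidev 100"
                       " -bgcolor 0 -bgthresh 0 -w 20 -h 20");
        ++j;
    }
    return cmds;
}

// Runs the commands in order, stopping at the first failure.
// Returns how many commands succeeded.
int runAll(const std::vector<std::string>& cmds) {
    int ok = 0;
    for (const std::string& c : cmds) {
        if (std::system(c.c_str()) != 0) break;
        ++ok;
    }
    return ok;
}
```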

Merging samples:

As seen above, the createsamples utility superimposes only one positive sample (hand) on a series of negative samples. Since we want to repeat this for multiple positive samples, we need a way to merge all the training sample files. A useful utility, mergevec.cpp, is available for download from Naotoshi Seo’s blog.

Download mergevec.cpp and build it along with cvboost.cpp, cvhaarclassifier.cpp, cvhaartraining.cpp, cvsamples.cpp and cvcommon.cpp, using the usual include files and link libraries.

Once built, it can be run as follows:

mergevec pos_neg.txt pos_neg.vec -w 20 -h 20

where pos_neg.txt lists both the positive and negative training sample files, as follows:

pos_neg.txt

./vec/pos.vec

./vec/neg_training1.vec

./vec/neg_training2.vec

….

As before, you can verify that the entire training file pos_neg.vec is sensible by executing

createsamples -vec pos_neg.vec -w 20 -h 20

Now the pos_neg.vec will contain all the training samples that are required for the haartraining process.
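To see what merging involves, here is an in-memory sketch of concatenating .vec files. It assumes the layout createsamples writes in cvsamples.cpp: a 12-byte header (32-bit count, 32-bit vecsize = w*h, two 16-bit zeros), then per sample one zero byte and vecsize 16-bit values, little-endian. It is not mergevec.cpp. It only illustrates why every input must share the same -w and -h: the samples are appended as-is and the counts summed. Bytes and mergeVec are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

using Bytes = std::vector<uint8_t>;

static int32_t readLE32(const Bytes& b, std::size_t off) {
    return static_cast<int32_t>(uint32_t(b[off]) | uint32_t(b[off + 1]) << 8 |
                                uint32_t(b[off + 2]) << 16 | uint32_t(b[off + 3]) << 24);
}

static void writeLE32(Bytes& b, std::size_t off, int32_t v) {
    uint32_t u = static_cast<uint32_t>(v);
    for (int i = 0; i < 4; ++i) b[off + i] = static_cast<uint8_t>(u >> (8 * i));
}

// Concatenates the samples of all inputs behind one new header. Fails if an
// input is truncated or its vecsize differs (i.e. it used a different -w/-h).
std::optional<Bytes> mergeVec(const std::vector<Bytes>& inputs) {
    if (inputs.empty()) return std::nullopt;
    Bytes out(12, 0);  // bytes 8..11 stay zero, matching the placeholder shorts
    int32_t total = 0, vecsize = -1;
    for (const Bytes& in : inputs) {
        if (in.size() < 12) return std::nullopt;
        int32_t count = readLE32(in, 0), vs = readLE32(in, 4);
        if (count < 0 || vs <= 0 || (vecsize != -1 && vs != vecsize)) return std::nullopt;
        vecsize = vs;
        std::size_t body = static_cast<std::size_t>(count) * (1 + 2 * static_cast<std::size_t>(vs));
        if (in.size() != 12 + body) return std::nullopt;
        out.insert(out.end(), in.begin() + 12, in.end());
        total += count;
    }
    writeLE32(out, 0, total);
    writeLE32(out, 4, vecsize);
    return out;
}
```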

HaarTraining

The haartraining can be run with the training samples generated from the mergevec utility described above.

The command is

./haartrainer -data haarcascade -vec pos_neg.vec -bg bg.txt -nstages 20 -nsplits 2 -minhitrate 0.999 -maxfalsealarm 0.5 -npos 7 -nneg 9 -w 20 -h 20 -nonsym -mem 512 -mode ALL

Several posts have suggested that nstages should ideally be 20 and nsplits should be 2.
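One way to read these numbers: a window is accepted only if it passes every stage, so the per-stage targets roughly compound. If each stage just meets -minhitrate and -maxfalsealarm, 20 stages give an overall hit rate of about 0.999^20 ≈ 0.980 and a false-alarm rate of about 0.5^20 ≈ 9.5 x 10^-7. With 14 stages the false-alarm bound is only 0.5^14 ≈ 6.1 x 10^-5. This is a back-of-the-envelope sketch that treats the stages as independent; it is not how haartraining reports its statistics.

```cpp
#include <cassert>
#include <cmath>

// Rough overall rates of a cascade whose stages each just meet the targets:
// the per-stage rates multiply because a window must pass every stage.
double overallHitRate(double minHitRate, int nstages) {
    return std::pow(minHitRate, nstages);
}

double overallFalseAlarm(double maxFalseAlarm, int nstages) {
    return std::pow(maxFalseAlarm, nstages);
}
```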

-npos indicates the number of positive samples and -nneg the number of negative samples. In my case I used just 7 positive and 9 negative samples. This step is extremely CPU-intensive and can take several hours or even days to complete. I had reduced the number of stages to 14. The haartraining utility will create a haarcascade directory and a haarcascade.xml file.

Performance testing: The first way to test the integrity of the haarcascade is to run the performance utility described in my earlier post OpenCV: Haartraining and all that jazz! If you want to use the performance utility, you should also create test samples with the command

createsamples -img ./image/hand-1.bmp -num 10 -bg bg.txt -info test.dat -maxxangle 0.6 -maxyangle 0 -maxzangle 0.3 -maxidev 100 -bgcolor 0 -bgthresh 0

The test.dat can be used with the performance utility as follows

./performance -data haarcascade.xml -info test.dat -w 20 -h 20

I wanted something more visually satisfying than the output of the performance utility, which just spews out textual information about hits and misses.
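If you want a similar tally of hits, misses and false alarms for your own detections (for example rectangles reported by handdetect against hand-marked ground truth), it can be computed directly. performance.cpp has its own matching rule. This sketch swaps in the common intersection-over-union criterion (IoU ≥ 0.5) with greedy matching, so its numbers will not match the utility's exactly. Box, iou, Tally and evaluate are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Box { int x, y, w, h; };

// Intersection-over-union of two boxes (0 when they do not overlap).
double iou(const Box& a, const Box& b) {
    int ix = std::max(0, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    int iy = std::max(0, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    double inter = static_cast<double>(ix) * iy;
    double uni = static_cast<double>(a.w) * a.h + static_cast<double>(b.w) * b.h - inter;
    return uni > 0 ? inter / uni : 0.0;
}

struct Tally { int hits = 0, misses = 0, falseAlarms = 0; };

// Each ground-truth box is a hit if an unused detection overlaps it with
// IoU >= threshold (the best such detection is consumed); otherwise a miss.
// Detections left unused at the end count as false alarms.
Tally evaluate(const std::vector<Box>& truth, const std::vector<Box>& detections,
               double threshold = 0.5) {
    Tally t;
    std::vector<bool> used(detections.size(), false);
    for (const Box& g : truth) {
        int best = -1;
        double bestIou = threshold;
        for (std::size_t i = 0; i < detections.size(); ++i) {
            double v = iou(g, detections[i]);
            if (!used[i] && v >= bestIou) { best = static_cast<int>(i); bestIou = v; }
        }
        if (best >= 0) { used[best] = true; ++t.hits; } else { ++t.misses; }
    }
    for (bool u : used) if (!u) ++t.falseAlarms;
    return t;
}
```

Shifted detections like the ones described below show up here as a lower IoU, and as misses plus false alarms once the overlap falls under the threshold.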

So I decided to use facedetect.c with the haarcascade trained on my positive and negative samples to check whether it was working. The test below describes using facedetect.c to detect the hand.

The code for facedetect.c can be downloaded from the Willow Garage wiki.

Compile and link facedetect.c as handdetect with the usual suspects. Once it builds successfully, you can run

handdetect --cascade=haarcascade.xml hands.jpg

where hands.jpg was my test image. If handdetect does indeed detect a hand in the image, it will enclose the detected object with a rectangle. See the output below.

While my haarcascade does detect 5 hands, the detections appear shifted. This could be partly because I used only 7 positive and 9 negative training samples, which is very low. I also used only 14 stages in the haartraining. As mentioned at the beginning, at least 1000 positive and 2000 negative samples should be used, and haartraining should have 20 stages and 2 nsplits. Hopefully, if you follow this, you will end up with a fairly accurate haarcascade.

If you are adventurous enough, you could run the above with the webcam as

handdetect --cascade=haarcascade.xml 0

where 0 indicates the webcam. Assuming that your haarcascade is perfect, you should be able to track your hand in real time.

Haarpy training!

See also

– OpenCV: Haartraining and all that jazz!

– De-blurring revisited with Wiener filter using OpenCV

– Dabbling with Wiener filter using OpenCV

– Deblurring with OpenCV: Wiener filter reloaded

– Re-working the Lucy-Richardson Algorithm in OpenCV


Hello there

I have modified your sh script as follows

```bash
#!/bin/bash
let j=1
for i in `text2.txt`
do
opencv_createsamples -img /rawdata2/$i -num 9 -bg infofile2.txt -vec "neg_training$j.vec" -maxxangle 0.6 -maxyangle 0 -maxzangle 0.3 -maxidev 100 -bgcolor 0 -bgthresh 0 -w 20 -h 20
let j=j+1
done
```

I’m using Cygwin, and every time I run the script I get the following error:

syntax error: invalid arithmetic operator (error token is ”

;/create_neg_training.sh: line 4: syntax error near unexpected token ‘$’do\r”

/create_neg_training.sh: line 4: ‘do

Thanks in advance.


JC, I think the issue is that your script has DOS line terminators (‘\r’). One suggestion I saw while googling was to open the script in vim, run :set ff=unix (the fileformat option can be dos, unix or mac) and save it. I think you can also use the dos2unix utility to convert your shell script to Unix format before executing it. Good luck!
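For reference, the conversion that dos2unix performs is small enough to sketch. This hypothetical helper drops every carriage return that immediately precedes a newline, which turns a line such as let j=1\r back into let j=1 for bash:

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Converts DOS line endings (\r\n) to Unix ones (\n), similar in spirit to
// dos2unix. A lone \r that is not followed by \n is left untouched.
std::string toUnixLineEndings(const std::string& text) {
    std::string out;
    out.reserve(text.size());
    for (std::size_t i = 0; i < text.size(); ++i) {
        if (text[i] == '\r' && i + 1 < text.size() && text[i + 1] == '\n') continue;
        out += text[i];
    }
    return out;
}
```

To fix a script, read it in binary mode, pass its contents through the function and write the result back.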


Thanks for the response. I created my own version in C++ and generated the vec files.

I’m about to run the haartraining utility now, but I’m confused about one thing. Before, I would run haartraining with positive samples in a vec file and negative .bmp samples. I was using 300 positive .bmp pictures to generate pos.vec and 700 negative .bmp pictures specified by -bg infofile.txt. Since my results were too bad, I realized I needed the pos_neg.vec as you explained above.

I created multiple neg_trainx.vec files and merged them with my pos.vec file. For all of this, I used 300 positive images (.bmp) and 700 negatives (.bmp). In my loop in create_samples.cpp, the -num parameter is 9 for each image. So I generate 9 samples for each of the 300 positives and end up with 300 neg_trainx.vec files.

I’m confused about how to run haartraining. Should I specify -npos 300 or -npos 300*9?

Thanks for your help


JC, you would use -npos 300 and -nneg 700 for the haartrainer


Hello Ganesh,

Thanks for the quick reply.

I again ran the haartrainer but the results are nowhere close to the ones you obtained with just 7 and 9 samples.

More questions…

I’m using objectmarker.exe to mark the object of interest in the .bmp files that I have. These files are not cropped. Do I need to crop them as well? The reference I have followed doesn’t quite specify it:

OpenCV_ObjectDetection_HowTo.pdf

And lastly, what is the best way you would recommend to keep the same aspect ratio when marking the objects of interest? I’m starting to believe this might be the source of my bad results.

Thanks once again


JC, yes, you must crop your images with any tool of your choice. Secondly, as I have mentioned, ensure that you are getting reasonable results with objectmarker (they should be close to the image’s actual width and height). An alternative I have suggested is to just put the width and height of your image (containing only the object of interest) on each line of the positive samples text file. You can see the width and height in any photo editor.
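On the aspect-ratio question, one possible approach is to grow each marked rectangle around its centre until its width:height matches the -w:-h ratio given to createsamples (20:20 in this post), then clamp it to the image. This is my assumption, not something objectmarker does. The grown box will include a little background. Rect and fitAspect are hypothetical names, and the sketch needs C++17 for std::clamp.

```cpp
#include <algorithm>
#include <cassert>

struct Rect { int x, y, w, h; };

// Expands r about its centre so that w/h matches targetW/targetH (never
// shrinking the marked object), then shifts it to lie inside an imgW x imgH
// image. If the grown box exceeds the image it is cut down, which can break
// the ratio slightly.
Rect fitAspect(Rect r, int targetW, int targetH, int imgW, int imgH) {
    if (static_cast<long long>(r.w) * targetH < static_cast<long long>(r.h) * targetW) {
        // Too narrow: widen to ceil(h * targetW / targetH).
        int newW = static_cast<int>((static_cast<long long>(r.h) * targetW + targetH - 1) / targetH);
        r.x -= (newW - r.w) / 2;
        r.w = newW;
    } else {
        // Too short (or already right): heighten to ceil(w * targetH / targetW).
        int newH = static_cast<int>((static_cast<long long>(r.w) * targetH + targetW - 1) / targetW);
        r.y -= (newH - r.h) / 2;
        r.h = newH;
    }
    r.w = std::min(r.w, imgW);
    r.h = std::min(r.h, imgH);
    r.x = std::clamp(r.x, 0, imgW - r.w);
    r.y = std::clamp(r.y, 0, imgH - r.h);
    return r;
}
```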


Hey Ganesh, thanks for your help first and foremost.

I cropped all of the images and re-marked them with objectmarker. The results with 300 and 700 were better, but not by much. I decided to go full scale and ran haartraining with 550 positives and 1100 negatives. Right now, it’s been loading the negatives for an entire day on stage 16. No percentage of loading completion is being shown.

I just want to make sure this is normal. Also, what numbers do you recommend for positive/negative samples? Do you go by a 1:2 ratio?

Thanks again


JC, yes, a 1:2 ratio is supposed to be good. I think with 550:1100 samples, haartraining should take at least 2 days. Good luck!


Hello, nice article.

I have two questions:

1. What do you mean by negative pictures?

2. The objectmarker code draws a rectangle around the object, so why do we need to crop the object from the image beforehand?


Thanks!

1. Negative samples are those that include the object of interest (e.g. hand)

2. It is safer to crop though objectmarker marks the objects.


Hello, thanks for the reply.

I have a problem with objectmarker.cpp, the code used in

http://achuwilson.wordpress.com/2011/07/01/create-your-own-haar-classifier-for-detecting-objects-in-opencv/#more-31

The “B” key is not working, and so the data is not saved in the output file. I tried to find the problem but I couldn’t. Can you help me?


It is Space-B. You have to press them together, if I remember right. Give it a try; it should work.


I tried it, and it doesn’t work. This is how the code is supposed to be run:

While running this code, each image in the given directory will open up. Mark the edges of the object using the mouse buttons, then press “SPACE” to save the selected region, or any other key to discard it. Then use “B” to move to the next image. The program automatically quits at the end. Press ESC at any time to quit.


Hello Ganesh,

Is there a preferred ratio between the sizes of the positive and negative images?

I mean, if the cropped positive images and the negative images have the same size, will this cause unwanted results at the sampling stage?

BTW, the key problem was about the ASCII value :$ and it is solved.


@ComputerEngG : I don’t think there is a size ratio that needs to be considered as long as you get the sizes right while cropping. Good to know you figured the objectmarker issue!
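The comment above does not say exactly what the ASCII fix was. A commonly reported cause, assumed here, is that cvWaitKey/cv::waitKey returns the key code with extra modifier bits set on some platforms (for example 0x100062 instead of 0x62 for ‘b’ when NumLock is on under GTK), so a plain comparison with ‘b’ never matches. Masking to the low byte before comparing is a typical fix. The sketch below maps a raw key code to the actions listed in the instructions above; MarkerAction and classifyKey are hypothetical names, not objectmarker.cpp’s code.

```cpp
#include <cassert>

enum class MarkerAction { SaveRegion, NextImage, Quit, Discard, None };

// Maps a raw key code (as returned by a waitKey-style call, -1 for no key)
// to an objectmarker-style action after masking off any modifier bits.
MarkerAction classifyKey(int raw) {
    if (raw < 0) return MarkerAction::None;  // no key pressed before the timeout
    int key = raw & 0xFF;                    // keep only the ASCII byte
    switch (key) {
        case ' ':           return MarkerAction::SaveRegion;
        case 'b': case 'B': return MarkerAction::NextImage;
        case 27:            return MarkerAction::Quit;  // ESC
        default:            return MarkerAction::Discard;
    }
}
```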


thanks a lot


Hello sir,

I’m a student studying image processing and computer vision. I’m trying to develop a gesture recognition application with OpenCV. Can you give me some pointers? I’m finding it hard to understand certain notions of OpenCV.


Suganya, You could buy the book “Learning OpenCV”. It is a great book and has good examples. Do let me know if you want any other inputs.


Sir,

I am a computer engineering student working on gesture recognition. I have 369 positive images that I took, and 400 more that I got from the net. Would you share your positive image database?

Thanks so much


Daniel, you could use one of the Carnegie Mellon databases for your purpose. See http://www.cs.cmu.edu/~cil/v-images.html


Hello Ganesh, how are you?

I’m working on the haartraining step, but I get this error:

” OpenCV Error: Bad flag (parameter or structure field) () in cvReleaseMat, file cxcore/cxarray.cpp, line 190

terminate called after throwing an instance of ‘cv::Exception’

Aborted ”

I tried to solve the problem as the following link explains, but unfortunately it is still unsolved!

http://comments.gmane.org/gmane.comp.lib.opencv/35952

Do you have any idea?


Hi, sharing the result would be a perfect step for beginners.

Can you please share the results of your work?


Samet- Thanks for the comments. Will definitely post proper results. Kind of tied up now.


Thanks for reply. Can you post your haar trained xml file?


This is the best, sir!


How good is your cascade file. Could you post it?


Chris – My intention was to determine the complete process. The cascade file that I got was based on a few test samples only. – Ganesh


In previous comments you answered “Negative samples are those that include the object of interest (e.g. hand)”. Really?

Actually, negatives are photographs that don’t contain the object of interest, according to http://opencv.itseez.com/trunk/doc/user_guide/ug_traincascade.html

Am I right?


Zminy- You are correct. It is a typo. I actually meant positive samples are those that include the object of interest. Thanks!


Hi Ganesh,

Can you please share the haarcascade.xml file? I need to create something on the web that will recognize a palm using a webcam.

It would be really nice of you if you could share the xml file.

I would make sure that I give the due recognition and mention your name in the credits.

(BTW it would be open source and free)

Thanks

—

Neeraj Kumar

CSE

IITBHU


Neeraj – I did not do a rigorous training. My intent in this post was more to identify the steps involved. I am planning to do a full-fledged training soon.

Regards

Ganesh


Hi Ganesh,

That’s great. But until then, can you post the file you made with a smaller set of positive and negative specimens?

Thanks

—

Neeraj


@all, I found a cascade.xml training file for hands from the CCV Hand project (ccv-hand.googlecode.com). Check it out here: http://ccv-hand.googlecode.com/svn-history/trunk/Final/ . I’ve tested it with sample code from the OpenCV 2.4.2 release; unfortunately, it doesn’t detect hands. It’s all here: http://ccv-hand.googlecode.com/svn-history/trunk/Final/ (pretty heavy stuff).


Hi Ganesh, thanks for sharing a good article.

After detecting the hand, how can we recognize the gesture?


Sir, can I do the same thing using Python 2.7?
