Here is the 1st part of my video presentation on “Machine Learning, Data Science, NLP and Big Data – Part 2”


Revisiting crimes against women in India

Here I go again, raking the muck about crimes against women in India. My earlier post “A crime map of India in R: Crimes against women in India” garnered a lot of responses from readers. In fact one of the readers even volunteered to create the only choropleth map in that post. The data for this post is taken from http://data.gov.in. You can download the data from the link “Crimes against women in India”

I was so impressed by the choropleth map that I decided to do the same for all crimes against women. (Wikipedia definition: A choropleth map is a thematic map in which areas are shaded or patterned in proportion to the measurement of the statistical variable being displayed on the map.) Personally, I think pictures tell the story better. I am sure you will agree!

So here it is: a Shiny app that plots a choropleth map for a chosen crime in a given year.

You can try out my interactive Shiny app at Crimes against women in India

Check out my book on Amazon, available in both paperback ($9.99) and Kindle ($6.99/Rs 449) versions (see ‘Practical Machine Learning with R and Python – Machine Learning in stereo’).


In the picture below are the details of ‘Rape’ in the year 2015.

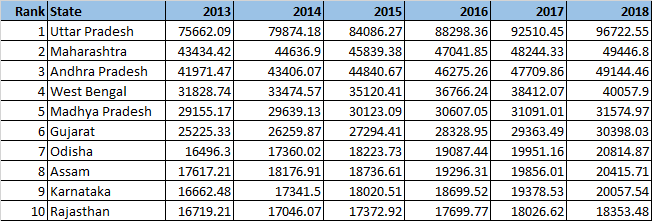

Interestingly the ‘Total Crime against women’ in 2001 shows the Top 5 as

1) Uttar Pradesh 2) Andhra Pradesh 3) Madhya Pradesh 4) Maharashtra 5) Rajasthan

But in 2015 West Bengal tops the list as the real heavyweight in crimes against women. The new pecking order in 2015 for ‘Total Crimes against Women’ is

1) West Bengal 2) Andhra Pradesh 3) Uttar Pradesh 4) Rajasthan 5) Maharashtra

Similarly for rapes, West Bengal is nowhere in the top 5 list in 2001. In 2015, it is second only to the national rape leader Madhya Pradesh. Also in 2001 West Bengal is not in the top 5 for any of 6 crime heads. But in 2015, West Bengal is in the top 5 of 6 crime heads. The emergence of West Bengal as the leader in Crimes against Women is due to the steep increase in crime rate over the years. Clearly the law and order situation in West Bengal is heading south.

In Dowry Deaths, UP, Bihar, MP, West Bengal lead the pack, and in that order in 2015.

The usual suspects for most crime categories are West Bengal, UP, MP, AP & Maharashtra.

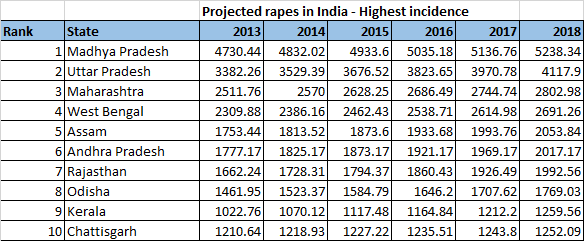

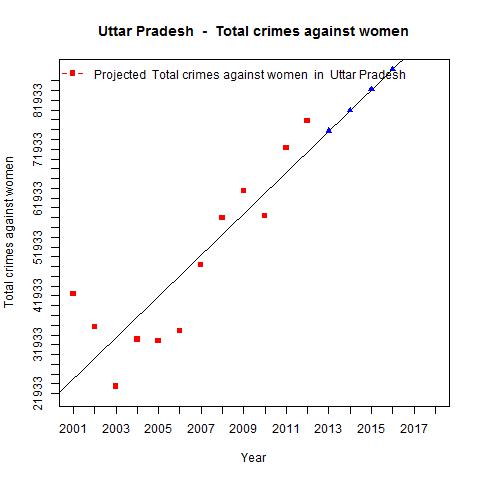

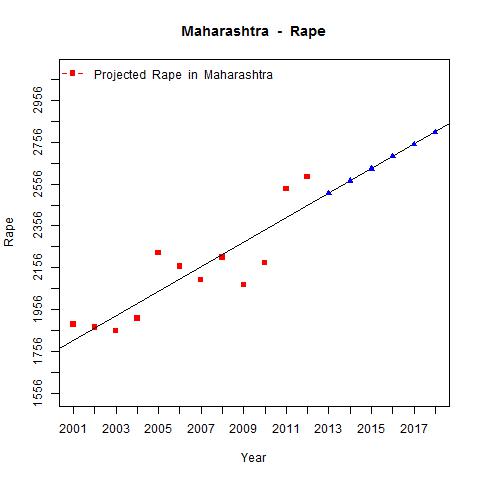

The state-wise crime charts plot the incidence of each crime (rape, dowry death, assault on women, etc.) over the years. Data for each state and for each crime was available for 2001-2013. The data for the period 2014-2018 are projected using linear regression. The shaded portion in the plots indicates the 95% confidence interval of the prediction (in other words, we can be 95% confident that the true mean of the crime rate in the projected years lies within the shaded region).
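To make the projection step concrete, here is a minimal sketch of a straight-line fit with a 95% confidence interval in base R. The yearly counts are synthetic and the names `crime`, `fit` and `projection` are my own for illustration; they are not taken from crimes.csv or from the Shiny app's code.

```r
# Synthetic yearly counts for one hypothetical state (NOT real data)
set.seed(42)
years  <- 2001:2013
counts <- 1000 + 60 * (years - 2001) + rnorm(length(years), sd = 40)
crime  <- data.frame(year = years, count = counts)

# Fit a straight line: count as a linear function of year
fit <- lm(count ~ year, data = crime)

# Project 2014-2018 with a 95% confidence interval for the mean response
future <- data.frame(year = 2014:2018)
proj   <- predict(fit, newdata = future, interval = "confidence", level = 0.95)

# Columns: year, fit (projected value), lwr and upr (interval bounds)
projection <- cbind(future, proj)
print(projection)
```

The `lwr` and `upr` columns are the edges of the band that would be shaded in a plot. Note that `interval = "confidence"` bounds the mean of the response; `interval = "prediction"` would give a wider band for individual future observations.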

Several interesting requests came from readers of my earlier post. Some of them were to plot the crimes as a function of the population and per capita income of the State/Union Territory to see if the plots throw up new crime leaders. I have not got the relevant state-wise population distribution data yet. I intend to update this when I get my hands on this data.

I have included crimes.csv, which was used to generate the visualizations. For the Shiny app, however, I save this as .RData for better performance.
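The CSV-to-.RData step can be sketched as follows. This is only an illustration under assumptions: the tiny data frame stands in for crimes.csv, and the file paths and column names are hypothetical rather than the ones used in the app.

```r
# Hypothetical stand-in for crimes.csv, written to a temporary directory
csv_path   <- file.path(tempdir(), "crimes.csv")
rdata_path <- file.path(tempdir(), "crimes.RData")
write.csv(data.frame(state = c("A", "B"), year = c(2001, 2001), count = c(10, 20)),
          csv_path, row.names = FALSE)

# One-off conversion: parse the CSV once and save the data frame in binary form
crimes <- read.csv(csv_path, stringsAsFactors = FALSE)
save(crimes, file = rdata_path)

# At app start-up: restore the object directly, skipping the CSV parsing
rm(crimes)
load(rdata_path)   # recreates an object named `crimes`
str(crimes)
```

Loading a saved object avoids re-parsing text on every start of the app, which is the likely source of the performance gain mentioned above.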

You can clone/download the code for the Shiny app from GitHub at crimesAgainWomenIndia

Please check out my Shiny app: Crimes against women

I also intend to add further interactivity to my visualizations in a future version. Watch this space. I’ll be back!

You may like

1. My book ‘Practical Machine Learning with R and Python’ on Amazon

2. Natural Language Processing: What would Shakespeare say?

3. Introducing cricketr! : An R package to analyze performances of cricketers

4. A peek into literacy in India: Statistical Learning with R

5. Informed choices through Machine Learning : Analyzing Kohli, Tendulkar and Dravid

6. Re-working the Lucy-Richardson Algorithm in OpenCV

7. What’s up Watson? Using IBM Watson’s QAAPI with Bluemix, NodeExpress – Part 1

8. Bend it like Bluemix, MongoDB with autoscaling – Part 2

9. TWS-4: Gossip protocol: Epidemics and rumors to the rescue

10. Thinking Web Scale (TWS-3): Map-Reduce – Bring compute to data

11. Simulating an Edge Shape in Android

cricketr plays the ODIs!

Published in R bloggers: cricketr plays the ODIs

Introduction

In this post my package ‘cricketr’ takes a swing at One Day Internationals (ODIs). Like Test batsmen who adapt to ODIs with some innovative strokes, the cricketr package has some additional functions and some modified functions to handle the high strike and economy rates in ODIs. As before I have chosen my top 4 ODI batsmen and top 4 ODI bowlers.

If you are passionate about cricket, and love analyzing cricket performances, then check out my racy book on cricket ‘Cricket analytics with cricketr and cricpy – Analytics harmony with R & Python’! This book discusses and shows how to use my R package ‘cricketr’ and my Python package ‘cricpy’ to analyze batsmen and bowlers in all formats of the game (Test, ODI and T20). The paperback is available on Amazon at $21.99 and the kindle version at $9.99/Rs 449/-. A must read for any cricket lover! Check it out!!

You can download the latest PDF version of the book at ‘Cricket analytics with cricketr and cricpy: Analytics harmony with R and Python-6th edition‘

Important note 1: The latest release of ‘cricketr’ now includes the ability to analyze performances of teams!! See Cricketr adds team analytics to its repertoire!!!

Important note 2 : Cricketr can now do a more fine-grained analysis of players, see Cricketr learns new tricks : Performs fine-grained analysis of players

Important note 3: Do check out the Python avatar of cricketr, ‘cricpy’, in my post ‘Introducing cricpy:A python package to analyze performances of cricketers’

Do check out my interactive Shiny app implementation using the cricketr package – Sixer – R package cricketr’s new Shiny avatar

You can also read this post at Rpubs as odi-cricketr. Download this report as a PDF file from odi-cricketr.pdf

Important note: Do check out my other posts using cricketr at cricketr-posts

Note: If you would like to do a similar analysis for a different set of batsmen and bowlers, you can clone/download my skeleton cricketr template from Github (which is the R Markdown file I have used for the analysis below). You will only need to make appropriate changes for the players you are interested in. Only a familiarity with R and R Markdown is needed.

Batsmen

- Virender Sehwag (Ind)

- AB Devilliers (SA)

- Chris Gayle (WI)

- Glenn Maxwell (Aus)

Bowlers

- Mitchell Johnson (Aus)

- Lasith Malinga (SL)

- Dale Steyn (SA)

- Tim Southee (NZ)

I have sprinkled the plots with a few of my comments. Feel free to draw your conclusions! The analysis is included below

The profile for Virender Sehwag is 35263. This can be used to get the ODI data for Sehwag. For a batsman the type should be “batting” and for a bowler the type should be “bowling” and the function is getPlayerDataOD()

The package can be installed directly from CRAN

if (!require("cricketr")){

install.packages("cricketr",lib = "c:/test")

}

library(cricketr)

or from Github

library(devtools)

install_github("tvganesh/cricketr")

library(cricketr)

The One Day data for a particular player can be obtained with the getPlayerDataOD() function. To do this, go to ESPN CricInfo Player and type in the name of the player, e.g. Virender Sehwag. This will bring up a page which has the profile number for the player; for Virender Sehwag this would be http://www.espncricinfo.com/india/content/player/35263.html. Hence, Sehwag’s profile is 35263. This can be used to get the data for Virender Sehwag as shown below

sehwag <- getPlayerDataOD(35263,dir=".",file="sehwag.csv",type="batting")

Analyses of Batsmen

The following plots give the analysis of the 4 ODI batsmen

- Virender Sehwag (Ind) – Innings – 245, Runs = 8586, Average=35.05, Strike Rate= 104.33

- AB Devilliers (SA) – Innings – 179, Runs= 7941, Average=53.65, Strike Rate= 99.12

- Chris Gayle (WI) – Innings – 264, Runs= 9221, Average=37.65, Strike Rate= 85.11

- Glenn Maxwell (Aus) – Innings – 45, Runs= 1367, Average=35.02, Strike Rate= 126.69

Plot of 4s, 6s and the scoring rate in ODIs

The 3 charts below give the number of

- 4s vs Runs scored

- 6s vs Runs scored

- Balls faced vs Runs scored

A regression line is fitted in each of these plots for each of the ODI batsmen.

A. Virender Sehwag

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./sehwag.csv","Sehwag")

batsman6s("./sehwag.csv","Sehwag")

batsmanScoringRateODTT("./sehwag.csv","Sehwag")

dev.off()

## null device

## 1

B. AB Devilliers

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./devilliers.csv","Devillier")

batsman6s("./devilliers.csv","Devillier")

batsmanScoringRateODTT("./devilliers.csv","Devillier")

dev.off()

## null device

## 1

C. Chris Gayle

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./gayle.csv","Gayle")

batsman6s("./gayle.csv","Gayle")

batsmanScoringRateODTT("./gayle.csv","Gayle")

dev.off()

## null device

## 1

D. Glenn Maxwell

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./maxwell.csv","Maxwell")

batsman6s("./maxwell.csv","Maxwell")

batsmanScoringRateODTT("./maxwell.csv","Maxwell")

dev.off()

## null device

## 1

Relative Mean Strike Rate

In this first plot I plot the Mean Strike Rate of the batsmen. It can be seen that Maxwell has an awesome strike rate in ODIs. However, we need to keep in mind that Maxwell has far fewer innings (only 45). He is followed by Sehwag (245 innings), who also has an excellent strike rate up to 100 runs, after which Devilliers roars ahead. This is also seen in the overall strike rates listed above.

par(mar=c(4,4,2,2))

frames <- list("./sehwag.csv","./devilliers.csv","gayle.csv","maxwell.csv")

names <- list("Sehwag","Devilliers","Gayle","Maxwell")

relativeBatsmanSRODTT(frames,names)

Relative Runs Frequency Percentage

Sehwag leads in the percentage of runs in 10-run ranges up to 50 runs. Maxwell and Devilliers lead in 55-66 & 66-85 respectively.

frames <- list("./sehwag.csv","./devilliers.csv","gayle.csv","maxwell.csv")

names <- list("Sehwag","Devilliers","Gayle","Maxwell")

relativeRunsFreqPerfODTT(frames,names)

Percentage of 4s,6s in the runs scored

The plot below shows the percentage of runs made by the batsmen by way of 1s, 2s, 3s, 4s and 6s. It can be seen that Sehwag has the highest percentage of 4s (33.36%) in his overall runs in ODIs. Maxwell has the highest percentage of 6s (13.36%) in his ODI career. If we take 4s and 6s together, then Sehwag leads (33.36 + 5.95 = 39.31%), followed by Gayle (27.80 + 10.15 = 37.95%).


frames <- list("./sehwag.csv","./devilliers.csv","gayle.csv","maxwell.csv")

names <- list("Sehwag","Devilliers","Gayle","Maxwell")

runs4s6s <-batsman4s6s(frames,names)

print(runs4s6s)

##                Sehwag Devilliers Gayle Maxwell

## Runs(1s,2s,3s)  60.69      67.39 62.05   62.11

## 4s              33.36      24.28 27.80   24.53

## 6s               5.95       8.32 10.15   13.36

Runs forecast

The forecast for the batsman is shown below.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanPerfForecast("./sehwag.csv","Sehwag")

batsmanPerfForecast("./devilliers.csv","Devilliers")

batsmanPerfForecast("./gayle.csv","Gayle")

batsmanPerfForecast("./maxwell.csv","Maxwell")

dev.off()

## null device

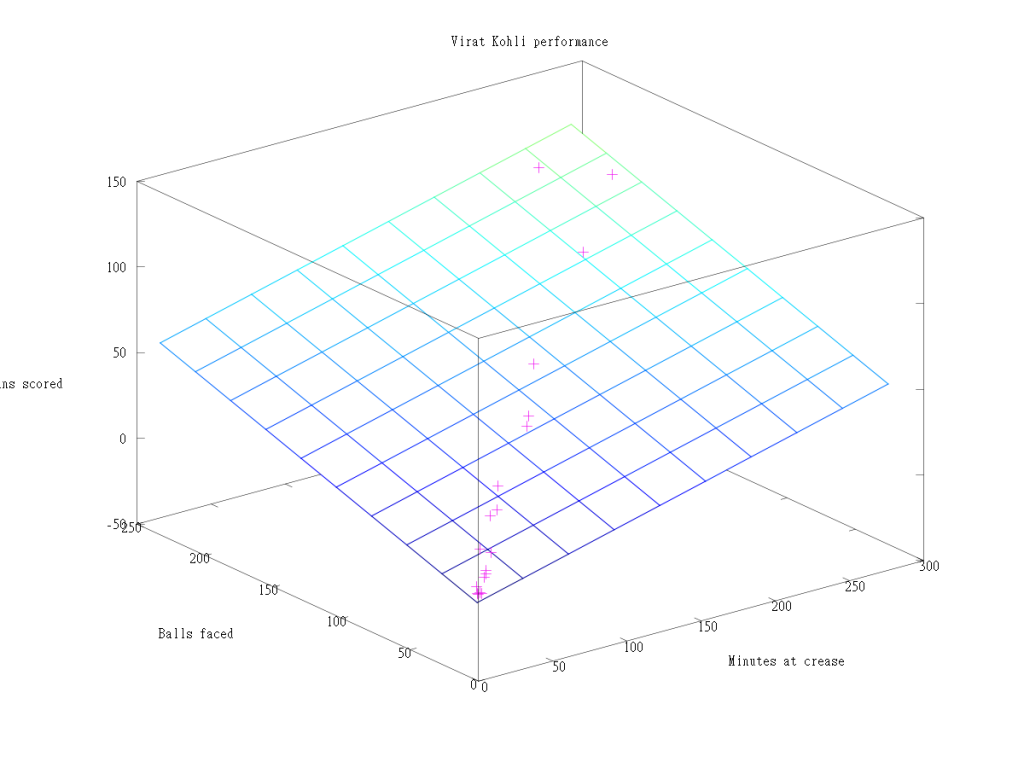

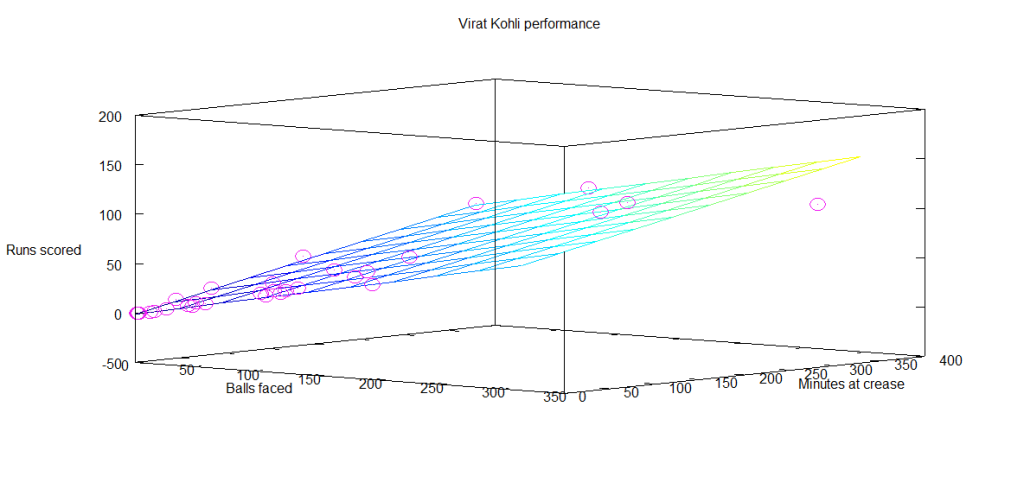

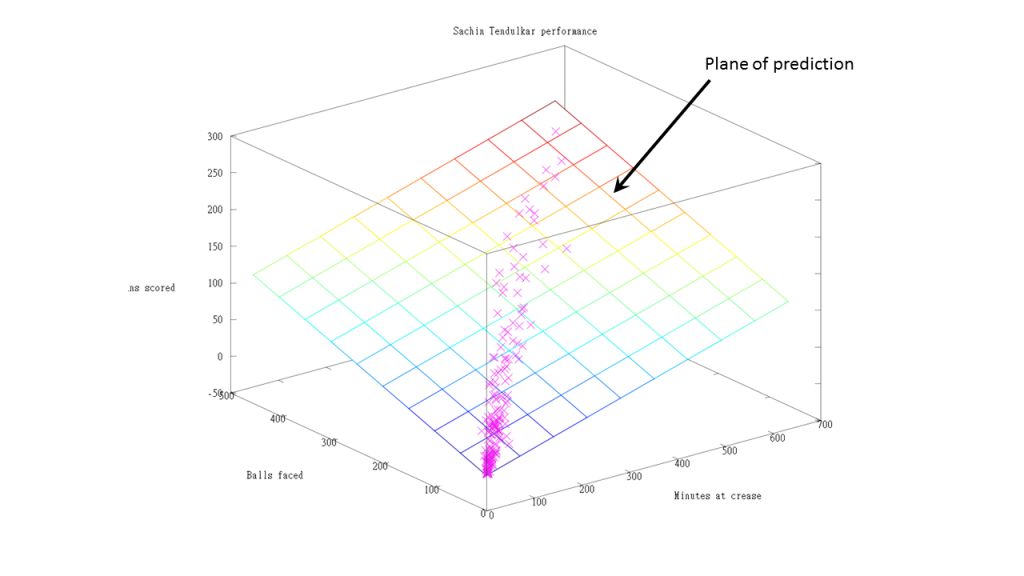

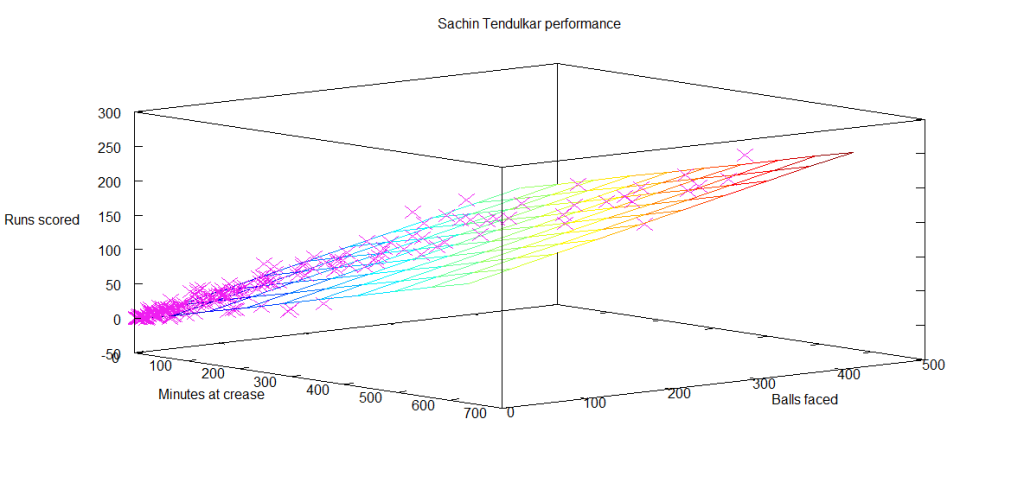

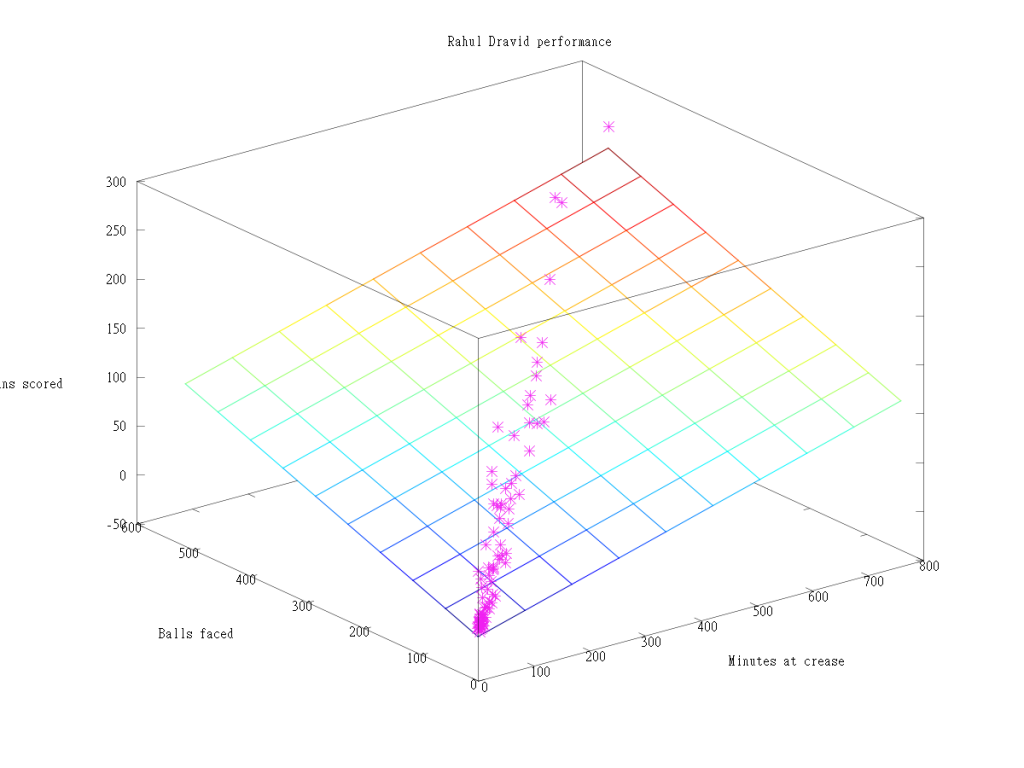

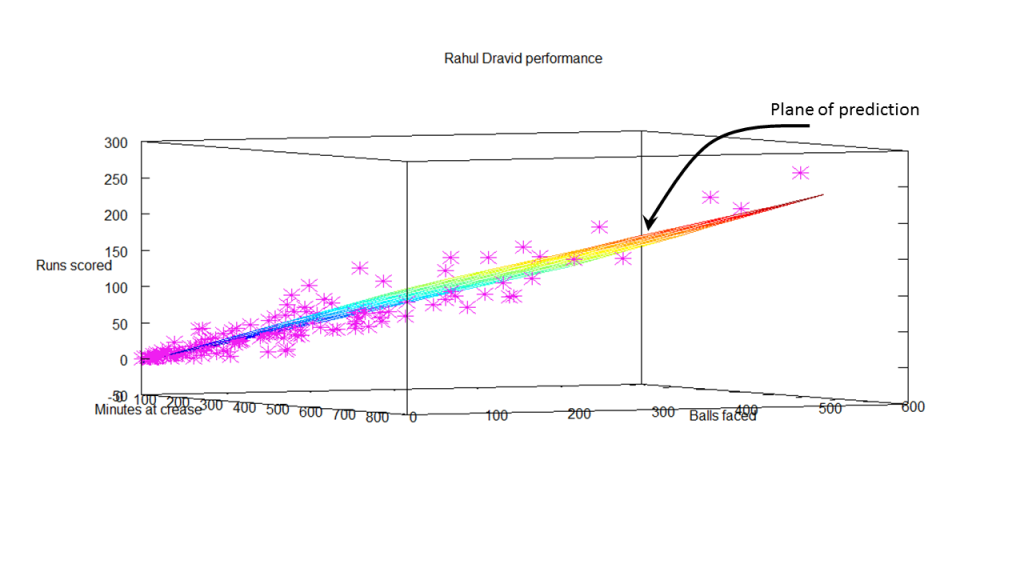

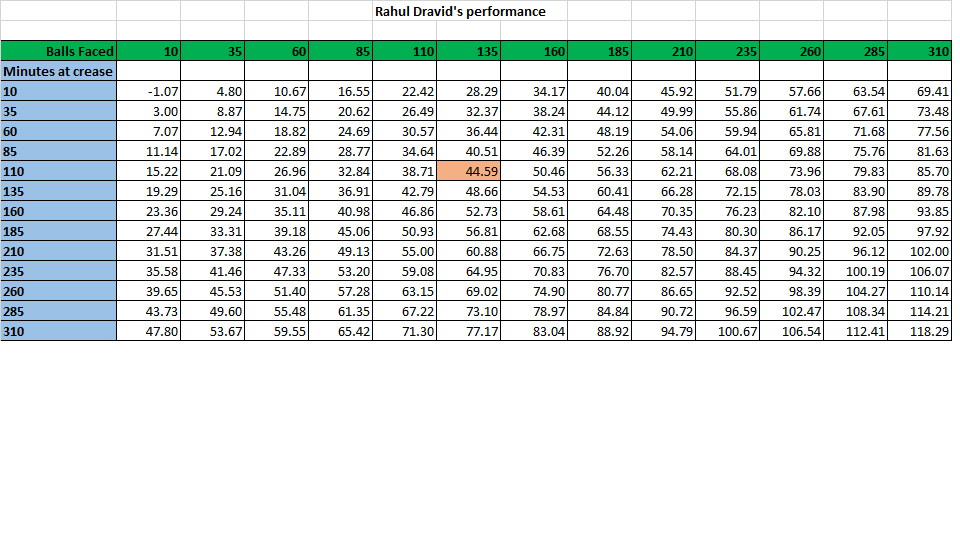

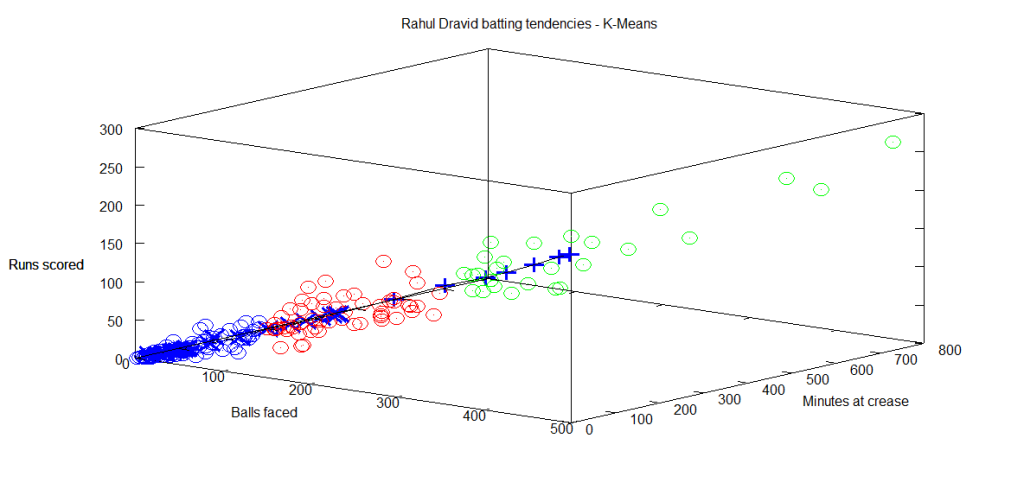

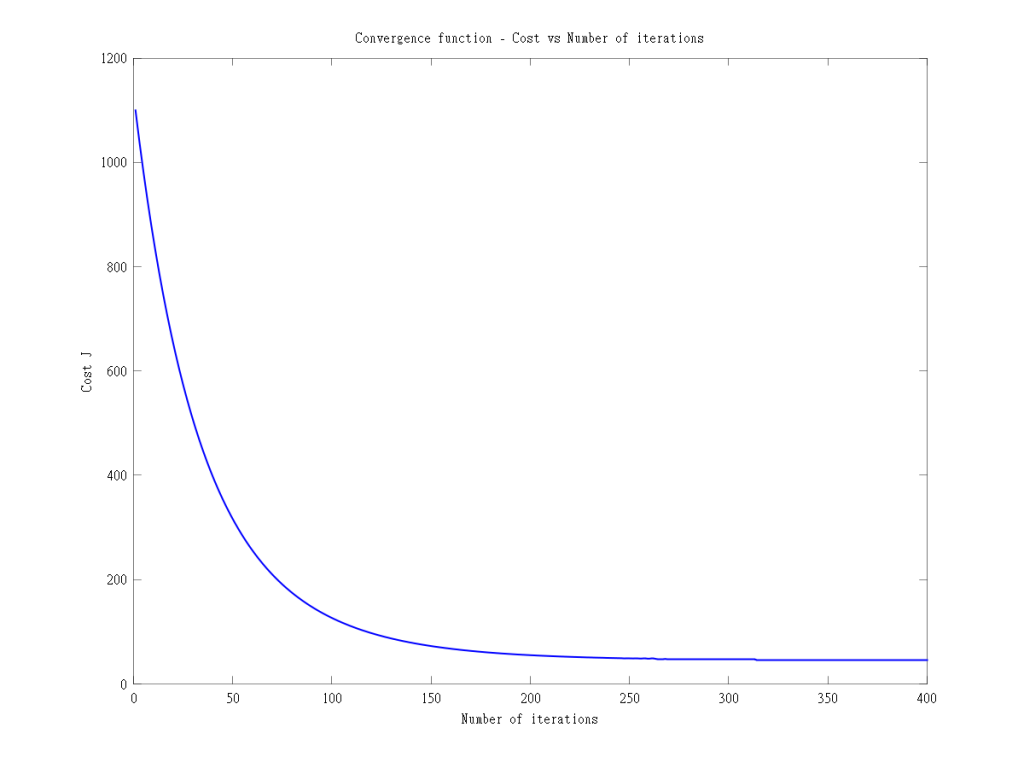

## 1

3D plot of Runs vs Balls Faced and Minutes at Crease

The plot is a scatter plot of Runs vs Balls Faced and Minutes at Crease. A prediction plane is fitted.

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./sehwag.csv","V Sehwag")

battingPerf3d("./devilliers.csv","AB Devilliers")

dev.off()

## null device

## 1

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./gayle.csv","C Gayle")

battingPerf3d("./maxwell.csv","G Maxwell")

dev.off()

## null device

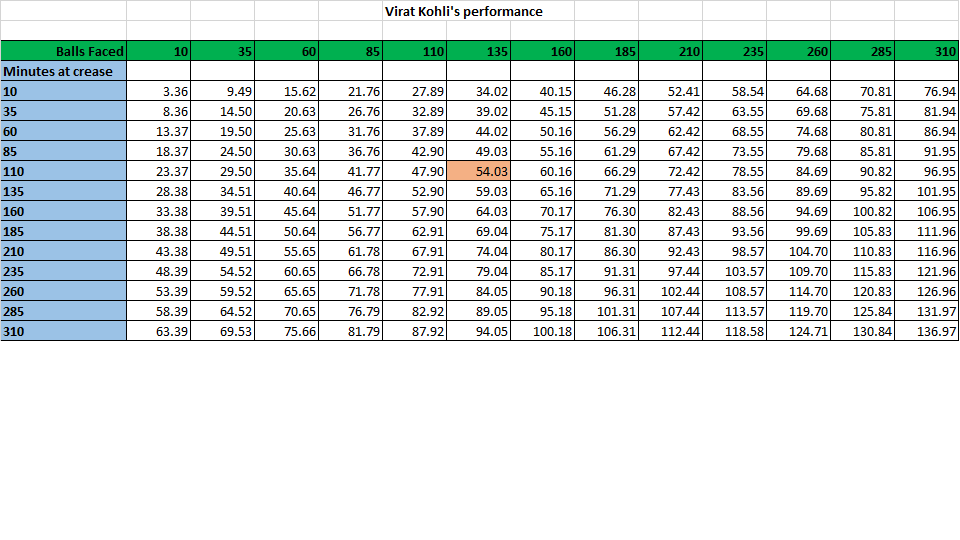

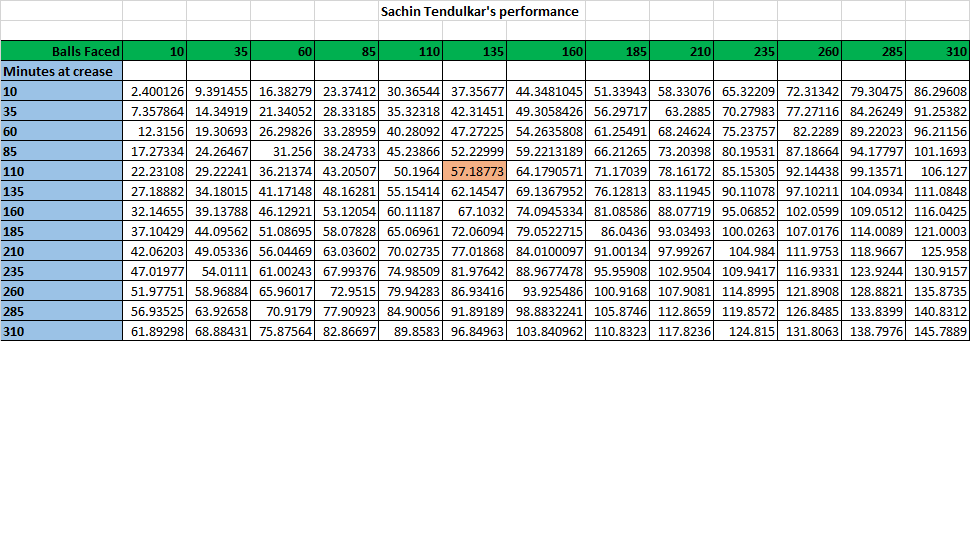

## 1

Predicting Runs given Balls Faced and Minutes at Crease

A multivariate regression plane is fitted for Runs against Balls Faced and Minutes at Crease.
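As a rough illustration of what fitting such a plane involves, the sketch below uses base R's lm() on synthetic innings. It is not cricketr's internal code; the data frame `innings` and its values are made up, and only the column names BF and Mins follow the post.

```r
set.seed(1)
# Synthetic innings: balls faced, minutes at crease and runs (illustrative only)
BF   <- round(runif(60, 5, 150))
Mins <- round(BF * 1.4 + rnorm(60, sd = 8))
Runs <- round(0.9 * BF + 0.1 * Mins + rnorm(60, sd = 6))
innings <- data.frame(Runs, BF, Mins)

# Fit the plane Runs = b0 + b1 * BF + b2 * Mins
fit <- lm(Runs ~ BF + Mins, data = innings)

# Predict runs for hypothetical combinations of balls faced and minutes at crease
newDF <- data.frame(BF = seq(10, 200, length = 10),
                    Mins = seq(30, 220, length = 10))
predicted <- cbind(newDF, Runs = round(predict(fit, newdata = newDF)))
print(predicted)
```

The three coefficients of `fit` define the plane, and predict() evaluates it at each row of `newDF`.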

BF <- seq( 10, 200,length=10)

Mins <- seq(30,220,length=10)

newDF <- data.frame(BF,Mins)

sehwag <- batsmanRunsPredict("./sehwag.csv","Sehwag",newdataframe=newDF)

devilliers <- batsmanRunsPredict("./devilliers.csv","Devilliers",newdataframe=newDF)

gayle <- batsmanRunsPredict("./gayle.csv","Gayle",newdataframe=newDF)

maxwell <- batsmanRunsPredict("./maxwell.csv","Maxwell",newdataframe=newDF)

The fitted model is then used to predict the runs that the batsmen will score for hypothetical values of Balls Faced and Minutes at Crease. It can be seen that Maxwell sets a searing pace in the predicted runs for a given Balls Faced and Minutes at Crease, followed by Sehwag. But we have to keep in mind that Maxwell has only around 1/5th of the innings of Sehwag (45 to Sehwag’s 245 innings). They are followed by Devilliers and then finally Gayle.

batsmen <-cbind(round(sehwag$Runs),round(devilliers$Runs),round(gayle$Runs),round(maxwell$Runs))

colnames(batsmen) <- c("Sehwag","Devilliers","Gayle","Maxwell")

newDF <- data.frame(round(newDF$BF),round(newDF$Mins))

colnames(newDF) <- c("BallsFaced","MinsAtCrease")

predictedRuns <- cbind(newDF,batsmen)

predictedRuns

##    BallsFaced MinsAtCrease Sehwag Devilliers Gayle Maxwell

## 1          10           30     11         12    11      18

## 2          31           51     33         32    28      43

## 3          52           72     55         52    46      67

## 4          73           93     77         71    63      92

## 5          94          114    100         91    81     117

## 6         116          136    122        111    98     141

## 7         137          157    144        130   116     166

## 8         158          178    167        150   133     191

## 9         179          199    189        170   151     215

## 10        200          220    211        190   168     240

Highest runs likelihood

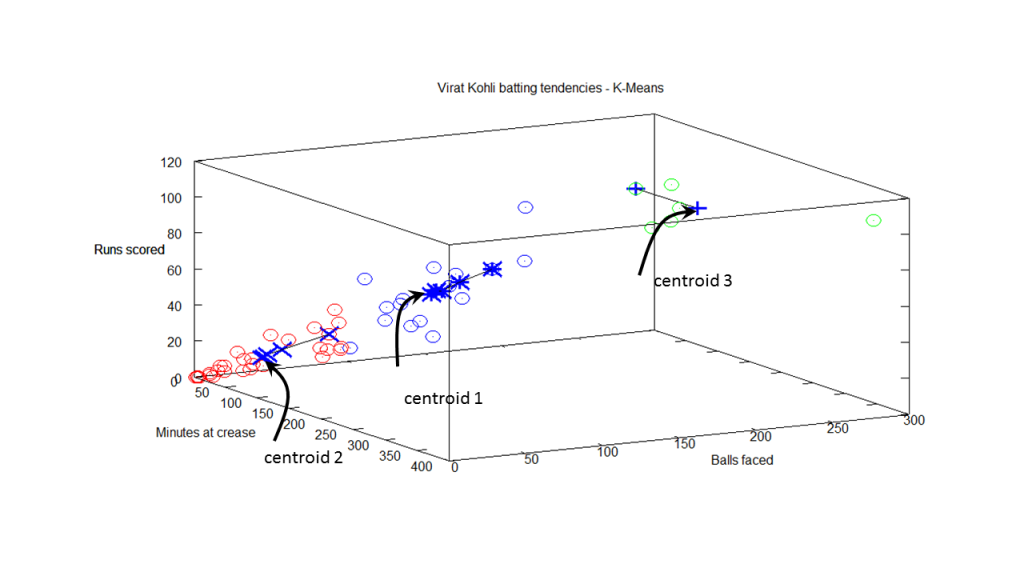

The plots below show the runs likelihood of each batsman, computed using K-Means. From the summaries that follow, Devilliers has almost a 30% likelihood (29.84%) of making around 90 runs, while Gayle and Sehwag each have roughly a 33-35% likelihood of making 40+ runs.
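One way to arrive at such likelihoods with K-Means is to cluster the innings and report each cluster's share. The sketch below does this with base R's kmeans() on synthetic data. The choice of three clusters is my guess from the three lines in each printed summary, and none of this is taken from cricketr's source.

```r
set.seed(7)
# Synthetic innings with three loose groups: short, medium and long stays
Runs <- c(rnorm(50, 10, 4), rnorm(30, 45, 8), rnorm(20, 100, 12))
BF   <- Runs * 1.1 + rnorm(100, sd = 3)
Mins <- BF * 1.4 + rnorm(100, sd = 4)
innings <- data.frame(Runs, BF, Mins)

# Partition the innings into 3 clusters (assumed number of clusters)
km <- kmeans(innings, centers = 3, nstart = 10)

# Share of innings in each cluster, read as a rough "likelihood" of that outcome
likelihood <- round(100 * km$size / sum(km$size), 2)

# Cluster centres (typical Runs, BF, Mins) next to their likelihood
summary_tbl <- data.frame(round(km$centers), likelihood)
print(summary_tbl)
```

Each row reads like one line of the summaries in this section: a likelihood, with the typical runs, balls and minutes of that cluster. Because K-Means starts from random centres, the percentages can shift slightly between runs unless a seed is set.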

A. Virender Sehwag

batsmanRunsLikelihood("./sehwag.csv","Sehwag")

## Summary of Sehwag 's runs scoring likelihood

## **************************************************

##

## There is a 35.22 % likelihood that Sehwag will make 46 Runs in 44 balls over 67 Minutes

## There is a 9.43 % likelihood that Sehwag will make 119 Runs in 106 balls over 158 Minutes

## There is a 55.35 % likelihood that Sehwag will make 12 Runs in 13 balls over 18 Minutes

B. AB Devilliers

batsmanRunsLikelihood("./devilliers.csv","Devilliers")

## Summary of Devilliers 's runs scoring likelihood

## **************************************************

##

## There is a 30.65 % likelihood that Devilliers will make 44 Runs in 43 balls over 60 Minutes

## There is a 29.84 % likelihood that Devilliers will make 91 Runs in 88 balls over 124 Minutes

## There is a 39.52 % likelihood that Devilliers will make 11 Runs in 15 balls over 21 Minutes

C. Chris Gayle

batsmanRunsLikelihood("./gayle.csv","Gayle")

## Summary of Gayle 's runs scoring likelihood

## **************************************************

##

## There is a 32.69 % likelihood that Gayle will make 47 Runs in 51 balls over 72 Minutes

## There is a 54.49 % likelihood that Gayle will make 10 Runs in 15 balls over 20 Minutes

## There is a 12.82 % likelihood that Gayle will make 109 Runs in 119 balls over 172 Minutes

D. Glenn Maxwell

batsmanRunsLikelihood("./maxwell.csv","Maxwell")

## Summary of Maxwell 's runs scoring likelihood

## **************************************************

##

## There is a 34.38 % likelihood that Maxwell will make 39 Runs in 29 balls over 35 Minutes

## There is a 15.62 % likelihood that Maxwell will make 89 Runs in 55 balls over 69 Minutes

## There is a 50 % likelihood that Maxwell will make 6 Runs in 7 balls over 9 Minutes

Average runs at ground and against opposition

A. Virender Sehwag

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./sehwag.csv","Sehwag")

batsmanAvgRunsOpposition("./sehwag.csv","Sehwag")

dev.off()

## null device

## 1

B. AB Devilliers

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./devilliers.csv","Devilliers")

batsmanAvgRunsOpposition("./devilliers.csv","Devilliers")

dev.off()

## null device

## 1

C. Chris Gayle

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./gayle.csv","Gayle")

batsmanAvgRunsOpposition("./gayle.csv","Gayle")

dev.off()

## null device

## 1

D. Glenn Maxwell

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./maxwell.csv","Maxwell")

batsmanAvgRunsOpposition("./maxwell.csv","Maxwell")

dev.off()

## null device

## 1

Moving Average of runs over career

The moving averages for the 4 batsmen indicate the following

1. The moving average of Devilliers and Maxwell is on the way up.

2. Sehwag shows a slight downward trend from his 2nd peak in 2011.

3. Gayle maintains a consistent 45 runs for the last few years.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanMovingAverage("./sehwag.csv","Sehwag")

batsmanMovingAverage("./devilliers.csv","Devilliers")

batsmanMovingAverage("./gayle.csv","Gayle")

batsmanMovingAverage("./maxwell.csv","Maxwell")

dev.off()

## null device

## 1

Check batsmen in-form, out-of-form

- Maxwell, Devilliers, Sehwag are in-form. This is also evident from the moving average plot

- Gayle is out-of-form
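The in-form check reported in the output that follows appears consistent with a one-sided, one-sample t-test of the recent sample mean against the career (population) mean. The sketch below recomputes the p value from the summary figures printed for Sehwag. It rests on that assumption and is not necessarily how checkBatsmanInForm() is implemented; the variable names are my own.

```r
# Summary figures as printed for Sehwag in the output that follows
pop_mean    <- 33.76
sample_mean <- 37.44
sample_sd   <- 55.15
n           <- 16

# One-sided, one-sample t statistic: is the recent mean below the career mean?
t_stat  <- (sample_mean - pop_mean) / (sample_sd / sqrt(n))
p_value <- pt(t_stat, df = n - 1)   # lower-tail probability

# Fail to reject H0 (in-form) when the p value exceeds alpha
alpha  <- 0.05
status <- if (p_value > alpha) "In-Form" else "Out-of-Form"
cat(sprintf("t = %.3f, p = %.4f, status = %s\n", t_stat, p_value, status))
```

A large p value means there is no evidence that recent scores have dropped below the career level, so the batsman is reported as in-form. A p value under 0.05, as for Gayle, flags a significant drop.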

checkBatsmanInForm("./sehwag.csv","Sehwag")

## *******************************************************************************************

##

## Population size: 143 Mean of population: 33.76

## Sample size: 16 Mean of sample: 37.44 SD of sample: 55.15

##

## Null hypothesis H0 : Sehwag 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Sehwag 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Sehwag 's Form Status: In-Form because the p value: 0.603525 is greater than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./devilliers.csv","Devilliers")

## *******************************************************************************************

##

## Population size: 111 Mean of population: 43.5

## Sample size: 13 Mean of sample: 57.62 SD of sample: 40.69

##

## Null hypothesis H0 : Devilliers 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Devilliers 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Devilliers 's Form Status: In-Form because the p value: 0.883541 is greater than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./gayle.csv","Gayle")

## *******************************************************************************************

##

## Population size: 140 Mean of population: 37.1

## Sample size: 16 Mean of sample: 17.25 SD of sample: 20.25

##

## Null hypothesis H0 : Gayle 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Gayle 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Gayle 's Form Status: Out-of-Form because the p value: 0.000609 is less than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./maxwell.csv","Maxwell")

## *******************************************************************************************

##

## Population size: 28 Mean of population: 25.25

## Sample size: 4 Mean of sample: 64.25 SD of sample: 36.97

##

## Null hypothesis H0 : Maxwell 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Maxwell 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Maxwell 's Form Status: In-Form because the p value: 0.948744 is greater than alpha= 0.05"

## *******************************************************************************************

Analysis of bowlers

- Mitchell Johnson (Aus) – Innings-150, Wickets – 239, Econ Rate : 4.83

- Lasith Malinga (SL)- Innings-182, Wickets – 287, Econ Rate : 5.26

- Dale Steyn (SA)- Innings-103, Wickets – 162, Econ Rate : 4.81

- Tim Southee (NZ)- Innings-96, Wickets – 135, Econ Rate : 5.33

Malinga has the highest number of innings and wickets, followed closely by Mitchell Johnson. Steyn and Southee have relatively fewer innings.

To get the bowler’s data use

malinga <- getPlayerDataOD(49758,dir=".",file="malinga.csv",type="bowling")

Wicket Frequency percentage

This plot gives the percentage frequency of each wicket haul (1, 2, 3, etc.)

par(mfrow=c(1,4))

par(mar=c(4,4,2,2))

bowlerWktsFreqPercent("./mitchell.csv","J Mitchell")

bowlerWktsFreqPercent("./malinga.csv","Malinga")

bowlerWktsFreqPercent("./steyn.csv","Steyn")

bowlerWktsFreqPercent("./southee.csv","southee")

dev.off()

## null device

## 1

Wickets Runs plot

The plot below gives a boxplot of the runs ranges for each of the wickets taken by the bowlers. M Johnson and Steyn are more economical than Malinga and Southee, corroborating the figures above.

par(mfrow=c(1,4))

par(mar=c(4,4,2,2))

bowlerWktsRunsPlot("./mitchell.csv","J Mitchell")

bowlerWktsRunsPlot("./malinga.csv","Malinga")

bowlerWktsRunsPlot("./steyn.csv","Steyn")

bowlerWktsRunsPlot("./southee.csv","southee")

dev.off()

## null device

## 1

Average wickets in different grounds and opposition

A. Mitchell Johnson

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./mitchell.csv","J Mitchell")

bowlerAvgWktsOpposition("./mitchell.csv","J Mitchell")

dev.off()

## null device

## 1

B. Lasith Malinga

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./malinga.csv","Malinga")

bowlerAvgWktsOpposition("./malinga.csv","Malinga")

dev.off()

## null device

## 1

C. Dale Steyn

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./steyn.csv","Steyn")

bowlerAvgWktsOpposition("./steyn.csv","Steyn")

dev.off()

## null device

## 1

D. Tim Southee

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./southee.csv","southee")

bowlerAvgWktsOpposition("./southee.csv","southee")

dev.off()

## null device

## 1

Relative bowling performance

The plot below shows that Mitchell Johnson and Southee have more wickets in the 3-4 wicket range, while Steyn and Malinga have more in the 1-2 wicket range.

frames <- list("./mitchell.csv","./malinga.csv","steyn.csv","southee.csv")

names <- list("M Johnson","Malinga","Steyn","Southee")

relativeBowlingPerf(frames,names)

Relative Economy Rate against wickets taken

Steyn has the best economy rate, followed by M Johnson. Malinga and Southee have poorer economy rates.

frames <- list("./mitchell.csv","./malinga.csv","steyn.csv","southee.csv")

names <- list("M Johnson","Malinga","Steyn","Southee")

relativeBowlingERODTT(frames,names)

Moving average of wickets over career

The career wicket graphs of Johnson and Steyn are on the upswing. Southee is maintaining a reasonable record, while Malinga shows a decline in ODI performance.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

bowlerMovingAverage("./mitchell.csv","M Johnson")

bowlerMovingAverage("./malinga.csv","Malinga")

bowlerMovingAverage("./steyn.csv","Steyn")

bowlerMovingAverage("./southee.csv","Southee")

dev.off()

## null device

## 1

Wickets forecast

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

bowlerPerfForecast("./mitchell.csv","M Johnson")

bowlerPerfForecast("./malinga.csv","Malinga")

bowlerPerfForecast("./steyn.csv","Steyn")

bowlerPerfForecast("./southee.csv","southee")

dev.off()

## null device

## 1

Check bowler in-form, out-of-form

All the bowlers except Southee are shown to be still in-form. Southee is flagged as out-of-form, with a p value of 0.044.

checkBowlerInForm("./mitchell.csv","J Mitchell")

## *******************************************************************************************

##

## Population size: 135 Mean of population: 1.55

## Sample size: 15 Mean of sample: 2 SD of sample: 1.07

##

## Null hypothesis H0 : J Mitchell 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : J Mitchell 's sample average is below the 95% confidence

## interval of population average

##

## [1] "J Mitchell 's Form Status: In-Form because the p value: 0.937917 is greater than alpha= 0.05"

## *******************************************************************************************

checkBowlerInForm("./malinga.csv","Malinga")

## *******************************************************************************************

##

## Population size: 163 Mean of population: 1.58

## Sample size: 19 Mean of sample: 1.58 SD of sample: 1.22

##

## Null hypothesis H0 : Malinga 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Malinga 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Malinga 's Form Status: In-Form because the p value: 0.5 is greater than alpha= 0.05"

## *******************************************************************************************

checkBowlerInForm("./steyn.csv","Steyn")

## *******************************************************************************************

##

## Population size: 93 Mean of population: 1.59

## Sample size: 11 Mean of sample: 1.45 SD of sample: 0.69

##

## Null hypothesis H0 : Steyn 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Steyn 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Steyn 's Form Status: In-Form because the p value: 0.257438 is greater than alpha= 0.05"

## *******************************************************************************************

checkBowlerInForm("./southee.csv","southee")

## *******************************************************************************************

##

## Population size: 86 Mean of population: 1.48

## Sample size: 10 Mean of sample: 0.8 SD of sample: 1.14

##

## Null hypothesis H0 : southee 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : southee 's sample average is below the 95% confidence

## interval of population average

##

## [1] "southee 's Form Status: Out-of-Form because the p value: 0.044302 is less than alpha= 0.05"

## **********************************************************************************************************

Key findings

Here are some key conclusions

ODI batsmen

- AB Devilliers has high frequency of runs in the 60-120 range and the highest average

- Sehwag has a high number of innings (245, second only to Gayle’s 264) and a good strike rate

- Maxwell has the best strike rate but it should be kept in mind that he has 1/5 of the innings of Sehwag. We need to see how he progresses further

- Sehwag has the highest percentage of 4s in the runs scored, while Maxwell has the highest percentage of 6s

- For hypothetical Balls Faced and Minutes at Crease, Maxwell will score the most runs, followed by Sehwag

- The moving average indicates that the best is yet to come for Devilliers and Maxwell. Sehwag has a few more years in him, while Gayle shows a decline in ODI performance and is indicated as out of form.

ODI bowlers

- Malinga has played the most innings and also has the most wickets, though he has a poor economy rate

- M Johnson is the most effective in the 3-4 wicket range followed by Southee

- M Johnson and Steyn have the best overall economy rates, followed by Malinga and Southee

- M Johnson’s and Steyn’s careers are on the upswing, Southee maintains a steady, consistent performance, while Malinga shows a downward trend

Hasta la vista! I’ll be back!

Watch this space!

Also see my other posts in R

- Introducing cricketr! : An R package to analyze performances of cricketers

- cricketr digs the Ashes!

- A peek into literacy in India: Statistical Learning with R

- A crime map of India in R – Crimes against women

- Analyzing cricket’s batting legends – Through the mirage with R

- Mirror, mirror . the best batsman of them all?

You may also like

- A closer look at “Robot Horse on a Trot” in Android

- What’s up Watson? Using IBM Watson’s QAAPI with Bluemix, NodeExpress – Part 1

- Bend it like Bluemix, MongoDB with autoscaling – Part 2

- Informed choices through Machine Learning : Analyzing Kohli, Tendulkar and Dravid

- TWS-4: Gossip protocol: Epidemics and rumors to the rescue

- Deblurring with OpenCV: Weiner filter reloaded

cricketr digs the Ashes!

Published in R bloggers: cricketr digs the Ashes

Introduction

In some circles the Ashes is considered the ‘mother of all cricketing battles’. But, being a staunch supporter of all things Indian, cricket or otherwise, I have to say that the Ashes pales in comparison to an India-Pakistan match. After all, what are a few frowns and raised eyebrows at the Ashes in comparison to the seething emotions and reckless exuberance of Indian fans?

Anyway, the Ashes are an interesting duel and I have decided to do some cricketing analysis using my R package cricketr. For this analysis I have chosen the top 2 batsmen and top 2 bowlers from both the Australian and English sides.

Batsmen

- Steven Smith (Aus) – Innings – 58 , Ave: 58.52, Strike Rate: 55.90

- David Warner (Aus) – Innings – 76, Ave: 46.86, Strike Rate: 73.88

- Alastair Cook (Eng) – Innings – 208 , Ave: 46.62, Strike Rate: 46.33

- J E Root (Eng) – Innings – 53, Ave: 54.02, Strike Rate: 51.30

Bowlers

- Mitchell Johnson (Aus) – Innings-131, Wickets – 299, Econ Rate : 3.28

- Peter Siddle (Aus) – Innings – 104 , Wickets- 192, Econ Rate : 2.95

- James Anderson (Eng) – Innings – 199 , Wickets- 406, Econ Rate : 3.05

- Stuart Broad (Eng) – Innings – 148 , Wickets- 296, Econ Rate : 3.08

In my opinion, if any 2 of the 4 in either team click, they will be able to swing the match in favor of their team.

I have interspersed the plots with a few comments. Feel free to draw your conclusions!




The analysis is included below. Note: This post has also been hosted at Rpubs as cricketr digs the Ashes!

You can also download this analysis as a PDF file from cricketr digs the Ashes!

Do check out my interactive Shiny app implementation using the cricketr package – Sixer – R package cricketr’s new Shiny avatar

Note: If you would like to do a similar analysis for a different set of batsmen and bowlers, you can clone/download my skeleton cricketr template from Github (which is the R Markdown file I have used for the analysis below). You will only need to make appropriate changes for the players you are interested in. Only a familiarity with R and R Markdown is needed.

Important note: Do check out my other posts using cricketr at cricketr-posts

The package can be installed directly from CRAN

if (!require("cricketr")){

install.packages("cricketr")

}

library(cricketr)

or from Github

library(devtools)

install_github("tvganesh/cricketr")

library(cricketr)

Analyses of Batsmen

The following plots give the analysis of the 2 Australian and 2 English batsmen. It must be kept in mind that Cook has more innings than all the rest put together. Smith has the best average, and Warner has the best strike rate.

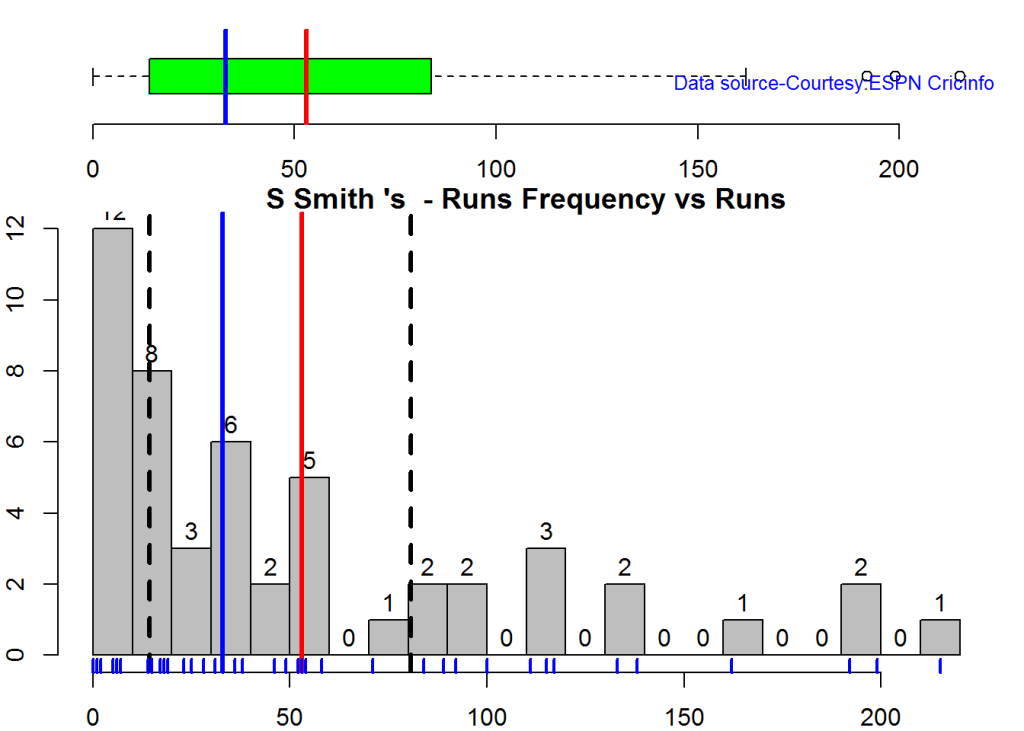

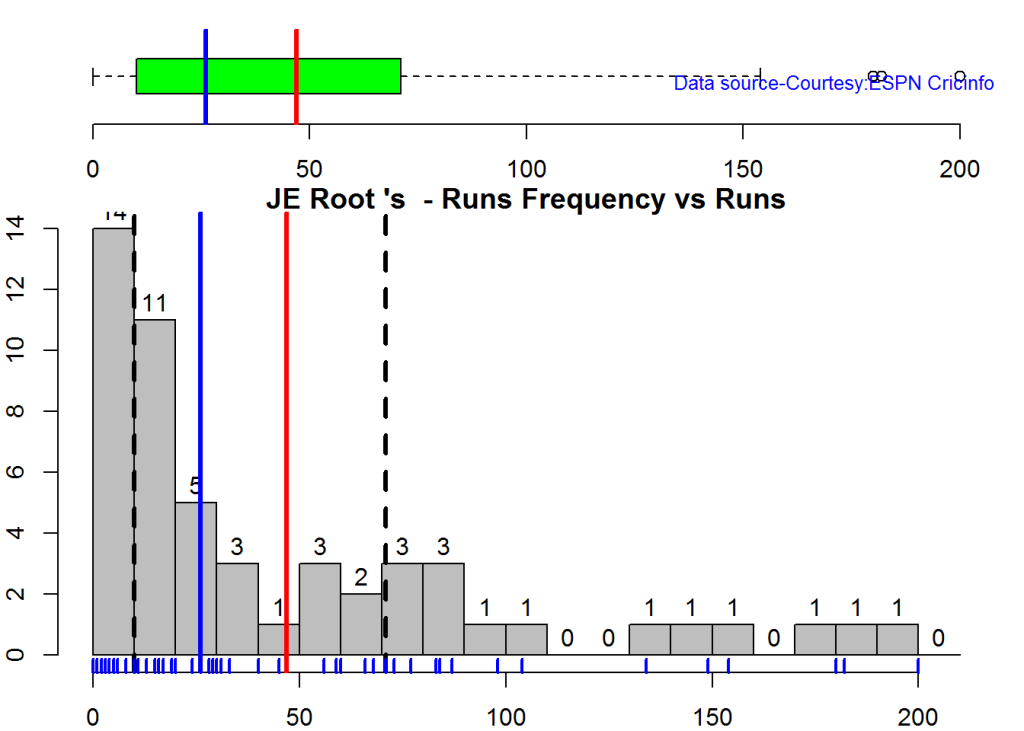

Box Histogram Plot

This plot shows a combined boxplot of the Runs ranges and a histogram of the Runs Frequency

batsmanPerfBoxHist("./smith.csv","S Smith")

batsmanPerfBoxHist("./warner.csv","D Warner")

batsmanPerfBoxHist("./cook.csv","A Cook")

batsmanPerfBoxHist("./root.csv","JE Root")

Plot of 4s, 6s and the type of dismissals

A. Steven Smith

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./smith.csv","S Smith")

batsman6s("./smith.csv","S Smith")

batsmanDismissals("./smith.csv","S Smith")

dev.off()

## null device

## 1

B. David Warner

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./warner.csv","D Warner")

batsman6s("./warner.csv","D Warner")

batsmanDismissals("./warner.csv","D Warner")dev.off()## null device

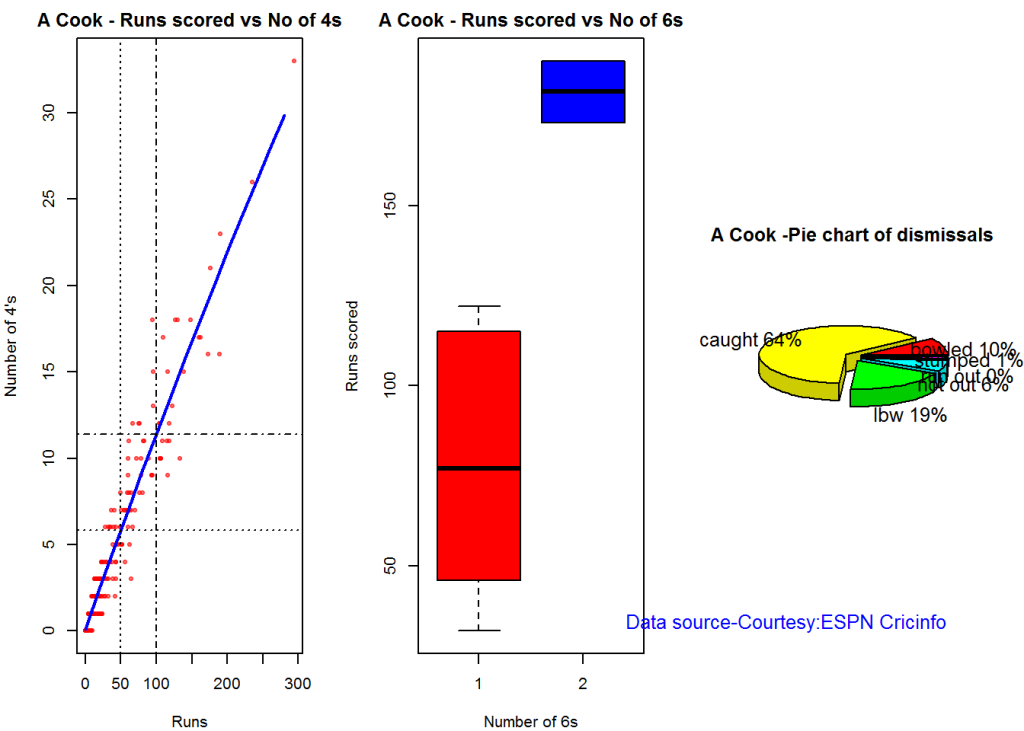

## 1C. Alistair Cook

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./cook.csv","A Cook")

batsman6s("./cook.csv","A Cook")

batsmanDismissals("./cook.csv","A Cook")

dev.off()

## null device

## 1

D. J E Root

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./root.csv","JE Root")

batsman6s("./root.csv","JE Root")

batsmanDismissals("./root.csv","JE Root")dev.off()## null device

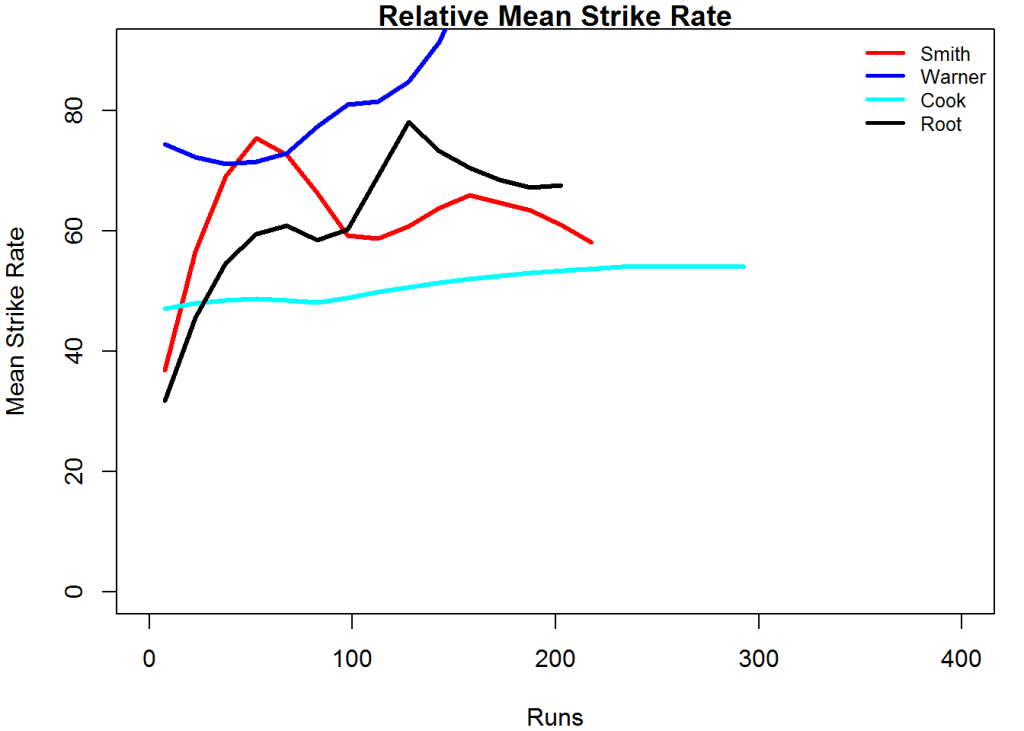

## 1Relative Mean Strike Rate

In this first plot I plot the Mean Strike Rate of the batsmen. It can be seen that Warner has the best strike rate (it goes outside the plot!) followed by Smith in the range 20-100. Root has a good strike rate above a hundred runs. Cook maintains a good strike rate.

par(mar=c(4,4,2,2))

frames <- list("./smith.csv","./warner.csv","cook.csv","root.csv")

names <- list("Smith","Warner","Cook","Root")

relativeBatsmanSR(frames,names)

Relative Runs Frequency Percentage

The plot below shows the percentage contribution in each 10 runs bucket over the entire career. It can be seen that Smith pops up above the rest with remarkable regularity. Cook is consistent over the entire range.

frames <- list("./smith.csv","./warner.csv","cook.csv","root.csv")

names <- list("Smith","Warner","Cook","Root")

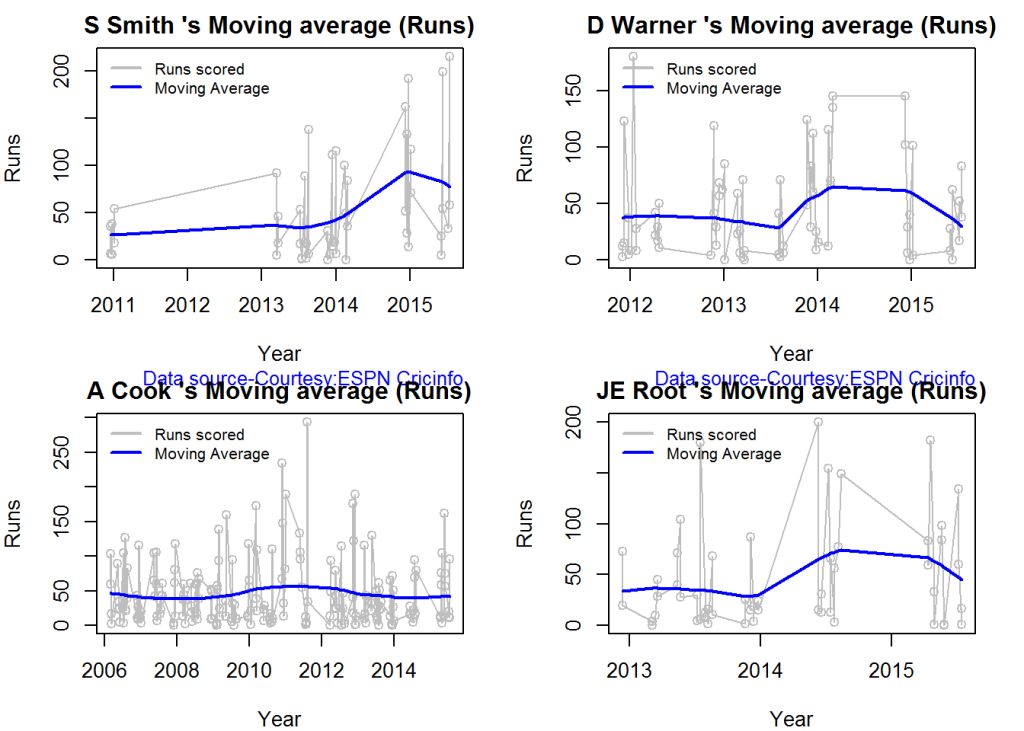

relativeRunsFreqPerf(frames,names)Moving Average of runs over career

The moving average for the 4 batsmen indicates the following: S Smith is the most promising, with a marked spike in performance. Cook maintains a steady pace and is consistent over the years, averaging around 50.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanMovingAverage("./smith.csv","S Smith")

batsmanMovingAverage("./warner.csv","D Warner")

batsmanMovingAverage("./cook.csv","A Cook")

batsmanMovingAverage("./root.csv","JE Root")

dev.off()

## null device

## 1

Runs forecast

The forecast for the batsmen is shown below. As before, Cook’s performance is really consistent across the years and the forecast is good for the years ahead. In Cook’s case it can be seen that the forecasted runs track the actual runs reasonably closely.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanPerfForecast("./smith.csv","S Smith")

batsmanPerfForecast("./warner.csv","D Warner")

batsmanPerfForecast("./cook.csv","A Cook")## Warning in HoltWinters(ts.train): optimization difficulties: ERROR:

## ABNORMAL_TERMINATION_IN_LNSRCHbatsmanPerfForecast("./root.csv","JE Root")dev.off()## null device

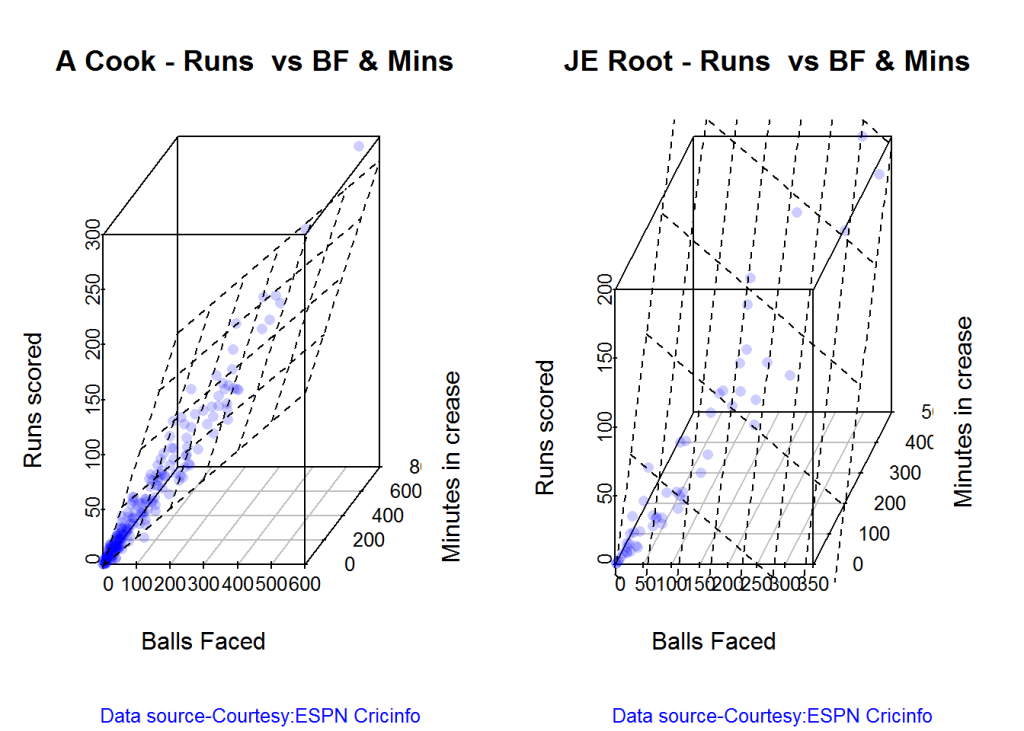

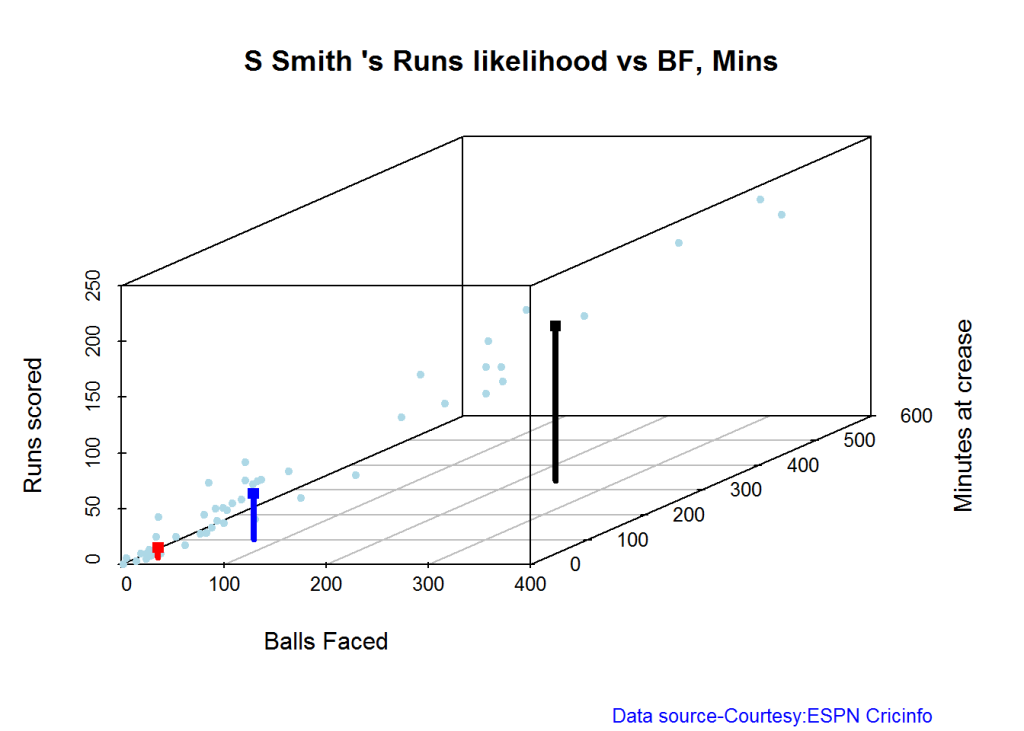

## 13D plot of Runs vs Balls Faced and Minutes at Crease

The plot is a scatter plot of Runs vs Balls faced and Minutes at Crease. A prediction plane is fitted

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./smith.csv","S Smith")

battingPerf3d("./warner.csv","D Warner")

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./cook.csv","A Cook")

battingPerf3d("./root.csv","JE Root")

dev.off()

## null device

## 1

Predicting Runs given Balls Faced and Minutes at Crease

A multi-variate regression plane is fitted between Runs and Balls faced +Minutes at crease.

BF <- seq( 10, 400,length=15)

Mins <- seq(30,600,length=15)

newDF <- data.frame(BF,Mins)

smith <- batsmanRunsPredict("./smith.csv","S Smith",newdataframe=newDF)

warner <- batsmanRunsPredict("./warner.csv","D Warner",newdataframe=newDF)

cook <- batsmanRunsPredict("./cook.csv","A Cook",newdataframe=newDF)

root <- batsmanRunsPredict("./root.csv","JE Root",newdataframe=newDF)

The fitted model is then used to predict the runs that the batsmen will score for a given Balls faced and Minutes at crease. It can be seen that Warner sets a searing pace in the predicted runs for a given Balls Faced and Minutes at crease, while Smith and Root are neck and neck in the predicted runs.

batsmen <-cbind(round(smith$Runs),round(warner$Runs),round(cook$Runs),round(root$Runs))

colnames(batsmen) <- c("Smith","Warner","Cook","Root")

newDF <- data.frame(round(newDF$BF),round(newDF$Mins))

colnames(newDF) <- c("BallsFaced","MinsAtCrease")

predictedRuns <- cbind(newDF,batsmen)

predictedRuns

## BallsFaced MinsAtCrease Smith Warner Cook Root

## 1 10 30 9 12 6 9

## 2 38 71 25 33 20 25

## 3 66 111 42 53 33 42

## 4 94 152 58 73 47 59

## 5 121 193 75 93 60 75

## 6 149 234 91 114 74 92

## 7 177 274 108 134 88 109

## 8 205 315 124 154 101 125

## 9 233 356 141 174 115 142

## 10 261 396 158 195 128 159

## 11 289 437 174 215 142 175

## 12 316 478 191 235 155 192

## 13 344 519 207 255 169 208

## 14 372 559 224 276 182 225

## 15 400 600 240 296 196 242
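The fit-then-predict step above can be sketched with base R alone. This is only an illustration on synthetic data, not cricketr's internal code: the `innings` data frame, its values and the coefficients used to simulate it are assumptions made up for the example.

```r
# Synthetic innings data (hypothetical values, for illustration only)
set.seed(42)
n <- 100
BF <- round(runif(n, 5, 300))               # balls faced
Mins <- round(BF * 1.4 + rnorm(n, 0, 10))   # minutes at crease, roughly tied to balls faced
Runs <- round(0.45 * BF + 0.1 * Mins + rnorm(n, 0, 8))
innings <- data.frame(Runs, BF, Mins)

# Fit a plane: Runs as a linear function of balls faced and minutes at crease
fit <- lm(Runs ~ BF + Mins, data = innings)

# Predict on the same grid of inputs used in the post
newDF <- data.frame(BF = seq(10, 400, length.out = 15),
                    Mins = seq(30, 600, length.out = 15))
predicted <- round(predict(fit, newdata = newDF))

# Inputs and predicted runs side by side
cbind(round(newDF), Runs = predicted)
```

Because balls faced and minutes at crease move together, the two individual coefficients are hard to interpret on their own; the predictions along the grid are the more useful output.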

Highest runs likelihood

The plots below show the runs likelihood of each batsman, computed with K-Means. It can be seen that Smith has the best likelihood, around 40%, of scoring around 41 runs, followed by Root who has a 28.3% likelihood of scoring around 81 runs.
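One way to read these likelihoods: cluster the innings on Runs, Balls Faced and Minutes, then report each cluster's share of innings together with its centroid. The sketch below does this with `kmeans()` from base R on synthetic data. The data and the choice of 3 clusters are assumptions for illustration, and cricketr's own implementation may differ in detail.

```r
# Synthetic innings (hypothetical data): many short innings, fewer long ones
set.seed(7)
BF <- pmax(1, c(rnorm(60, 30, 10), rnorm(30, 110, 20), rnorm(10, 240, 30)))
Mins <- BF * 1.4 + rnorm(100, 0, 8)
Runs <- BF * 0.55 + rnorm(100, 0, 6)
innings <- data.frame(Runs, BF, Mins)

# Group the innings into 3 clusters
km <- kmeans(innings, centers = 3, nstart = 20)

# Share of innings in each cluster, read as the "likelihood" of that kind of innings
likelihood <- round(100 * km$size / sum(km$size), 2)

# One row per cluster: likelihood plus the centroid (typical Runs, BF, Mins)
summary_tbl <- data.frame(likelihood, round(km$centers))
summary_tbl[order(-summary_tbl$likelihood), ]
```

Each row then reads like the package output: a percentage likelihood of making roughly that many runs in that many balls over that many minutes.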

A. Steven Smith

batsmanRunsLikelihood("./smith.csv","S Smith")

## Summary of S Smith 's runs scoring likelihood

## **************************************************

##

## There is a 40 % likelihood that S Smith will make 41 Runs in 73 balls over 101 Minutes

## There is a 36 % likelihood that S Smith will make 9 Runs in 21 balls over 27 Minutes

## There is a 24 % likelihood that S Smith will make 139 Runs in 237 balls over 338 Minutes

B. David Warner

batsmanRunsLikelihood("./warner.csv","D Warner")

## Summary of D Warner 's runs scoring likelihood

## **************************************************

##

## There is a 11.11 % likelihood that D Warner will make 134 Runs in 159 balls over 263 Minutes

## There is a 63.89 % likelihood that D Warner will make 17 Runs in 25 balls over 37 Minutes

## There is a 25 % likelihood that D Warner will make 73 Runs in 105 balls over 156 Minutes

C. Alastair Cook

batsmanRunsLikelihood("./cook.csv","A Cook")

## Summary of A Cook 's runs scoring likelihood

## **************************************************

##

## There is a 27.72 % likelihood that A Cook will make 64 Runs in 140 balls over 195 Minutes

## There is a 59.9 % likelihood that A Cook will make 15 Runs in 32 balls over 46 Minutes

## There is a 12.38 % likelihood that A Cook will make 141 Runs in 300 balls over 420 Minutes

D. J E Root

batsmanRunsLikelihood("./root.csv","JE Root")

## Summary of JE Root 's runs scoring likelihood

## **************************************************

##

## There is a 28.3 % likelihood that JE Root will make 81 Runs in 158 balls over 223 Minutes

## There is a 7.55 % likelihood that JE Root will make 179 Runs in 290 balls over 425 Minutes

## There is a 64.15 % likelihood that JE Root will make 16 Runs in 39 balls over 59 Minutes

Average runs at ground and against opposition

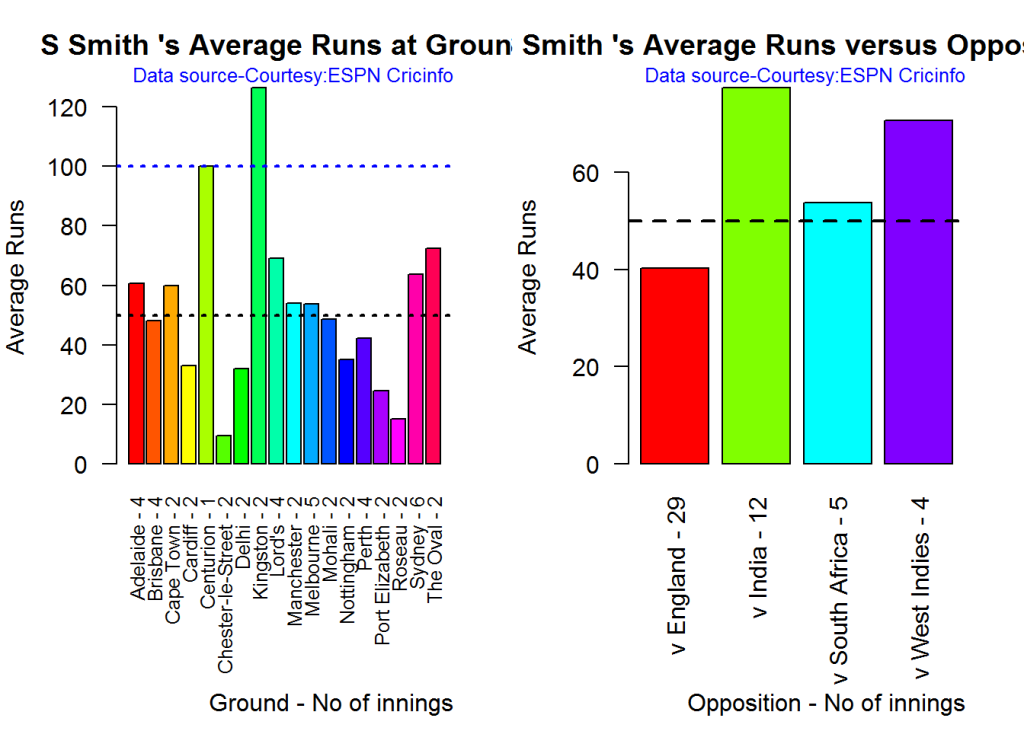

A. Steven Smith

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./smith.csv","S Smith")

batsmanAvgRunsOpposition("./smith.csv","S Smith")

dev.off()

## null device

## 1

B. David Warner

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./warner.csv","D Warner")

batsmanAvgRunsOpposition("./warner.csv","D Warner")

dev.off()

## null device

## 1

C. Alastair Cook

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./cook.csv","A Cook")

batsmanAvgRunsOpposition("./cook.csv","A Cook")

dev.off()

## null device

## 1

D. J E Root

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

batsmanAvgRunsGround("./root.csv","JE Root")

batsmanAvgRunsOpposition("./root.csv","JE Root")

dev.off()

## null device

## 1

Analysis of bowlers

- Mitchell Johnson (Aus) – Innings-131, Wickets – 299, Econ Rate : 3.28

- Peter Siddle (Aus) – Innings – 104 , Wickets- 192, Econ Rate : 2.95

- James Anderson (Eng) – Innings – 199 , Wickets- 406, Econ Rate : 3.05

- Stuart Broad (Eng) – Innings – 148 , Wickets- 296, Econ Rate : 3.08

Anderson has the highest number of innings and wickets, followed by Johnson and Broad, who are neck and neck with respect to wickets. Johnson is on the more expensive side though. Siddle has fewer innings but a good economy rate.

Wicket Frequency percentage

This plot gives the percentage frequency of each wicket haul (1, 2, 3, etc.)

par(mfrow=c(1,4))

par(mar=c(4,4,2,2))

bowlerWktsFreqPercent("./johnson.csv","Johnson")

bowlerWktsFreqPercent("./siddle.csv","Siddle")

bowlerWktsFreqPercent("./broad.csv","Broad")

bowlerWktsFreqPercent("./anderson.csv","Anderson")

dev.off()

## null device

## 1

Wickets Runs plot

The plot below gives a boxplot of the runs ranges for each of the wickets taken by the bowlers

par(mfrow=c(1,4))

par(mar=c(4,4,2,2))

bowlerWktsRunsPlot("./johnson.csv","Johnson")

bowlerWktsRunsPlot("./siddle.csv","Siddle")

bowlerWktsRunsPlot("./broad.csv","Broad")

bowlerWktsRunsPlot("./anderson.csv","Anderson")dev.off()## null device

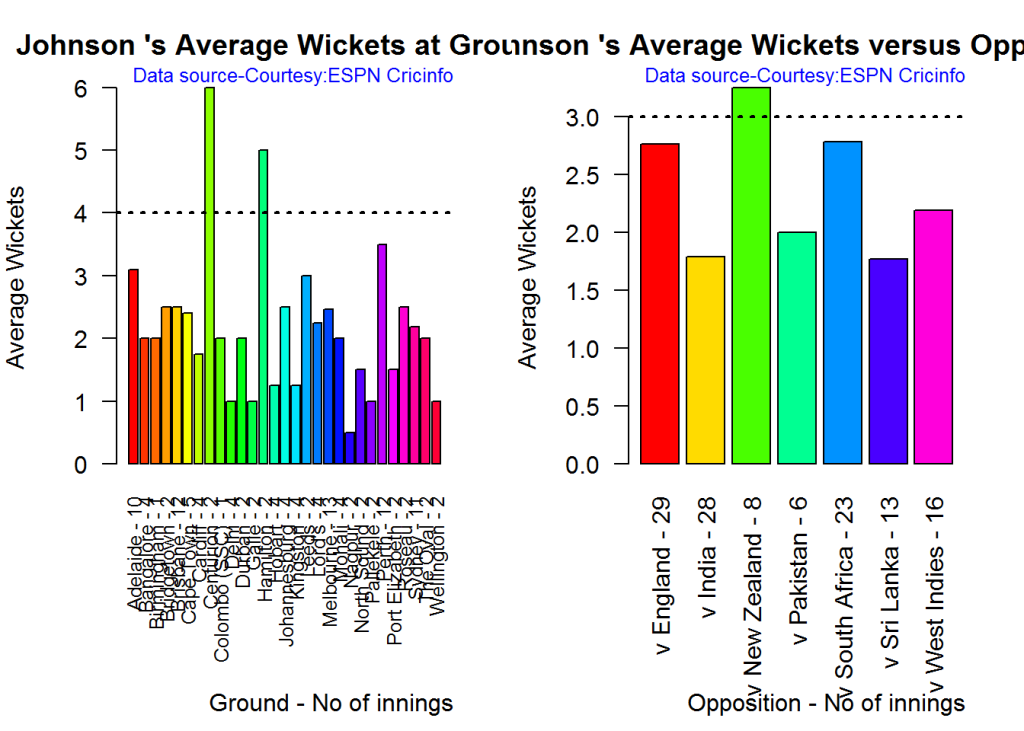

## 1Average wickets in different grounds and opposition

A. Mitchell Johnson

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./johnson.csv","Johnson")

bowlerAvgWktsOpposition("./johnson.csv","Johnson")dev.off()## null device

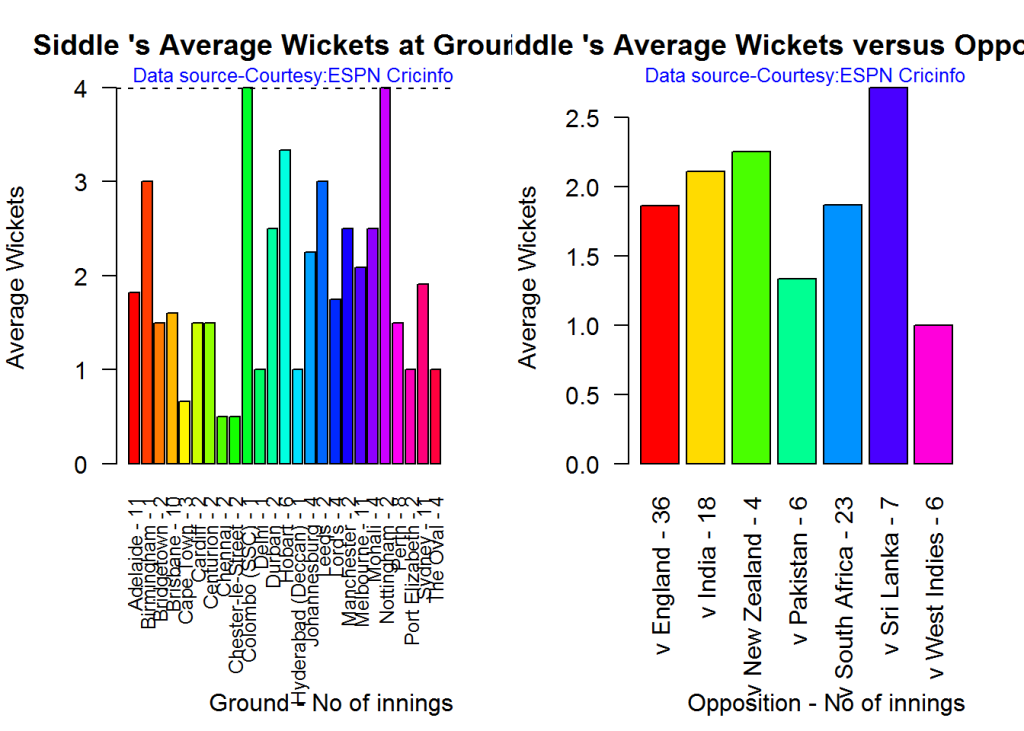

## 1B. Peter Siddle

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./siddle.csv","Siddle")

bowlerAvgWktsOpposition("./siddle.csv","Siddle")dev.off()## null device

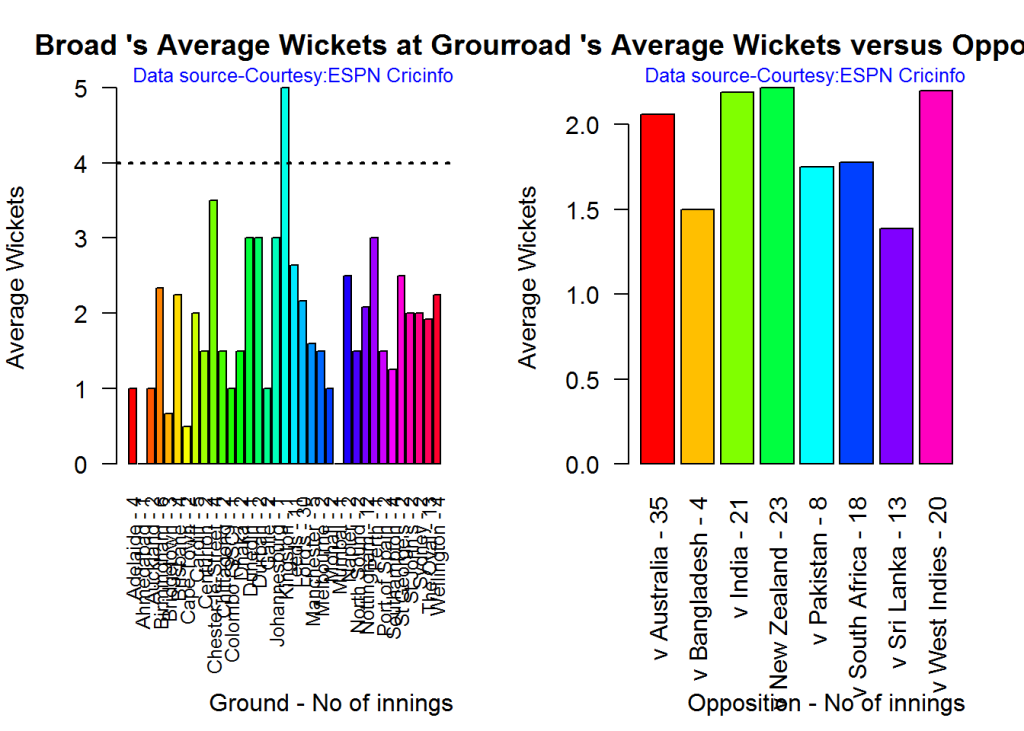

## 1C. Stuart Broad

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./broad.csv","Broad")

bowlerAvgWktsOpposition("./broad.csv","Broad")dev.off()## null device

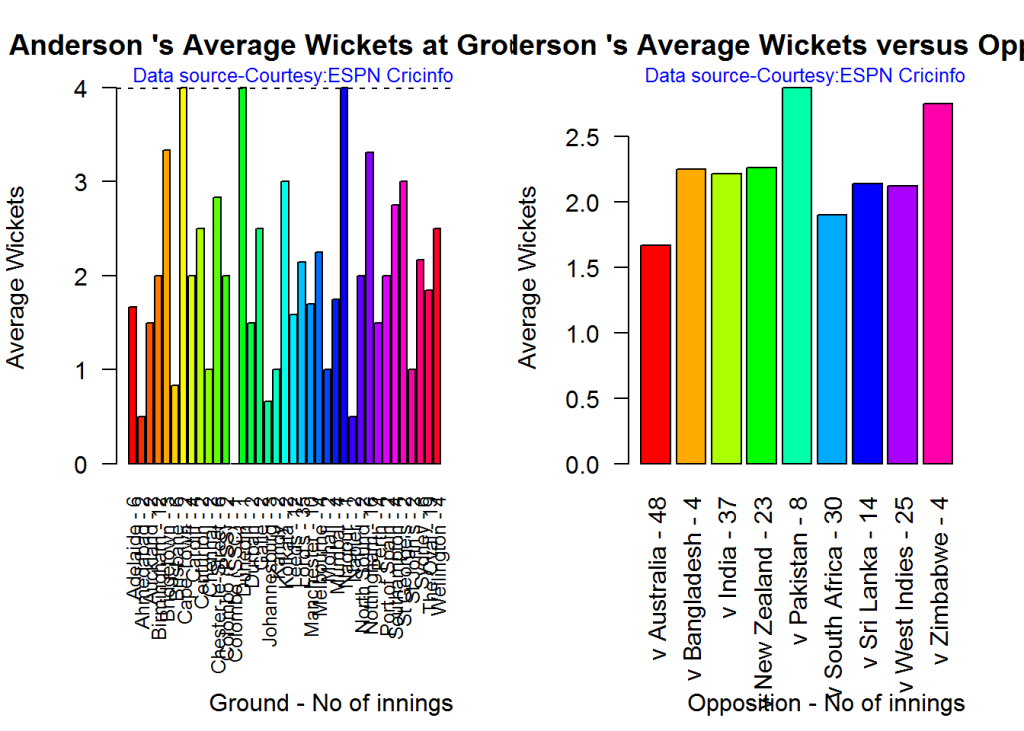

## 1D. James Anderson

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

bowlerAvgWktsGround("./anderson.csv","Anderson")

bowlerAvgWktsOpposition("./anderson.csv","Anderson")dev.off()## null device

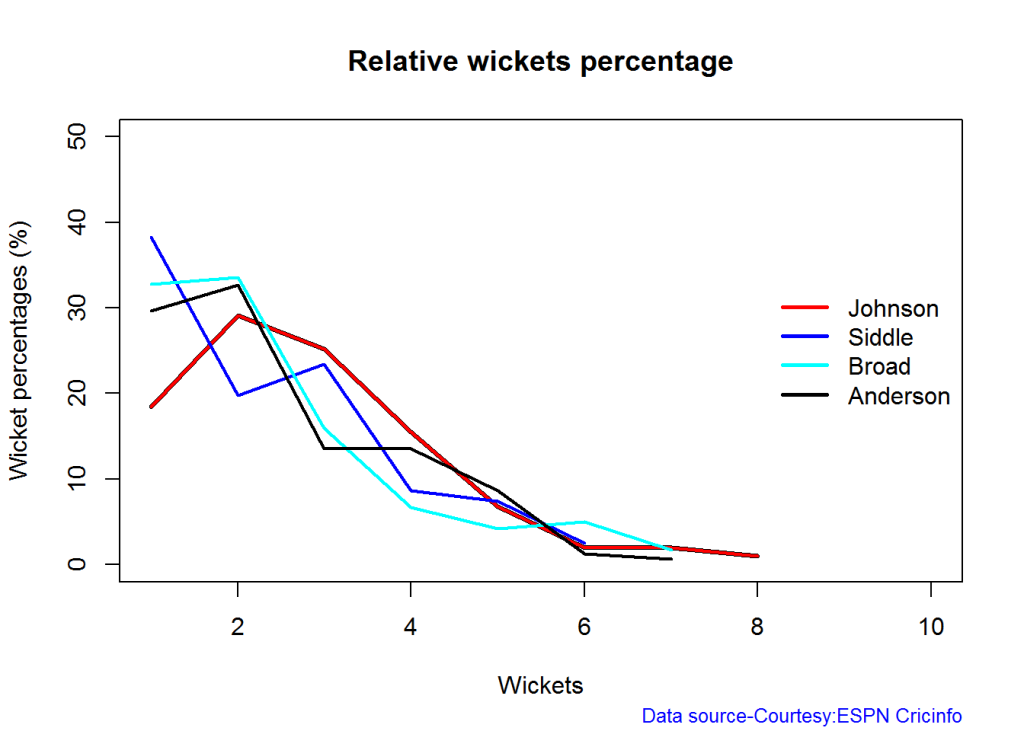

## 1Relative bowling performance

The plot below shows that Mitchell Johnson is the most effective bowler among the lot, with more wickets in the 3-6 wicket range. Broad and Anderson seem to perform well in 2 wickets in comparison to Siddle, but in 3 wickets Siddle is better than Broad and Anderson.

frames <- list("./johnson.csv","./siddle.csv","broad.csv","anderson.csv")

names <- list("Johnson","Siddle","Broad","Anderson")

relativeBowlingPerf(frames,names)

Relative Economy Rate against wickets taken

Anderson followed by Siddle has the best economy rates. Johnson is fairly expensive in the 4-8 wicket range.

frames <- list("./johnson.csv","./siddle.csv","broad.csv","anderson.csv")

names <- list("Johnson","Siddle","Broad","Anderson")

relativeBowlingER(frames,names)

Moving average of wickets over career

Johnson is on his second peak while Siddle is on the decline with respect to bowling. Broad and Anderson show improving performance over the years.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

bowlerMovingAverage("./johnson.csv","Johnson")

bowlerMovingAverage("./siddle.csv","Siddle")

bowlerMovingAverage("./broad.csv","Broad")

bowlerMovingAverage("./anderson.csv","Anderson")

dev.off()

## null device

## 1

Key findings

Here are some key conclusions

- Cook has the most innings and has been extremely consistent in his scores

- Warner has the best strike rate among the lot followed by Smith and Root

- The moving average shows a marked improvement over the years for Smith

- Johnson is the most effective bowler but is fairly expensive

- Anderson has the best economy rate followed by Siddle

- Johnson is at his second peak with respect to bowling while Broad and Anderson maintain a steady line and length in their career bowling performance

Also see my other posts in R

- Introducing cricketr! : An R package to analyze performances of cricketers

- Taking cricketr for a spin – Part 1

- A peek into literacy in India: Statistical Learning with R

- A crime map of India in R – Crimes against women

- Analyzing cricket’s batting legends – Through the mirage with R

- Masters of Spin: Unraveling the web with R

- Mirror, mirror . the best batsman of them all?

You may also like

- A crime map of India in R: Crimes against women

- What’s up Watson? Using IBM Watson’s QAAPI with Bluemix, NodeExpress – Part 1

- Bend it like Bluemix, MongoDB with autoscaling – Part 2

- Informed choices through Machine Learning : Analyzing Kohli, Tendulkar and Dravid

- Thinking Web Scale (TWS-3): Map-Reduce – Bring compute to data

- Deblurring with OpenCV:Weiner filter reloaded

Introducing cricketr! : An R package to analyze performances of cricketers

Yet all experience is an arch wherethro’

Gleams that untravell’d world whose margin fades

For ever and forever when I move.

How dull it is to pause, to make an end,

To rust unburnish’d, not to shine in use!

Ulysses by Alfred Tennyson

Introduction

This is an initial post in which I introduce a cricketing package ‘cricketr’ which I have created. This package was a natural culmination of my earlier posts on cricket and my finishing 10 modules of the Data Science Specialization from Johns Hopkins University at Coursera. The thought of creating this package struck me some time back, and I have finally been able to bring this to fruition.

So here it is. My R package ‘cricketr!!!’


This package uses the statistics info available in ESPN Cricinfo Statsguru. The current version of this package can handle all formats of the game including Test, ODI and Twenty20 cricket.

You should be able to install the package from CRAN and use many of the functions available in the package. Please be mindful of ESPN Cricinfo Terms of Use

(Note: This page is also hosted as a GitHub page at cricketr and also at RPubs as cricketr: A R package for analyzing performances of cricketers)

You can download this analysis as a PDF file from Introducing cricketr

Note: If you would like to do a similar analysis for a different set of batsmen and bowlers, you can clone/download my skeleton cricketr template from Github (which is the R Markdown file I have used for the analysis below). You will only need to make appropriate changes for the players you are interested in. Only a familiarity with R and R Markdown is needed.

You can clone the cricketr code from Github at cricketr

(Take a look at my short video tutorial on my R package cricketr on Youtube – R package cricketr – A short tutorial)

Do check out my interactive Shiny app implementation using the cricketr package – Sixer – R package cricketr’s new Shiny avatar

Please look at my recent post, which includes updates to this post, and 8 new functions added to the cricketr package “Re-introducing cricketr: An R package to analyze the performances of cricketers”


The cricketr package

The cricketr package has several functions that perform different analyses on both batsmen and bowlers. The package has functions that plot the percentage frequency of runs or wickets, the runs likelihood for a batsman, the relative run/strike rates of batsmen and the relative performance/economy rate for bowlers.

Other interesting functions include batting performance moving average, forecast and a function to check whether the batsman/bowler is in-form or out-of-form.

The data for a particular player can be obtained with the getPlayerData() function from the package. To do this you will need to go to ESPN CricInfo Player and type in the name of the player, e.g. Ricky Ponting, Sachin Tendulkar etc. This will bring up a page which has the profile number for the player, e.g. for Sachin Tendulkar this would be http://www.espncricinfo.com/india/content/player/35320.html. Hence, Sachin’s profile is 35320. This can be used to get the data for Tendulkar as shown below
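If you are collecting profile numbers for several players, pulling the number out of the URL can be scripted. The helper below is hypothetical (it is not part of cricketr) and assumes the URL ends in `/player/<number>.html`, as in the Tendulkar example.

```r
# Hypothetical helper (not part of cricketr): extract the numeric profile id
# from an ESPN Cricinfo player URL of the form .../player/<id>.html
profileFromUrl <- function(url) {
  id <- sub("^.*/player/([0-9]+)\\.html$", "\\1", url)
  # sub() returns its input unchanged when the pattern does not match
  if (identical(id, url)) stop("No profile number found in: ", url)
  as.integer(id)
}

profileFromUrl("http://www.espncricinfo.com/india/content/player/35320.html")
# returns 35320
```

The returned number is what getPlayerData() expects as its `profile` argument.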

The cricketr package is now available from CRAN!!! You should be able to install it directly with

if (!require("cricketr")){

install.packages("cricketr")

}

library(cricketr)

?getPlayerData

##

## getPlayerData(profile, opposition='', host='', dir='./data', file='player001.csv', type='batting', homeOrAway=[1, 2], result=[1, 2, 4], create=True)

## Get the player data from ESPN Cricinfo based on specific inputs and store in a file in a given directory

##

## Description

##

## Get the player data given the profile of the batsman. The allowed inputs are home,away or both and won,lost or draw of matches. The data is stored in a .csv file in a directory specified. This function also returns a data frame of the player

##

## Usage

##

## getPlayerData(profile,opposition="",host="",dir="./data",file="player001.csv",

## type="batting", homeOrAway=c(1,2),result=c(1,2,4))

## Arguments

##

## profile

## This is the profile number of the player to get data. This can be obtained from http://www.espncricinfo.com/ci/content/player/index.html. Type the name of the player and click search. This will display the details of the player. Make a note of the profile ID. For e.g For Sachin Tendulkar this turns out to be http://www.espncricinfo.com/india/content/player/35320.html. Hence the profile for Sachin is 35320

## opposition

## The numerical value of the opposition country e.g.Australia,India, England etc. The values are Australia:2,Bangladesh:25,England:1,India:6,New Zealand:5,Pakistan:7,South Africa:3,Sri Lanka:8, West Indies:4, Zimbabwe:9

## host

## The numerical value of the host country e.g.Australia,India, England etc. The values are Australia:2,Bangladesh:25,England:1,India:6,New Zealand:5,Pakistan:7,South Africa:3,Sri Lanka:8, West Indies:4, Zimbabwe:9

## dir

## Name of the directory to store the player data into. If not specified the data is stored in a default directory "./data". Default="./data"

## file

## Name of the file to store the data into for e.g. tendulkar.csv. This can be used for subsequent functions. Default="player001.csv"

## type

## type of data required. This can be "batting" or "bowling"

## homeOrAway

## This is a vector with either 1,2 or both. 1 is for home 2 is for away

## result

## This is a vector that can take values 1,2,4. 1 - won match 2- lost match 4- draw

## Details

##

## More details can be found in my short video tutorial in Youtube https://www.youtube.com/watch?v=q9uMPFVsXsI

##

## Value

##

## Returns the player's dataframe

##

## Note

##

## Maintainer: Tinniam V Ganesh <tvganesh.85@gmail.com>

##

## Author(s)

##

## Tinniam V Ganesh

##

## References

##

## http://www.espncricinfo.com/ci/content/stats/index.html

## https://gigadom.wordpress.com/

##

## See Also

##

## getPlayerDataSp

##

## Examples

##

## ## Not run:

## # Both home and away. Result = won,lost and drawn

## tendulkar = getPlayerData(35320,dir=".", file="tendulkar1.csv",

## type="batting", homeOrAway=c(1,2),result=c(1,2,4))

##

## # Only away. Get data only for won and lost innings

## tendulkar = getPlayerData(35320,dir=".", file="tendulkar2.csv",

## type="batting",homeOrAway=c(2),result=c(1,2))

##

## # Get bowling data and store in file for future

## kumble = getPlayerData(30176,dir=".",file="kumble1.csv",

## type="bowling",homeOrAway=c(1),result=c(1,2))

##

## #Get the Tendulkar's Performance against Australia in Australia

## tendulkar = getPlayerData(35320, opposition = 2,host=2,dir=".",

## file="tendulkarVsAusInAus.csv",type="batting")

The cricketr package includes some pre-packaged sample (.csv) files. You can use these samples to test functions as shown below

# Retrieve the file path of a data file installed with cricketr

pathToFile ,"Sachin Tendulkar")

Alternatively, the cricketr package can be installed from GitHub with

if (!require("cricketr")){

library(devtools)

install_github("tvganesh/cricketr")

}

library(cricketr)

The pre-packaged files can be accessed as shown above.

To get the data of any player use the function getPlayerData()

tendulkar <- getPlayerData(35320,dir="..",file="tendulkar.csv",type="batting",homeOrAway=c(1,2),

result=c(1,2,4))

Important Note: This needs to be done only once for a player. This function stores the player’s data in a CSV file (e.g. tendulkar.csv as above) which can then be reused for all other functions. Once we have the data for the players, many analyses can be done. This post will use the stored CSV file obtained with a prior getPlayerData() call for all subsequent analyses.

Sachin Tendulkar’s performance – Basic Analyses

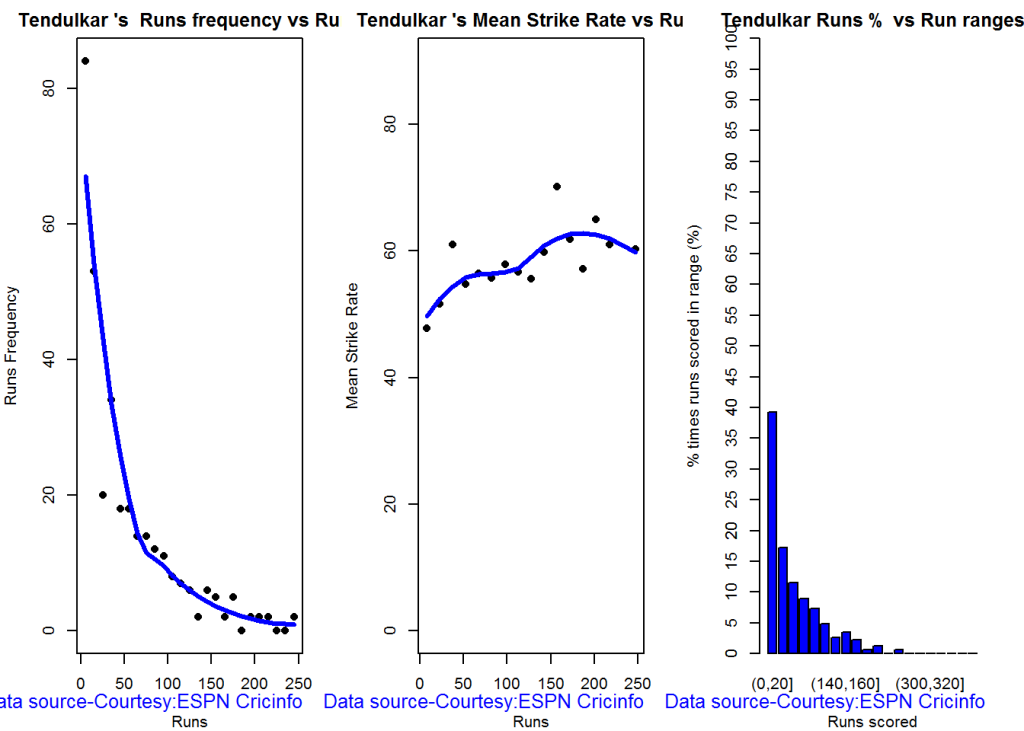

The 3 plots below provide the following for Tendulkar

- Frequency percentage of runs in each run range over the whole career

- Mean Strike Rate for runs scored in the given range

- A histogram of runs frequency percentages in runs ranges

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsmanRunsFreqPerf("./tendulkar.csv","Sachin Tendulkar")

batsmanMeanStrikeRate("./tendulkar.csv","Sachin Tendulkar")

batsmanRunsRanges("./tendulkar.csv","Sachin Tendulkar")

dev.off()

## null device

## 1

More analyses

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

batsman4s("./tendulkar.csv","Tendulkar")

batsman6s("./tendulkar.csv","Tendulkar")

batsmanDismissals("./tendulkar.csv","Tendulkar")

3D scatter plot and prediction plane

The plots below show the 3D scatter plot of Sachin’s Runs versus Balls Faced and Minutes at crease. A linear regression model is then fitted between Runs and Balls Faced + Minutes at crease

battingPerf3d("./tendulkar.csv","Sachin Tendulkar")

Average runs at different venues

The plot below gives the average runs scored by Tendulkar at different grounds. The plot also displays the number of innings at each ground as a label on the x-axis. It can be seen that Tendulkar did great in Colombo (SSC) and Melbourne for matches overseas, and in Mumbai, Mohali and Bangalore at home.

batsmanAvgRunsGround("./tendulkar.csv","Sachin Tendulkar")

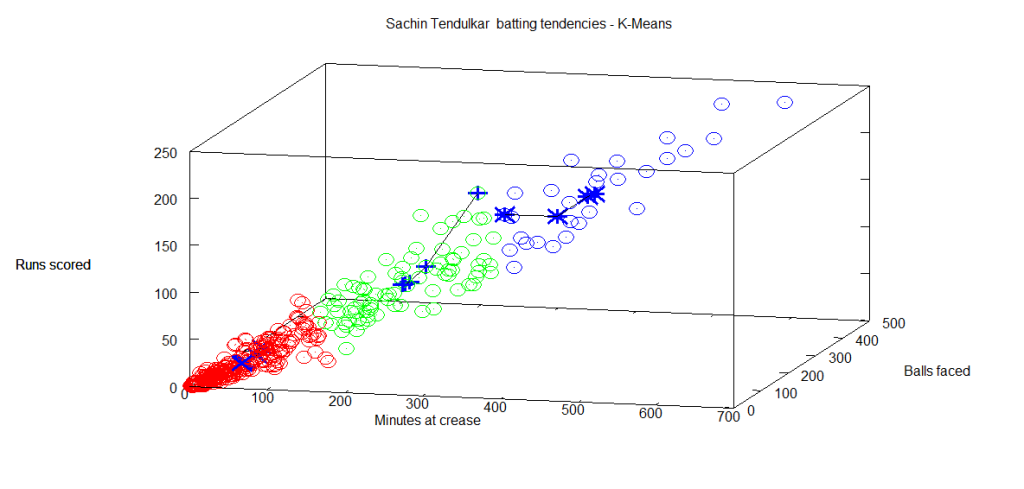

Highest Runs Likelihood

The plot below shows the Runs Likelihood for a batsman. For this, the performance of Sachin is plotted as a 3D scatter plot of Runs versus Balls Faced and Minutes at crease. Using K-Means, the centroids of 3 clusters are computed and plotted; these centroids represent Sachin Tendulkar’s highest tendencies.

batsmanRunsLikelihood("./tendulkar.csv","Sachin Tendulkar")## Summary of Sachin Tendulkar 's runs scoring likelihood

## **************************************************

##

## There is a 16.51 % likelihood that Sachin Tendulkar will make 139 Runs in 251 balls over 353 Minutes

## There is a 58.41 % likelihood that Sachin Tendulkar will make 16 Runs in 31 balls over 44 Minutes

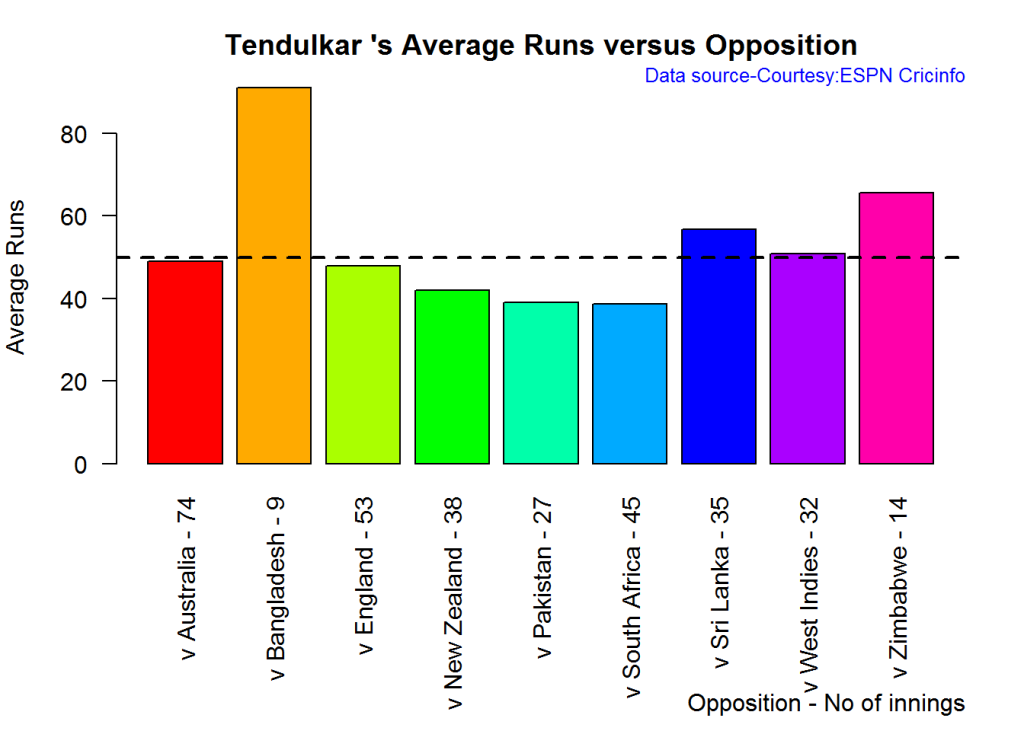

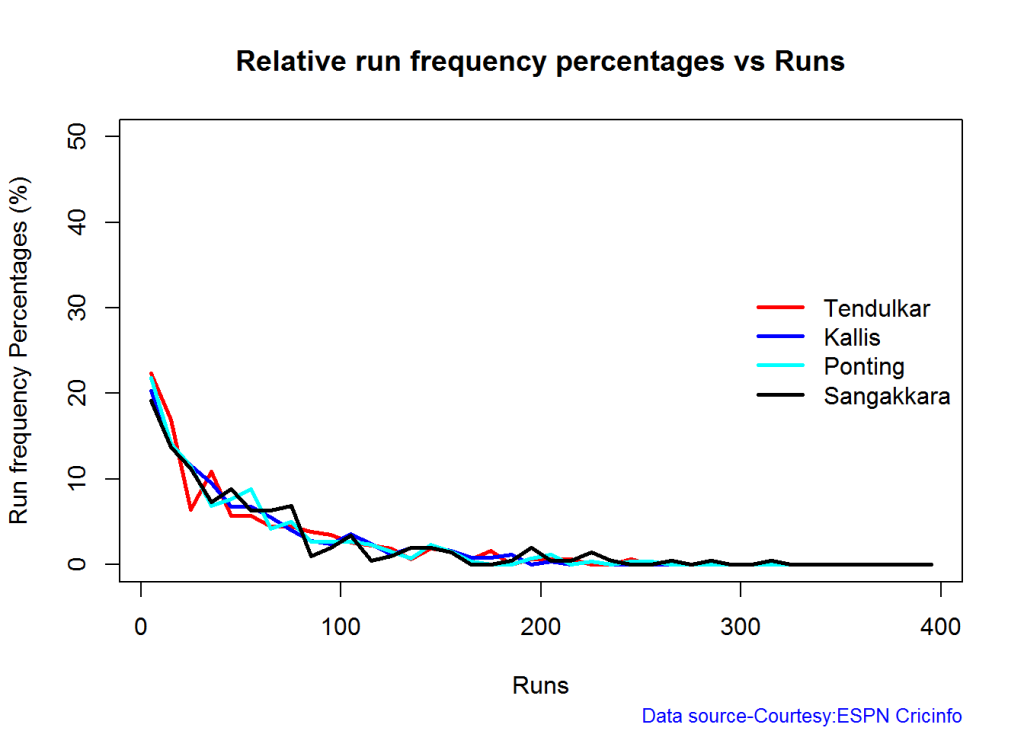

## There is a 25.08 % likelihood that Sachin Tendulkar will make 66 Runs in 122 balls over 167 MinutesA look at the Top 4 batsman – Tendulkar, Kallis, Ponting and Sangakkara

The batsmen with the most hundreds in test cricket are

- Sachin Tendulkar: Average: 53.78, 100’s – 51, 50’s – 68

- Jacques Kallis: Average: 55.47, 100’s – 45, 50’s – 58

- Ricky Ponting: Average: 51.85, 100’s – 41, 50’s – 62

- Kumar Sangakkara: Average: 58.04, 100’s – 38, 50’s – 52

in that order.

The following plots take a closer look at their performances. The box plots show the mean (red line) and median (blue line). The two ends of the boxplot display the 25th and 75th percentile.

Box Histogram Plot

This plot shows a combined boxplot of the Runs ranges and a histogram of the Runs Frequency. The calculated means differ from the stated means, possibly because of data cleaning. I am also not sure how ESPN Cricinfo arrives at its means, e.g. how not-outs are treated.

batsmanPerfBoxHist("./tendulkar.csv","Sachin Tendulkar")

batsmanPerfBoxHist("./kallis.csv","Jacques Kallis")

batsmanPerfBoxHist("./ponting.csv","Ricky Ponting")

batsmanPerfBoxHist("./sangakkara.csv","K Sangakkara")

Contribution to won and lost matches

The plot below shows the contribution of Tendulkar, Kallis, Ponting and Sangakkara in matches won and lost. The plots show the range of runs scored as a boxplot (25th & 75th percentile) and the mean scored. The total matches won and lost are also printed in the plot.

All the players have scored more in the matches they won than in the matches they lost. Ricky Ponting seems to have more won matches to his credit than the others. This could also be because he was a member of a strong Australian team.

For the next 2 functions below you will have to use the getPlayerDataSp() function. I have commented this as I already have these files

tendulkarsp par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanContributionWonLost("tendulkarsp.csv","Tendulkar")

batsmanContributionWonLost("kallissp.csv","Kallis")

batsmanContributionWonLost("pontingsp.csv","Ponting")

batsmanContributionWonLost("sangakkarasp.csv","Sangakarra")

dev.off()

## null device

## 1

Performance at home and overseas

From the plot below it can be seen that Tendulkar has more matches overseas than at home and his performance is consistent in all venues, at home or abroad. Ponting has fewer innings than Tendulkar and has an equally good performance at home and overseas. Kallis and Sangakkara’s performance abroad is lower than their performance at home.

This function also requires the use of getPlayerDataSp() as shown above

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanPerfHomeAway("tendulkarsp.csv","Tendulkar")

batsmanPerfHomeAway("kallissp.csv","Kallis")

batsmanPerfHomeAway("pontingsp.csv","Ponting")

batsmanPerfHomeAway("sangakkarasp.csv","Sangakarra")

dev.off()

## null device

## 1

Relative Mean Strike Rate plot

The plot below compares the Mean Strike Rate of the batsmen for each runs range of 10. The plot indicates the following:

- Range 0 – 50 Runs – Ponting leads followed by Tendulkar

- Range 50 – 100 Runs – Ponting followed by Sangakkara

- Range 100 – 150 Runs – Ponting and then Tendulkar
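The quantity being compared, a mean strike rate per 10-run bucket, can be computed in a few lines of base R. The sketch below uses synthetic innings. The data and the 0 to 150 range are assumptions for illustration, and this is not the code inside relativeBatsmanSR().

```r
# Synthetic innings for one batsman (hypothetical data)
set.seed(1)
Runs <- sample(0:149, 200, replace = TRUE)
BF <- round(Runs * runif(200, 1.2, 2.5)) + sample(1:10, 200, replace = TRUE)
SR <- 100 * Runs / BF   # strike rate = runs scored per 100 balls faced

# Bucket the scores into ranges of 10 runs: [0,10), [10,20), ...
bucket <- cut(Runs, breaks = seq(0, 150, by = 10), right = FALSE)

# Mean strike rate within each bucket (NA if a bucket has no innings)
meanSR <- tapply(SR, bucket, mean)
round(meanSR, 1)
```

Repeating this for each batsman and plotting the resulting vectors on common axes gives a relative comparison of the kind shown in the post.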

frames <- list("./tendulkar.csv","./kallis.csv","ponting.csv","sangakkara.csv")

names <- list("Tendulkar","Kallis","Ponting","Sangakkara")

relativeBatsmanSR(frames,names)

Relative Runs Frequency plot

The plot below gives the relative Runs Frequency Percentages for each 10 run bucket. It shows that Sangakkara leads, followed by Ponting.

frames <- list("./tendulkar.csv","./kallis.csv","ponting.csv","sangakkara.csv")

names <- list("Tendulkar","Kallis","Ponting","Sangakkara")

relativeRunsFreqPerf(frames,names)

Moving Average of runs in career

Take a look at the Moving Average across the career of the Top 4. Clearly, Kallis and Sangakkara have a few more years of great batting ahead; they seem to average around 50. Tendulkar and Ponting definitely show a slump in the later years.

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanMovingAverage("./tendulkar.csv","Sachin Tendulkar")

batsmanMovingAverage("./kallis.csv","Jacques Kallis")

batsmanMovingAverage("./ponting.csv","Ricky Ponting")

batsmanMovingAverage("./sangakkara.csv","K Sangakkara")

dev.off()

## null device

## 1

Future Runs forecast

Here are plots that forecast how the batsmen will perform in future. In this case 90% of the career runs trend is used as the training set, and the remaining 10% is the test set.

A Holt-Winters forecasting model is used to forecast future performance based on the 90% training set. The forecasted runs trend is plotted. The test set is also plotted to see how closely the forecast matches the actual runs.
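The 90/10 split and forecast can be sketched with `HoltWinters()` from base R. The series below is synthetic, and the non-seasonal setting (`gamma = FALSE`) is a simplifying assumption of this sketch; the package's own call may be configured differently.

```r
# Synthetic career: runs in 120 successive innings (hypothetical data)
set.seed(3)
runs <- pmax(0, round(45 + 10 * sin(seq_len(120) / 10) + rnorm(120, 0, 15)))

# First 90% of the career is the training set, the last 10% the test set
nTrain <- floor(0.9 * length(runs))
train <- ts(runs[1:nTrain])
test <- runs[(nTrain + 1):length(runs)]

# Non-seasonal Holt-Winters (level + trend); gamma = FALSE switches seasonality off
fit <- HoltWinters(train, gamma = FALSE)

# Forecast as many innings ahead as there are in the test set
fc <- predict(fit, n.ahead = length(test))

# Forecast next to the held-out actual values
data.frame(actual = test, forecast = round(as.numeric(fc)))
```

Individual innings are noisy, so a forecast like this is best judged on whether it tracks the general level of the held-out scores rather than each score.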

Take a look at the runs forecasted for the batsman below.

- Tendulkar’s forecasted performance seems to tally with his actual performance with an average of 50

- For Kallis, the forecasted runs are higher than the actual runs he scored

- Ponting seems to have a good run in the future

- Sangakkara has a decent run in the future averaging 50 runs

par(mfrow=c(2,2))

par(mar=c(4,4,2,2))

batsmanPerfForecast("./tendulkar.csv","Sachin Tendulkar")

batsmanPerfForecast("./kallis.csv","Jacques Kallis")

batsmanPerfForecast("./ponting.csv","Ricky Ponting")

batsmanPerfForecast("./sangakkara.csv","K Sangakkara")

dev.off()

## null device

## 1

Check Batsman In-Form or Out-of-Form

The computation below uses Null Hypothesis testing and the p-value to determine if the batsman is in-form or out-of-form. For this, 90% of the career runs is taken as the population and its mean is computed. The last 10% is taken as the sample set, and the sample mean and the sample standard deviation are calculated.

The Null Hypothesis (H0) assumes that the batsman continues to stay in-form, i.e. the sample mean is within the 95% confidence interval of the population mean. The Alternative (Ha) assumes that the batsman is out-of-form, i.e. the sample mean is below the 95% confidence interval of the population mean.

A significance level of 0.05 is chosen and the p-value is computed:

- If p-value >= 0.05 – Batsman In-Form

- If p-value < 0.05 – Batsman Out-of-Form

Note: Ideally the p-value should be computed for a population that follows the Normal Distribution. But the runs population is usually right-skewed (most scores are low, with a long tail of big innings). So some correction may be needed. I will revisit this later
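The test can be reproduced approximately from the summary statistics alone. The sketch below assumes a one-sided, one-sample t-test with n - 1 degrees of freedom; that is my reading of the description, and the package's exact computation may differ. `formPValue` is a hypothetical helper, and the numbers fed to it are the population mean, sample mean, sample SD and sample size printed in the checkBatsmanInForm() output in this post.

```r
# One-sided, one-sample t-test from summary statistics.
# Assumption: the sample mean is compared with the population mean using a
# t-statistic with n - 1 degrees of freedom.
formPValue <- function(popMean, sampleMean, sampleSD, n) {
  tStat <- (sampleMean - popMean) / (sampleSD / sqrt(n))
  pt(tStat, df = n - 1)   # lower tail: is the sample mean significantly lower?
}

# Summary statistics as printed for Tendulkar and Kallis
pTendulkar <- formPValue(popMean = 50.48, sampleMean = 32.42, sampleSD = 29.8, n = 33)
pKallis <- formPValue(popMean = 47.5, sampleMean = 47.11, sampleSD = 59.19, n = 27)

# Apply the decision rule at the 0.05 significance level
alpha <- 0.05
ifelse(c(Tendulkar = pTendulkar, Kallis = pKallis) < alpha, "Out-of-Form", "In-Form")
```

With these inputs the rule gives Out-of-Form for Tendulkar and In-Form for Kallis, the same verdicts as the package output.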

This is done for the Top 4 batsman

checkBatsmanInForm("./tendulkar.csv","Sachin Tendulkar")

## *******************************************************************************************

##

## Population size: 294 Mean of population: 50.48

## Sample size: 33 Mean of sample: 32.42 SD of sample: 29.8

##

## Null hypothesis H0 : Sachin Tendulkar 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Sachin Tendulkar 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Sachin Tendulkar 's Form Status: Out-of-Form because the p value: 0.000713 is less than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./kallis.csv","Jacques Kallis")

## *******************************************************************************************

##

## Population size: 240 Mean of population: 47.5

## Sample size: 27 Mean of sample: 47.11 SD of sample: 59.19

##

## Null hypothesis H0 : Jacques Kallis 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Jacques Kallis 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Jacques Kallis 's Form Status: In-Form because the p value: 0.48647 is greater than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./ponting.csv","Ricky Ponting")

## *******************************************************************************************

##

## Population size: 251 Mean of population: 47.5

## Sample size: 28 Mean of sample: 36.25 SD of sample: 48.11

##

## Null hypothesis H0 : Ricky Ponting 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : Ricky Ponting 's sample average is below the 95% confidence

## interval of population average

##

## [1] "Ricky Ponting 's Form Status: In-Form because the p value: 0.113115 is greater than alpha= 0.05"

## *******************************************************************************************

checkBatsmanInForm("./sangakkara.csv","K Sangakkara")

## *******************************************************************************************

##

## Population size: 193 Mean of population: 51.92

## Sample size: 22 Mean of sample: 71.73 SD of sample: 82.87

##

## Null hypothesis H0 : K Sangakkara 's sample average is within 95% confidence interval

## of population average

## Alternative hypothesis Ha : K Sangakkara 's sample average is below the 95% confidence

## interval of population average

##

## [1] "K Sangakkara 's Form Status: In-Form because the p value: 0.862862 is greater than alpha= 0.05"

## *******************************************************************************************

3D plot of Runs vs Balls Faced and Minutes at Crease

The plot is a scatter plot of Runs vs Balls Faced and Minutes at Crease, with a prediction plane fitted through the points.

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./tendulkar.csv","Tendulkar")

battingPerf3d("./kallis.csv","Kallis")

par(mfrow=c(1,2))

par(mar=c(4,4,2,2))

battingPerf3d("./ponting.csv","Ponting")

battingPerf3d("./sangakkara.csv","Sangakkara")

dev.off()

## null device

## 1

Predicting Runs given Balls Faced and Minutes at Crease

A multivariate regression plane is fitted between Runs and Balls Faced + Minutes at Crease. A sample sequence of Balls Faced (BF) and Minutes at Crease (Mins) is set up as shown below. The fitted model is used to predict the runs for these values.
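For readers who want to see what such a fit involves, here is a minimal sketch in base R using lm() and predict(). It is not the internals of batsmanRunsPredict(), which this post does not show. The innings are simulated, so the numbers carry no cricketing meaning, and the names innings, fit and grid are assumptions made for the example.

```r
# Simulated innings, NOT real data. The BF and Mins column names follow
# the naming used in this post; everything else is made up.
set.seed(42)
n <- 200
BF <- sample(10:300, n, replace = TRUE)            # balls faced
Mins <- pmax(1, 1.5 * BF + rnorm(n, sd = 10))      # minutes at crease
Runs <- 0.5 * BF + 0.05 * Mins + rnorm(n, sd = 5)  # runs scored
innings <- data.frame(Runs, BF, Mins)

# Fit the regression plane Runs ~ BF + Mins
fit <- lm(Runs ~ BF + Mins, data = innings)

# Predict runs on a grid of Balls Faced and Minutes at Crease
grid <- data.frame(BF = seq(10, 400, length.out = 15),
                   Mins = seq(30, 600, length.out = 15))
grid$Runs <- predict(fit, newdata = grid)
round(grid)
```

The columns of the new data frame must carry the same names as the predictors in the model formula, otherwise predict() cannot match them.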

BF <- seq(10, 400, length.out=15)

Mins <- seq(30, 600, length.out=15)

newDF <- data.frame(BF,Mins)

tendulkar <- batsmanRunsPredict("./tendulkar.csv","Tendulkar",newdataframe=newDF)

kallis <- batsmanRunsPredict("./kallis.csv","Kallis",newdataframe=newDF)

ponting <- batsmanRunsPredict("./ponting.csv","Ponting",newdataframe=newDF)

sangakkara <- batsmanRunsPredict("./sangakkara.csv","Sangakkara",newdataframe=newDF)

The fitted model is then used to predict the runs that the batsmen will score for a given Balls Faced and Minutes at Crease. It can be seen that Ponting will score the highest for a given Balls Faced and Minutes at Crease.

Ponting is followed by Tendulkar, who has Sangakkara close on his heels, and finally we have Kallis. This is intuitive as we have already seen that Ponting has the highest strike rate.

batsmen <-cbind(round(tendulkar$Runs),round(kallis$Runs),round(ponting$Runs),round(sangakkara$Runs))

colnames(batsmen) <- c("Tendulkar","Kallis","Ponting","Sangakkara")

newDF <- data.frame(round(newDF$BF),round(newDF$Mins))

colnames(newDF) <- c("BallsFaced","MinsAtCrease")

predictedRuns <- cbind(newDF,batsmen)

predictedRuns

##    BallsFaced MinsAtCrease Tendulkar Kallis Ponting Sangakkara

## 1          10           30         7      6       9          2

## 2          38           71        23     20      25         18

## 3          66          111        39     34      42         34

## 4          94          152        54     48      59         50

## 5         121          193        70     62      76         66

## 6         149          234        86     76      93         82

## 7         177          274       102     90     110         98

## 8         205          315       118    104     127        114

## 9         233          356       134    118     144        130

## 10        261          396       150    132     161        146

## 11        289          437       165    146     178        162

## 12        316          478       181    159     194        178

## 13        344          519       197    173     211        194

## 14        372          559       213    187     228        210

## 15        400          600       229    201     245        226

Check out my book ‘Deep Learning from first principles Second Edition- In vectorized Python, R and Octave’. My book is available on Amazon as paperback ($18.99) and in kindle version($9.99/Rs449).

You may also like my companion book “Practical Machine Learning with R and Python:Second Edition- Machine Learning in stereo” available in Amazon in paperback($12.99) and Kindle($9.99/Rs449) versions.

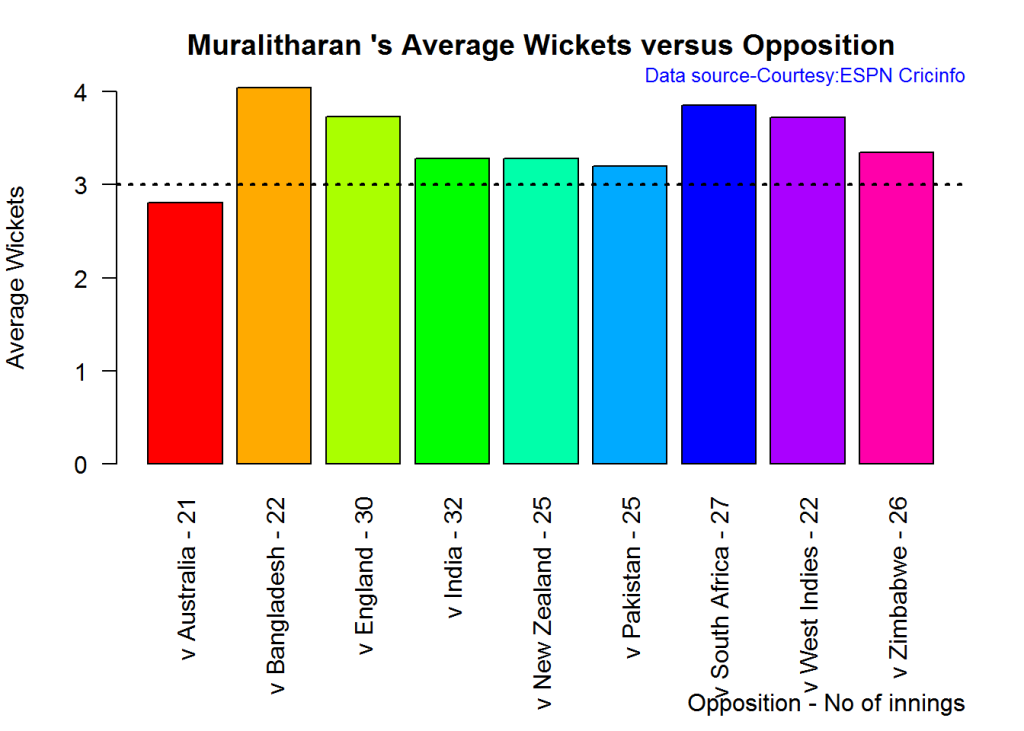

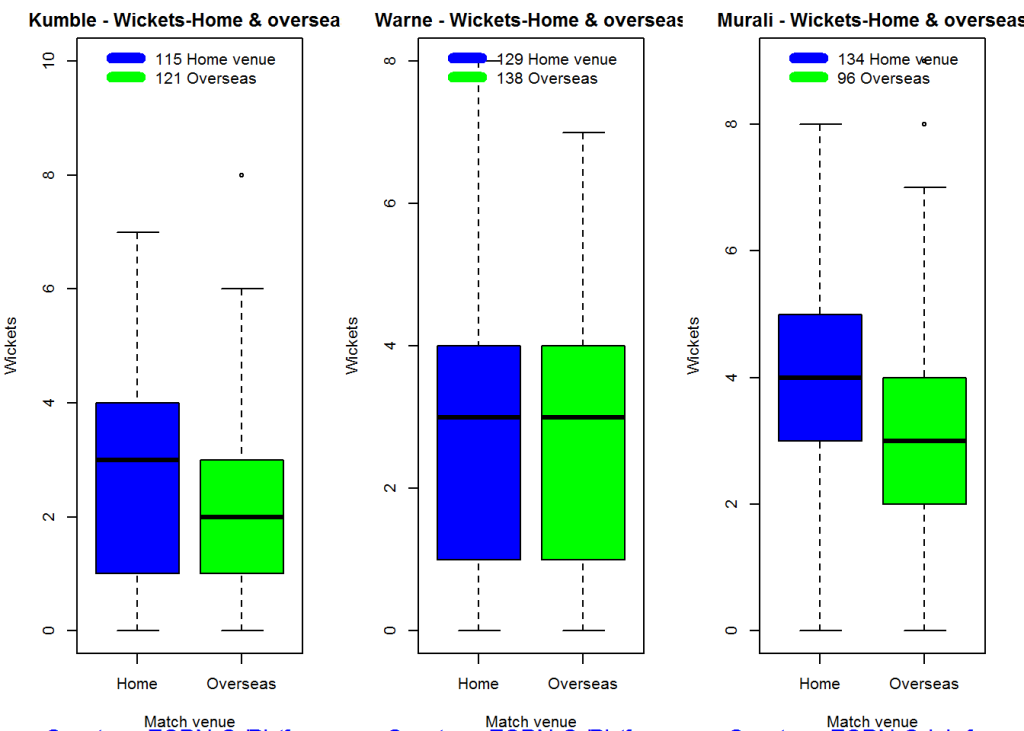

Analysis of Top 3 wicket takers

The top 3 wicket takers in Test history are

1. M Muralitharan: Wickets: 800, Average = 22.72, Economy Rate – 2.47

2. Shane Warne: Wickets: 708, Average = 25.41, Economy Rate – 2.65

3. Anil Kumble: Wickets: 619, Average = 29.65, Economy Rate – 2.69

How do Anil Kumble, Shane Warne and M Muralitharan compare with one another with respect to wickets taken and Economy Rate? The next set of plots computes and plots precisely these analyses.

Wicket Frequency Plot

The plot below computes the percentage frequency of the number of wickets taken (e.g. 1 wicket x%, 2 wickets y%, etc.) and plots it as a continuous line.
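The percentage frequency itself is a one-liner in base R. The sketch below uses a short made-up vector of wickets per innings (an assumption for illustration, not data from any of the three bowlers) and is not the code inside bowlerWktsFreqPercent().

```r
# Hypothetical wickets-per-innings values; real values would come from
# the bowler's CSV file.
wkts <- c(1, 2, 2, 3, 0, 4, 2, 1, 3, 5)

# Share of innings (in percent) for each wicket count
freqPercent <- prop.table(table(wkts)) * 100
freqPercent
```

Passing as.numeric(names(freqPercent)) and as.numeric(freqPercent) to plot() with type = "l" would then draw the percentages as a continuous line.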

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerWktsFreqPercent("./kumble.csv","Anil Kumble")

bowlerWktsFreqPercent("./warne.csv","Shane Warne")

bowlerWktsFreqPercent("./murali.csv","M Muralitharan")

dev.off()

## null device

## 1

Wickets Runs plot

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerWktsRunsPlot("./kumble.csv","Kumble")

bowlerWktsRunsPlot("./warne.csv","Warne")

bowlerWktsRunsPlot("./murali.csv","Muralitharan")

dev.off()

## null device

## 1

Average wickets at different venues

The plot gives the average wickets taken by Muralitharan at different venues. Muralitharan has taken an average of 8 and 6 wickets at the Oval and Wellington respectively, in 2 different innings. His best performances are at Kandy and Colombo (SSC).
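A per-venue average of this kind is a grouped mean. The sketch below uses a tiny made-up data frame (the Ground and Wkts column names and all values are assumptions, not Muralitharan's record) and reports the number of innings next to each mean, since a high average from one or two innings says little on its own.

```r
# Made-up innings records; the column names Ground and Wkts are assumed
# for illustration and may differ from the CSV used in this post.
bowling <- data.frame(
  Ground = c("Kandy", "Kandy", "Colombo (SSC)", "Colombo (SSC)", "The Oval"),
  Wkts   = c(4, 6, 5, 3, 8)
)

# Mean wickets per venue and the number of innings behind each mean
avgWkts <- tapply(bowling$Wkts, bowling$Ground, mean)
innings <- table(bowling$Ground)

venueSummary <- data.frame(
  Ground  = names(avgWkts),
  AvgWkts = as.numeric(avgWkts),
  Innings = as.integer(innings[names(avgWkts)])
)
venueSummary
```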

bowlerAvgWktsGround("./murali.csv","Muralitharan")

Relative Wickets Frequency Percentage

The Relative Wickets Percentage plot shows that M Muralitharan has a large percentage of wickets in the 3-8 wicket range.

frames <- list("./kumble.csv","./murali.csv","warne.csv")

names <- list("Anil KUmble","M Muralitharan","Shane Warne")

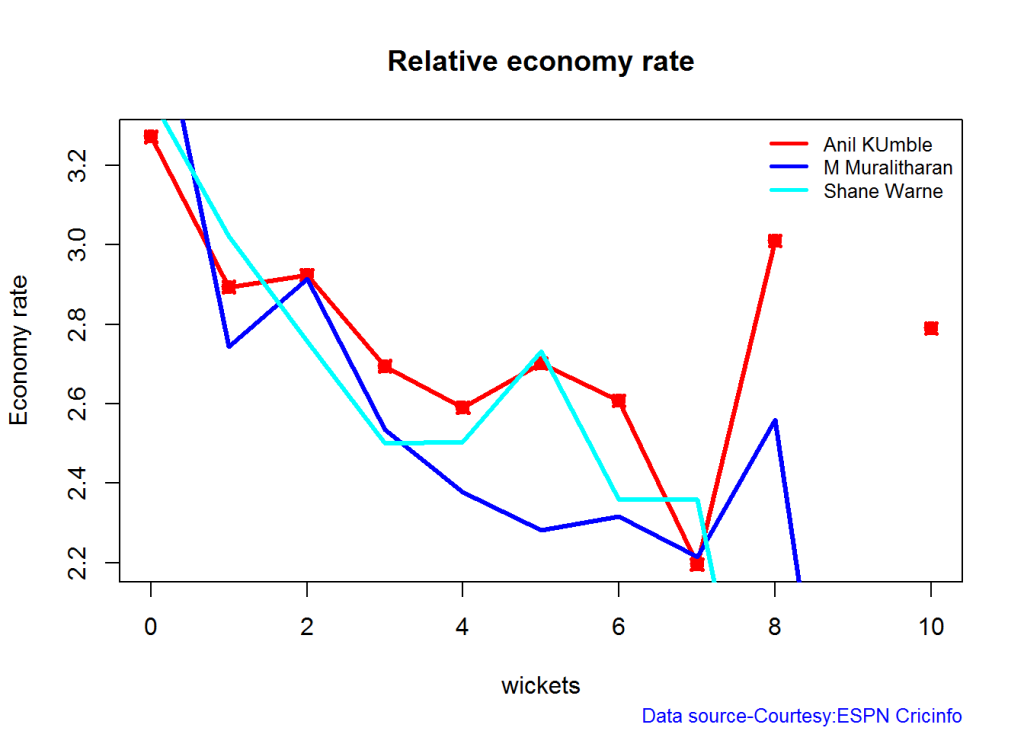

relativeBowlingPerf(frames,names)Relative Economy Rate against wickets taken

Clearly, from the plot below it can be seen that Muralitharan has the best Economy Rate among the three.

frames <- list("./kumble.csv","./murali.csv","warne.csv")

names <- list("Anil KUmble","M Muralitharan","Shane Warne")

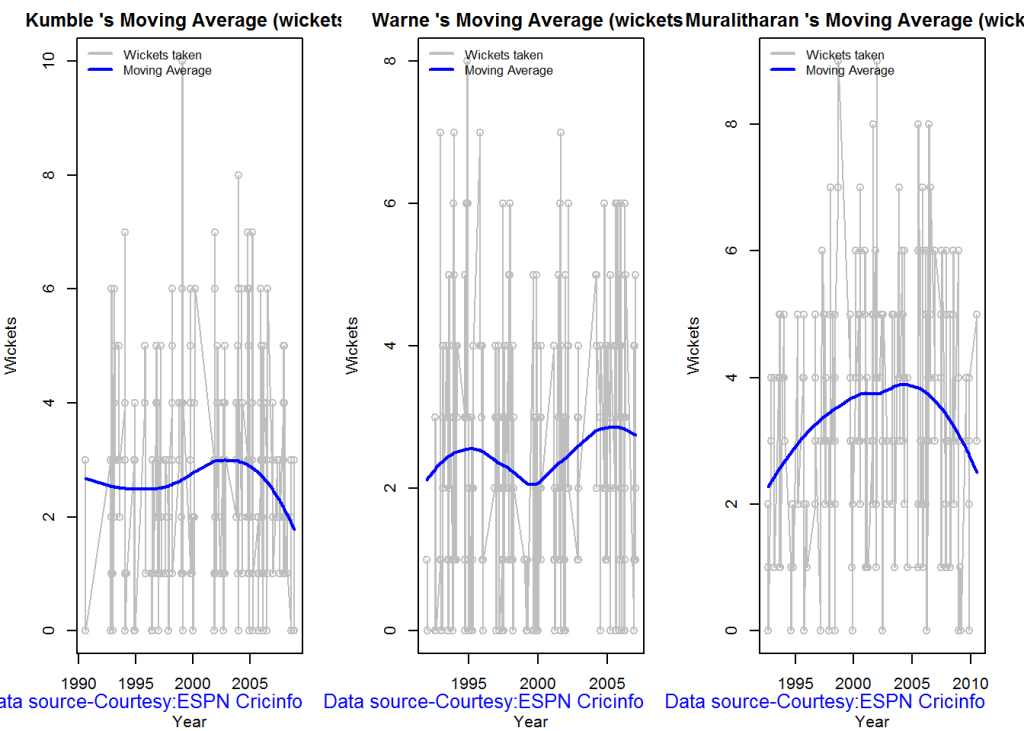

relativeBowlingER(frames,names)Wickets taken moving average

From the plot below it can be seen that

1. Shane Warne’s performance at the time of his retirement was still at a peak of 3 wickets

2. M Muralitharan seems to have become ineffective over time, with his peak years being 2004-2006

3. Anil Kumble also seems to slump and become less effective.

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerMovingAverage("./kumble.csv","Anil Kumble")

bowlerMovingAverage("./warne.csv","Shane Warne")

bowlerMovingAverage("./murali.csv","M Muralitharan")

dev.off()

## null device

## 1

Future Wickets forecast

Here are plots that forecast how the bowler will perform in future. In this case 90% of the career wickets trend is used as the training set and the remaining 10% is the test set.

A Holt-Winters forecasting model is used to forecast future performance based on the 90% training set. The forecasted wickets trend is plotted. The test set is also plotted to see how closely the forecast and the actual values match.
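The train/forecast/compare idea can be sketched with the HoltWinters() function from base R's stats package. This is only an illustration under stated assumptions: the wickets series is simulated, the simplest level-only variant is used (trend and seasonal components switched off with beta = FALSE and gamma = FALSE), and the settings inside bowlerPerfForecast() may well differ.

```r
# Simulated wickets per innings, NOT real data
set.seed(1)
wickets <- rpois(120, lambda = 3)

# 90% training set, 10% test set
nTrain <- floor(0.9 * length(wickets))
train <- ts(wickets[seq_len(nTrain)])
test <- wickets[(nTrain + 1):length(wickets)]

# Level-only Holt-Winters (exponential smoothing); an assumption for this sketch
fit <- HoltWinters(train, gamma = FALSE, beta = FALSE)

# Forecast as many steps ahead as there are test innings
fc <- predict(fit, n.ahead = length(test))

# Root mean squared error between forecast and actual test values
rmse <- sqrt(mean((as.numeric(fc) - test)^2))
rmse
```

Plotting train, fc and test on the same axes gives the kind of forecast-versus-actual comparison described above.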

Take a look at the wickets forecasted for the bowlers below.

- Shane Warne and Muralitharan have a fairly consistent forecast

- Kumble’s forecast shows a small dip

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerPerfForecast("./kumble.csv","Anil Kumble")

bowlerPerfForecast("./warne.csv","Shane Warne")

bowlerPerfForecast("./murali.csv","M Muralitharan")

dev.off()

## null device

## 1

Contribution to matches won and lost

The plot below is extremely interesting.

1. Kumble’s wickets range from 2 to 4 in matches won, with a mean of 3

2. Warne’s wickets in won matches range from 1 to 4, with more matches won. Clearly there are other bowlers contributing to the wins, possibly the pacers

3. Muralitharan’s wickets in winning matches range from 3 to 5, higher than the other 2, so he clearly had a hand (pun unintended) in Sri Lanka’s wins

As discussed above the next 2 charts require the use of getPlayerDataSp()

kumblesp

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerContributionWonLost("kumblesp.csv","Kumble")

bowlerContributionWonLost("warnesp.csv","Warne")

bowlerContributionWonLost("muralisp.csv","Murali")

dev.off()

## null device

## 1

Performance home and overseas

From the plot below it can be seen that Kumble and Warne have played more matches overseas than Muralitharan. Both Kumble and Warne show an average of 2 wickets overseas. Murali, on the other hand, has an average of 2.5 wickets overseas, but from slightly fewer matches than Kumble and Warne.

par(mfrow=c(1,3))

par(mar=c(4,4,2,2))

bowlerPerfHomeAway("kumblesp.csv","Kumble")

bowlerPerfHomeAway("warnesp.csv","Warne")

bowlerPerfHomeAway("muralisp.csv","Murali")

dev.off()

## null device

## 1

Check for bowler in-form/out-of-form

The computation below uses null hypothesis testing and a p-value to determine whether the bowler is in-form or out-of-form. The first 90% of the career wickets are taken as the population and their mean is computed. The last 10% are taken as the sample set, and the sample mean and sample standard deviation are calculated.

The Null Hypothesis (H0) assumes that the bowler continues to stay in-form, i.e. the sample mean is within the 95% confidence interval of the population mean. The Alternative (Ha) assumes that the bowler is out of form, i.e. the sample mean is beyond the 95% confidence interval of the population mean.

A significance level of 0.05 is chosen and the p-value is computed:

- If p-value >= 0.05 – Bowler In-Form

- If p-value < 0.05 – Bowler Out-of-Form

Note: Ideally the p-value should be computed for a population that follows the Normal distribution. But the wickets population is usually skewed, so some correction may be needed. I will revisit this later.

Note: The check for the form status of the bowlers indicates that

1. Both Kumble and Muralitharan were out of form. This also shows in the moving average plot

2. Warne was still in great form and could have continued for a few more years. Too bad we didn’t see the magic later