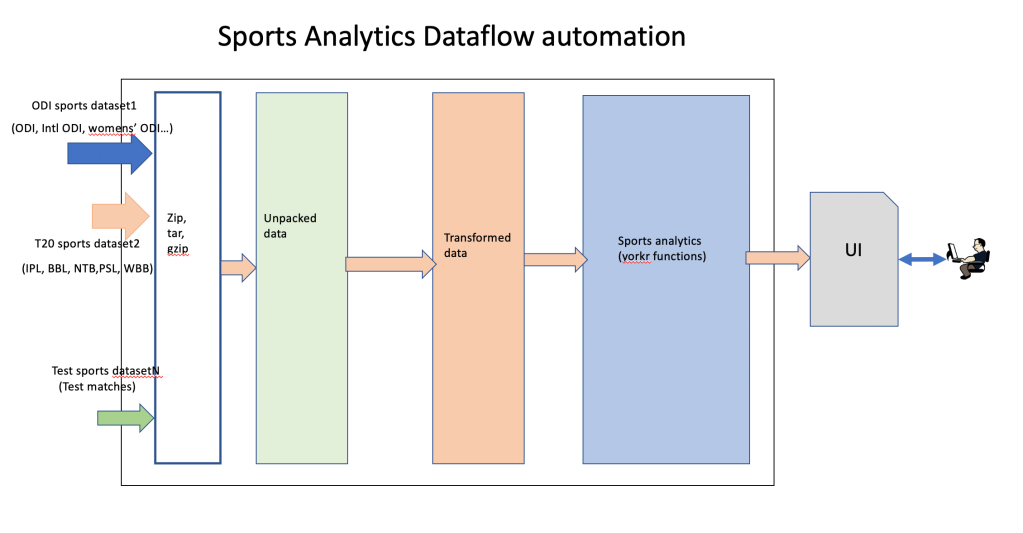

In this post, I construct an end-to-end Apache NiFi pipeline with my R package yorkr. This post mirrors my earlier post Big Data-5: kNiFing through cricket data with yorkpy, which was based on my Python package yorkpy. The Apache NiFi data pipeline flows all the way from the source, where the data is obtained, to the target analytics output. Apache NiFi was created to automate the flow of data between systems, and NiFi dataflows enable the automated and managed flow of information between them. This post automates the flow of data from Cricsheet: the zip file is downloaded, unpacked, processed and transformed, and finally the T20 players are ranked.

This post uses the functions of my R package yorkr to rank IPL players. This is an example flow of a typical Big Data pipeline, where the data is ingested from many diverse source systems, transformed, and then finally used to generate insights. While I execute this NiFi example with yorkr, a typical Big Data pipeline, where the data is huge (of the order of 100s of GB), would use the Hadoop ecosystem with Hive, HDFS, Spark and so on. Since the data taken from Cricsheet is only a few megabytes, this approach suffices. However, if we hypothetically assume that several batches of cricket data, from different matches happening all over the world, are being uploaded to the source, and that the historical data exceeds several GBs, then we could use a similar Apache NiFi pattern to process the data and generate insights. If the data were large and distributed across a Hadoop cluster, we would need to use SparkR or sparklyr to process it.

This is shown below pictorially

While this post displays the ranks of IPL batsmen, it is possible to create a cool dashboard using UI/UX technologies like AngularJS/ReactJS. Take a look at my post Big Data 6: The T20 Dance of Apache NiFi and yorkpy, where I create a simple dashboard of multiple analytics.

My R package yorkr can handle both men’s and women’s ODI, and all formats of T20 in Cricsheet, namely Intl. T20 (men’s, women’s), IPL, BBL, Natwest T20, PSL, Women’s BBL etc. To know more about yorkr, see Revitalizing R package yorkr.

The code can be forked from GitHub at yorkrWithApacheNiFi.

You can take a look at the live demo of the NiFi pipeline at yorkr waltzes with Apache NiFi

Basic Flow

1. Overall flow

The overall NiFi flow contains 2 Process Groups: a) DownloadAndUnpack and b) Convert and Rank IPL batsmen. While the Process Groups appear to be disconnected, they are not. The first process group downloads the T20 zip file, unpacks the .zip file and saves the YAML files in a specific folder. The second process group monitors this folder and starts processing as soon as the YAML files are available. It converts the YAML files into dataframes and stores them as CSV files. The next processor then does the actual ranking of the batsmen before writing the output into IPLrank.txt.

1.1 DownloadAndUnpack Process Group

This process group is shown below

1.1.1 GetT20Data

The GetT20Data Processor downloads the zip file given the URL

The ${T20data} variable points to the specific T20 format that needs to be downloaded. I have set this to https://cricsheet.org/downloads/ipl.zip. This could be set to any other data set. In fact, we could have parallel data flows for different T20/sports data sets and generate insights.
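For readers who want to try the download step outside NiFi, here is a minimal base-R sketch of what the DownloadAndUnpack group does. The helper names `cricsheetUrl()` and `downloadAndUnpack()` are hypothetical, and the sketch assumes that other Cricsheet data sets follow the same `downloads/<name>.zip` naming as the IPL URL above.

```r
# Hypothetical helper: build a Cricsheet download URL from a data set name.
# ASSUMPTION: other data sets follow the same naming pattern as ipl.zip.
cricsheetUrl <- function(name) {
  paste0("https://cricsheet.org/downloads/", name, ".zip")
}

# Hypothetical helper: download a zip file and unpack it into exdir.
# Returns the paths of the extracted YAML files only.
downloadAndUnpack <- function(url, exdir) {
  dir.create(exdir, showWarnings = FALSE, recursive = TRUE)
  zipfile <- tempfile(fileext = ".zip")
  # mode = "wb" keeps the binary zip intact on Windows
  download.file(url, destfile = zipfile, mode = "wb")
  # unzip() invisibly returns the paths of the extracted files
  extracted <- unzip(zipfile, exdir = exdir)
  unlink(zipfile)
  extracted[grepl("\\.yaml$", extracted)]
}
```

A call such as `downloadAndUnpack(cricsheetUrl("ipl"), "yamlFiles")` would fetch and unpack the IPL data; parallel flows for other data sets would simply use different names.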

1.1.2 SaveUnpackedData

This processor stores the YAML files in a predetermined folder, so that the data can be picked up by the second Process Group for processing.
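The handoff through a predetermined folder can be sketched in R as copying only the YAML files into that folder. This is an illustrative sketch, not the NiFi processor itself; `saveYamlFiles()` is a hypothetical name.

```r
# Hypothetical helper mirroring SaveUnpackedData: copy only the YAML files
# into the fixed handoff folder that the second process group watches.
saveYamlFiles <- function(files, targetDir) {
  dir.create(targetDir, showWarnings = FALSE, recursive = TRUE)
  yamlFiles <- files[grepl("\\.yaml$", files)]
  dest <- file.path(targetDir, basename(yamlFiles))
  # overwrite = TRUE so re-running the flow replaces stale copies
  ok <- file.copy(yamlFiles, dest, overwrite = TRUE)
  dest[ok]
}
```

Because the two stages share only a folder, either side can be restarted or replaced without touching the other.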

1.2 ProcessAndRankT20Players Process Group

This is the second process group, which converts the YAML files to R dataframes before storing them as CSV files. The RankIPLPlayers processor then reads all the CSV files, stacks them and proceeds to rank the IPL players. The Process Group is shown below.

1.2.1 ListFile and FetchFile Processors

The two processors on the left, ListFile and FetchFile, get all the YAML files from the folder and pass them to the next processor.
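The idea behind ListFile, listing only files that have not been seen before, can be sketched in R by remembering the latest modification time from the previous listing. This is a simplified, hypothetical sketch (`listNewYaml()` is not a NiFi or yorkr function) and does not reproduce NiFi's own state handling.

```r
# Hypothetical sketch of ListFile-style incremental listing: return only the
# YAML files modified after the timestamp saved by the previous listing.
listNewYaml <- function(dir, since = as.POSIXct("1970-01-01", tz = "UTC")) {
  files <- list.files(dir, pattern = "\\.yaml$", full.names = TRUE)
  mtimes <- file.mtime(files)
  newFiles <- files[mtimes > since]
  # Return the new files plus the state (latest timestamp) for the next call
  list(files = newFiles, state = max(c(since, mtimes)))
}
```

Calling `listNewYaml(dir, previous$state)` on each poll returns only the newly arrived files. A strict `>` comparison means a file written within the same timestamp tick as the last one could be missed, which is acceptable for a sketch.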

1.2.2 convertYaml2DataFrame Processor

The convertYaml2DataFrame Processor uses ExecuteStreamCommand, which calls Rscript. The R script invokes the yorkr function convertYaml2RDataframeT20(), as shown below.

I also use 16 concurrent tasks to convert 16 different FlowFiles at once.
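The effect of running 16 concurrent tasks can be approximated in plain R with `parallel::mclapply()`. In this sketch, `convertOne()` is a hypothetical stand-in that only counts lines; in the actual flow each file is converted by yorkr's convertYaml2RDataframeT20().

```r
library(parallel)

# Hypothetical stand-in for the per-file conversion. In the flow this is
# yorkr's convertYaml2RDataframeT20(); here it just counts the lines.
convertOne <- function(yamlFile) {
  length(readLines(yamlFile, warn = FALSE))
}

# Convert files concurrently, roughly analogous to a NiFi processor with
# 16 concurrent tasks. mclapply() forks, so mc.cores > 1 is Unix-only.
convertAll <- function(files, workers = 16) {
  workers <- if (.Platform$OS.type == "windows") 1L else workers
  mclapply(files, convertOne, mc.cores = workers)
}
```

Each match file is independent, so the conversions can run in any order; `mclapply()` still returns the results in input order.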

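```r
# Called by the ExecuteStreamCommand processor via Rscript
library(yorkr)
# Arguments passed on the command line by the processor
args <- commandArgs(TRUE)
# Convert one YAML match file into a dataframe
convertYaml2RDataframeT20(args[1], args[2], args[3])
```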
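Because the script depends on the processor passing exactly three arguments, a small check can make misconfiguration obvious. This sketch assumes the arguments are the YAML file, source folder and target folder, in the order of convertYaml2RDataframeT20(yamlFile, sourceDir, targetDir); `parseConvertArgs()` is a hypothetical helper.

```r
# Hypothetical helper: validate the arguments that ExecuteStreamCommand
# passes to Rscript before calling yorkr.
# ASSUMPTION: argument order is yamlFile, sourceDir, targetDir.
parseConvertArgs <- function(args) {
  if (length(args) != 3) {
    stop("expected 3 arguments (yamlFile sourceDir targetDir), got ",
         length(args))
  }
  list(yamlFile = args[1], sourceDir = args[2], targetDir = args[3])
}

# In the script:
#   a <- parseConvertArgs(commandArgs(TRUE))
#   convertYaml2RDataframeT20(a$yamlFile, a$sourceDir, a$targetDir)
```

With this check, a wrongly configured processor stops with an error instead of silently converting the wrong file.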

1.2.3 MergeContent Processor

This processor’s only job is to trigger the rankIPLPlayers processor once all the FlowFiles have been merged into one file.
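The role of MergeContent here is essentially a barrier: ranking should only start when every input has been converted. A minimal R sketch of that check follows, assuming one converted file per YAML input; `readyToRank()` and its `outputPattern` argument are hypothetical.

```r
# Hypothetical sketch of the "wait until everything is converted" gate:
# ranking may start only when every YAML input has a converted output.
# ASSUMPTION: the conversion writes one output file per YAML input.
readyToRank <- function(yamlDir, convertedDir, outputPattern) {
  nInputs <- length(list.files(yamlDir, pattern = "\\.yaml$"))
  nOutputs <- length(list.files(convertedDir, pattern = outputPattern))
  nInputs > 0 && nOutputs >= nInputs
}
```

For example, `readyToRank("yamlFiles", "converted", "\\.csv$")` returns `TRUE` only after the last conversion has finished.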

1.2.4 RankT20Players

This processor is an ExecuteStreamCommand Processor that executes an R script, which invokes the yorkr function rankIPLT20Batsmen().

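```r
# Called by the RankT20Players ExecuteStreamCommand processor via Rscript
library(yorkr)
# Arguments passed on the command line by the processor
args <- commandArgs(TRUE)
# Rank the IPL batsmen from the converted data
rankIPLBatsmen(args[1], args[2], args[3])
```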
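To show the shape of the ranking (the batsman, matches, meanRuns and meanSR columns in the output below), here is an illustrative base-R sketch. It is not yorkr's implementation; it assumes a hypothetical data frame with one row per batsman per match and columns `batsman`, `runs` and `strikeRate`, and `rankBatsmenSketch()` and `minMatches` are hypothetical names.

```r
# Illustrative ranking in base R, NOT yorkr's implementation.
# ASSUMPTION: df has one row per batsman per match, with columns
# batsman, runs and strikeRate.
rankBatsmenSketch <- function(df, minMatches = 1) {
  agg <- do.call(rbind, lapply(split(df, df$batsman), function(d) {
    data.frame(batsman = d$batsman[1],
               matches = nrow(d),
               meanRuns = mean(d$runs),
               meanSR = mean(d$strikeRate))
  }))
  # Drop batsmen with too few matches to rank meaningfully
  agg <- agg[agg$matches >= minMatches, ]
  # Highest average runs first
  agg <- agg[order(-agg$meanRuns), ]
  rownames(agg) <- NULL
  agg
}
```

The minimum-matches filter keeps batsmen with only one or two innings from topping the table on a lucky average.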

1.2.5 OutputRankofT20Player Processor

This processor writes the generated rank to an output file.
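ExecuteStreamCommand captures the command's standard output as FlowFile content, so the ranking printed by the R script is what ends up in the output file. Outside NiFi, the same result could be sketched in R by capturing the printed table; `writeRanks()` is a hypothetical helper.

```r
# Hypothetical sketch of the final step: capture the printed ranking (what
# the R script writes to standard output) and save it to IPLrank.txt.
writeRanks <- function(ranks, outFile = "IPLrank.txt") {
  lines <- capture.output(print(ranks))
  writeLines(lines, outFile)
  invisible(outFile)
}
```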

1.3 Final Ranking of IPL T20 players

The Node.js-based web server picks up this file and displays the final ranks on the web page (the code is based on a good YouTube video on reading from a file).

```
[1] "Chennai Super Kings"
[1] "Deccan Chargers"
[1] "Delhi Daredevils"
[1] "Kings XI Punjab"
[1] "Kochi Tuskers Kerala"
[1] "Kolkata Knight Riders"
[1] "Mumbai Indians"
[1] "Pune Warriors"
[1] "Rajasthan Royals"
[1] "Royal Challengers Bangalore"
[1] "Sunrisers Hyderabad"
[1] "Gujarat Lions"
[1] "Rising Pune Supergiants"
[1] "Chennai Super Kings-BattingDetails.RData"
[1] "Deccan Chargers-BattingDetails.RData"
[1] "Delhi Daredevils-BattingDetails.RData"
[1] "Kings XI Punjab-BattingDetails.RData"
[1] "Kochi Tuskers Kerala-BattingDetails.RData"
[1] "Kolkata Knight Riders-BattingDetails.RData"
[1] "Mumbai Indians-BattingDetails.RData"
[1] "Pune Warriors-BattingDetails.RData"
[1] "Rajasthan Royals-BattingDetails.RData"
[1] "Royal Challengers Bangalore-BattingDetails.RData"
[1] "Sunrisers Hyderabad-BattingDetails.RData"
[1] "Gujarat Lions-BattingDetails.RData"
[1] "Rising Pune Supergiants-BattingDetails.RData"
# A tibble: 429 x 4
   batsman      matches meanRuns meanSR
   <chr>          <int>    <dbl>  <dbl>
 1 DA Warner        130     37.9   128.
 2 LMP Simmons       29     37.2   106.
 3 CH Gayle         125     36.2   134.
 4 HM Amla           16     36.1   108.
 5 ML Hayden         30     35.9   129.
 6 SE Marsh          67     35.9   120.
 7 RR Pant           39     35.3   135.
 8 MEK Hussey        59     33.8   105.
 9 KL Rahul          59     33.5   128.
10 MN van Wyk         5     33.4   112.
# … with 419 more rows
```

Conclusion

This post demonstrated an end-to-end pipeline with Apache NiFi and the R package yorkr. You can extend this pipeline to generate different analytics using the various functions of yorkr and display them on a dashboard.

Hope you enjoyed this post!

See also

1. The mechanics of Convolutional Neural Networks in Tensorflow and Keras

2. Deep Learning from first principles in Python, R and Octave – Part 7

3. Fun simulation of a Chain in Android

4. Natural language processing: What would Shakespeare say?

5. TWS-4: Gossip protocol: Epidemics and rumors to the rescue

6. Cricketr learns new tricks : Performs fine-grained analysis of players

7. Introducing QCSimulator: A 5-qubit quantum computing simulator in R

8. Practical Machine Learning with R and Python – Part 5

9. Cricpy adds team analytics to its arsenal!!

To see posts click Index of posts

Then felt I like some watcher of the skies


1.1 Read NASA Web server logs

Read the logs files from NASA for the months Jul 95 and Aug 95