The initial work in the ‘Backpropagation Algorithm’ started in the 1980’s and led to an explosion of interest in Neural Networks and the application of backpropagation

The ‘Backpropagation’ algorithm computes the minimum of an error function with respect to the weights in the Neural Network. It uses the method of gradient descent. The combination of weights in a multi-layered neural network, which minimizes the error/cost function is considered to be a solution of the learning problem.

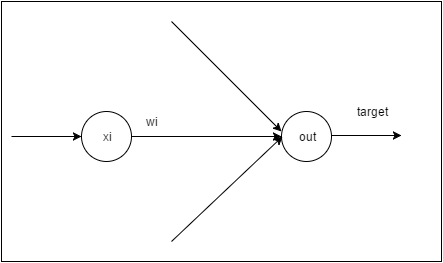

In the Neural Network above

Checkout my book ‘Deep Learning from first principles: Second Edition – In vectorized Python, R and Octave’. My book starts with the implementation of a simple 2-layer Neural Network and works its way to a generic L-Layer Deep Learning Network, with all the bells and whistles. The derivations have been discussed in detail. The code has been extensively commented and included in its entirety in the Appendix sections. My book is available on Amazon as paperback ($18.99) and in kindle version($9.99/Rs449).

Checkout my book ‘Deep Learning from first principles: Second Edition – In vectorized Python, R and Octave’. My book starts with the implementation of a simple 2-layer Neural Network and works its way to a generic L-Layer Deep Learning Network, with all the bells and whistles. The derivations have been discussed in detail. The code has been extensively commented and included in its entirety in the Appendix sections. My book is available on Amazon as paperback ($18.99) and in kindle version($9.99/Rs449).

Perceptrons and single layered neural networks can only classify, if the sample space is linearly separable. For non-linear decision boundaries, a multi layered neural network with backpropagation is required to generate more complex boundaries.The backpropagation algorithm, computes the minimum of the error function in weight space using the method of gradient descent. This computation of the gradient, requires the activation function to be both differentiable and continuous. Hence the sigmoid or logistic function is typically chosen as the activation function at every layer.

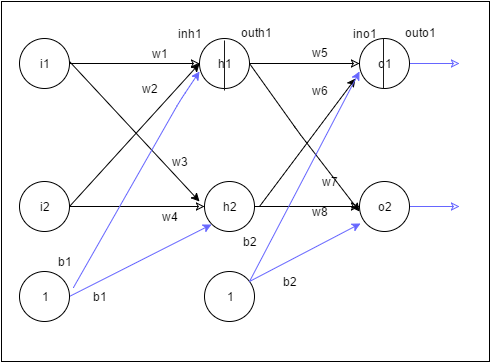

This post looks at a 3 layer neural network with 1 input, 1 hidden and 1 output. To a large extent this post is based on Matt Mazur’s detailed “A step by step backpropagation example“, and Prof Hinton’s “Neural Networks for Machine Learning” at Coursera and a few other sources.

While Matt Mazur’s post uses example values, I generate the formulas for the gradient derivatives for each weight in the hidden and input layers. I intend to implement a vector version of backpropagation in Octave, R and Python. So this post is a prequel to that.

The 3 layer neural network is as below

Some basic derivations which are used in backpropagation

Chain rule of differentiation

Let y=f(u)

and u=g(x) then

An important result

Let then

Using the chain rule of differentiation we get

Therefore -(A)

1) Feed forward network

The net output at the 1st hidden layer

The sigmoid/logistic function function is used to generate the activation outputs for each hidden layer. The sigmoid is chosen because it is continuous and also has a continuous derivative

The net output at the output layer

Total error

2)The backwards pass

In the backward pass we need to compute how the squared error changes with changing weight. i.e we compute for each weight

. This is shown below

A squared error is assumed

Error gradient with

Since

Now considering the 2nd term in (B)

Using result (A)

The 3rd term in (B)

Having computed , we now perform gradient descent, by computing a new weight, assuming a learning rate

If we do this for we would get

3)Hidden layer

We now compute how the total error changes for a change in weight

– (C)

Using

we get

-(D)

Considering the 1st term in (C)

Now

which gives the following

– (E)

– (F)

Combining (D), (E) & (F) we get

This can be represented as

With this derivative a new value of is computed

Hence there are 2 important results

At the output layer we have

a)

At each hidden layer we compute

b)

Backpropagation, was very successful in the early years, but the algorithm does have its problems for e.g the issue of the ‘vanishing’ and ‘exploding’ gradient. Yet it is a very key development in Neural Networks, and the issues with the backprop gradients have been addressed through techniques such as the momentum method and adaptive learning rate etc.

In this post. I derive the weights at the output layer and the hidden layer. As I already mentioned above, I intend to implement a vector version of the backpropagation algorithm in Octave, R and Python in the days to come.

Watch this space! I’ll be back

P.S. If you find any typos/errors, do let me know!

References

1. Neural Networks for Machine Learning by Prof Geoffrey Hinton

2. A Step by Step Backpropagation Example by Matt Mazur

3. The Backpropagation algorithm by R Rojas

4. Backpropagation Learning Artificial Neural Networks David S Touretzky

5. Artificial Intelligence, Prof Sudeshna Sarkar, NPTEL

Also see my other posts

1. Introducing QCSimulator: A 5-qubit quantum computing simulator in R

2. Design Principles of Scalable, Distributed Systems

3. A method for optimal bandwidth usage by auctioning available bandwidth using the OpenFlow protocol

4. De-blurring revisited with Wiener filter using OpenCV

5. GooglyPlus: yorkr analyzes IPL players, teams, matches with plots and tables

6. Re-introducing cricketr! : An R package to analyze performances of cricketers

To see all my posts go to ‘Index of Posts‘