This post takes off from my previous post Bend it like Bluemix, MongoDB using Auto-scale – Part 1! In this post I generate traffic using Multi-Mechanize, a performance test framework, and check out the auto-scaling on Bluemix, besides doing some rudimentary checks on the latency and throughput for this test application. In this particular post I generate concurrent threads which insert documents into MongoDB.

Note: As mentioned in my earlier post, this is more of a prototype than the typical situation when architecting cloud applications. Clearly, I have not optimized my cloud app (bluemixMongo) for maximum efficiency. Also, this is a simple 2-tier application with a rudimentary Web interface and a NoSQL DB. This is more of a Proof of Concept (PoC) for the auto-scaling service on Bluemix.

As mentioned earlier, the bluemixMongo app is a modification of my earlier post Spicing up an IBM Bluemix cloud app with MongoDB and NodeExpress. The bluemixMongo cloud app that was used for this auto-scaling test can be forked from Devops at bluemixMongo or from GitHub at bluemix-mongo-autoscale. The Multi-Mechanize config file, scripts and results can be found on GitHub in multi-mechanize.

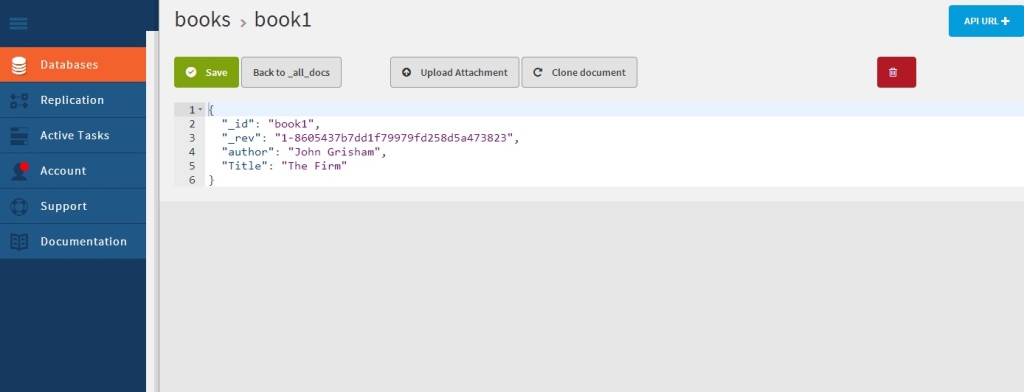

The document to be inserted into MongoDB consists of 3 fields: Firstname, Lastname and Mobile. To simulate the insertion of records into MongoDB, I created a Multi-Mechanize script that generates random combinations of letters and numbers for the first and last names and a random 9-digit number for the mobile. The code for this script is shown below.

1. The snippet below measures the latency for loading the ‘New User’ page.

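v_user.py

```python
import mechanize
import random  # used by the form-filling part of run()
import string  # used by the form-filling part of run()
import time


class Transaction(object):
    def __init__(self):
        # Multi-Mechanize collects the custom timers from this dict
        self.custom_timers = {}

    def run(self):
        # create a Browser instance
        br = mechanize.Browser()
        # don't bother with robots.txt
        br.set_handle_robots(False)
        print("Rendering new user")
        br.addheaders = [("User-agent", "Mozilla/5.0Compatible")]

        # start the timer
        start_timer = time.time()
        # submit the request
        resp = br.open("http://bluemixmongo.mybluemix.net/newuser")
        #resp = br.open("http://localhost:3000/newuser")
        resp.read()
        # stop the timer
        latency = time.time() - start_timer

        # store the custom timer
        self.custom_timers["Load Add User Page"] = latency

        # think-time
        time.sleep(2)
```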
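The start-timer / stop-timer / store pattern above is repeated for every measured step. As a hedged sketch (the `custom_timer` helper is hypothetical and not part of Multi-Mechanize), the same pattern can be wrapped in a standard-library context manager so each timed step needs only one `with` line:

```python
import time
from contextlib import contextmanager


@contextmanager
def custom_timer(timers, name):
    """Store the wall-clock seconds spent inside the with-block in timers[name]."""
    start_timer = time.time()
    try:
        yield
    finally:
        # Record the elapsed time even if the timed block raised an exception
        timers[name] = time.time() - start_timer


# Hypothetical usage inside Transaction.run():
# with custom_timer(self.custom_timers, "Load Add User Page"):
#     resp = br.open("http://bluemixmongo.mybluemix.net/newuser")
#     resp.read()
```

Using `finally` is a design choice: a failed request still leaves a timing entry, which the original inline version would not.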

The script also measures the time taken to submit the form containing the Firstname, Lastname and Mobile fields:

```python
        # select first (zero-based) form on page
        br.select_form(nr=0)

        # Create random Firstname
        a = (''.join(random.choice(string.ascii_uppercase) for i in range(5)))
        b = (''.join(random.choice(string.digits) for i in range(5)))
        firstname = a + b

        # Create random Lastname
        a = (''.join(random.choice(string.ascii_uppercase) for i in range(5)))
        b = (''.join(random.choice(string.digits) for i in range(5)))
        lastname = a + b

        # Create a random mobile number
        mobile = (''.join(random.choice(string.digits) for i in range(9)))

        # set form fields
        br.form["firstname"] = firstname
        br.form["lastname"] = lastname
        br.form["mobile"] = mobile

        # start the timer
        start_timer = time.time()
        # submit the form
        resp = br.submit()
        print("Submitted.")
        resp.read()
        # stop the timer
        latency = time.time() - start_timer

        # store the custom timer
        self.custom_timers["Add User"] = latency
```
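The Firstname and Lastname generators above repeat the same logic. A minimal sketch of how that could be factored out, assuming the same format (5 random uppercase letters followed by 5 random digits, and a 9-digit mobile); `random_name` and `random_mobile` are hypothetical helper names:

```python
import random
import string


def random_name(letters=5, digits=5):
    """Random uppercase letters followed by random digits, e.g. for Firstname."""
    a = ''.join(random.choice(string.ascii_uppercase) for _ in range(letters))
    b = ''.join(random.choice(string.digits) for _ in range(digits))
    return a + b


def random_mobile(length=9):
    """A random string of `length` digits (may start with 0)."""
    return ''.join(random.choice(string.digits) for _ in range(length))


firstname = random_name()
lastname = random_name()
mobile = random_mobile()
print(firstname, lastname, mobile)
```

Keeping the mobile as a string preserves any leading zeros, which an integer would drop.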

2. The config.cfg file is set up to run 2 concurrent user groups (thread pools) of 10 threads each for 400 seconds.

config.cfg

```ini
[global]
run_time = 400
rampup = 0
results_ts_interval = 10
progress_bar = on
console_logging = off
xml_report = off

[user_group-1]
threads = 10
script = v_user.py

[user_group-2]
threads = 10
script = v_user.py
```
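To see what load these settings describe, here is a small sketch using Python's standard `configparser` (this is not how Multi-Mechanize reads the file internally; it assumes the settings sit under the `[global]` section that Multi-Mechanize's config files use). Two user groups of 10 threads each add up to 20 concurrent virtual users for 400 seconds:

```python
import configparser

# Assumed config.cfg contents: the settings above under a [global] section
CONFIG = """
[global]
run_time = 400
rampup = 0
results_ts_interval = 10
progress_bar = on
console_logging = off
xml_report = off

[user_group-1]
threads = 10
script = v_user.py

[user_group-2]
threads = 10
script = v_user.py
"""

parser = configparser.ConfigParser()
parser.read_string(CONFIG)

run_time = parser.getint("global", "run_time")
groups = [s for s in parser.sections() if s.startswith("user_group")]
total_threads = sum(parser.getint(g, "threads") for g in groups)

print(f"{len(groups)} user groups, {total_threads} concurrent threads for {run_time} s")
```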

3. The code to add a new user in the app (adduser.js) uses the ‘async’ Node module to enforce sequential processing.

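adduser.js

```javascript
// db, res, FirstName, LastName and Mobile come from the enclosing
// route handler (not shown here)
var collection; // shared between the steps below

async.series([
    function(callback) {
        // Get the 'phonebook' collection; move on only once it is available
        db.collection('phonebook', function(error, coll) {
            if (error) {
                return callback(error); // Abort the series immediately
            }
            console.log("Connected to phonebook");
            collection = coll;
            callback(null, 'one');
        });
    },
    function(callback) {
        // Insert the record into the DB
        collection.insert({
            "FirstName" : FirstName,
            "LastName" : LastName,
            "Mobile" : Mobile
        }, function (err, doc) {
            if (err) {
                // If it failed, return error
                res.send("There was a problem adding the information to the database.");
                return callback(err);
            }
            // If it worked, redirect to userlist - Display users
            res.location("userlist");
            // And forward to success page
            res.redirect("userlist");
            callback(null, 'two');
        });
    },
    function(callback) {
        // Log how many documents the collection now holds
        collection.find().toArray(function(err, items) {
            if (!err) {
                console.log("**************************>>>>>>>Length =" + items.length);
            }
            callback(err, 'three');
        });
    }
], function(err) {
    // Runs after the last step, or as soon as any step reports an error
    if (err) {
        console.log("adduser failed: " + err);
    }
    db.close(); // Make sure that the open DB connection is closed
});
```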
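To make the "sequential processing" concrete, here is a minimal Python sketch of the control flow that `async.series` provides. It is not the async library itself, and `series`, `get_collection` and `insert_record` are hypothetical names. The point it shows is that the next step starts only when the current step calls its callback, which is why, in Node, the callback should be invoked from inside the MongoDB completion handler rather than right after the call that starts the operation.

```python
def series(tasks, final_callback):
    """Run callback-style tasks strictly one after another.

    Loosely modelled on async.series: each task receives a callback(err, result),
    the next task starts only when the current one calls that callback, and the
    first error skips the remaining tasks and goes straight to final_callback.
    """
    results = []

    def run_task(index):
        if index == len(tasks):
            final_callback(None, results)  # every task succeeded
            return

        def callback(err=None, result=None):
            if err is not None:
                final_callback(err, results)  # stop at the first error
                return
            results.append(result)
            run_task(index + 1)  # only now start the next task

        tasks[index](callback)

    run_task(0)


# Usage: two hypothetical steps standing in for "get collection" and "insert"
def get_collection(callback):
    callback(None, "one")


def insert_record(callback):
    callback(None, "two")


series([get_collection, insert_record], lambda err, res: print(err, res))
# prints: None ['one', 'two']
```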

4. To check out auto-scaling, the instance memory was kept at 128 MB. The scale-up policy was memory-based: scale up when the instance's memory usage exceeds 55% of 128 MB for 120 secs. A CPU-based scale-up was to happen when CPU utilization exceeded 80% for 300 secs.

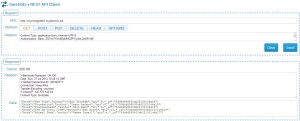
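The memory rule in step 4 is a "sustained breach" condition: usage must stay above 55% of 128 MB (0.55 × 128 = 70.4 MB) continuously for 120 seconds. The sketch below is a hypothetical illustration of such a rule, not the Bluemix Auto-Scaling service's actual evaluation logic (which may, for instance, average readings over a statistics window); the function name and the readings are made up.

```python
INSTANCE_MB = 128
MEMORY_THRESHOLD_MB = 0.55 * INSTANCE_MB  # 55% of 128 MB = 70.4 MB


def sustained_breach(samples, threshold, duration):
    """Return True once values stay above threshold for at least `duration` seconds.

    samples: list of (timestamp_seconds, value) pairs sorted by timestamp.
    """
    breach_start = None
    for t, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = t  # breach begins
            if t - breach_start >= duration:
                return True
        else:
            breach_start = None  # the breach must be continuous
    return False


# Hypothetical memory readings (seconds, MB), one every 30 s
readings = [(0, 60), (30, 72), (60, 75), (90, 74), (120, 73), (150, 76)]
print(sustained_breach(readings, MEMORY_THRESHOLD_MB, 120))  # True

# The CPU rule would be the same check: sustained_breach(cpu_samples, 80, 300)
```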

5. Check the auto-scaling policy

6. Initially, as seen, there is just a single instance.

7. At around 48% of the script run, with around 623 transactions, the number of instances increases by 1. Note that the available memory drops from 640 MB to 640 MB - 128 MB = 512 MB.
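The memory bookkeeping in step 7 is simple subtraction, assuming each added instance reserves the full 128 MB configured in step 4. A small hypothetical helper makes the arithmetic explicit and refuses a scale-up that would exceed the memory still available:

```python
INSTANCE_MB = 128  # memory allocated to each instance (step 4)


def available_after_scale_up(available_mb, added_instances, instance_mb=INSTANCE_MB):
    """Memory left after adding instances, each reserving instance_mb."""
    remaining = available_mb - added_instances * instance_mb
    if remaining < 0:
        raise ValueError("not enough memory available for that many instances")
    return remaining


print(available_after_scale_up(640, 1))  # 640 MB - 128 MB = 512
```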

8. At around 1324 transactions, another instance is added.

Note: Bear in mind that

a) The memory threshold was artificially brought down to 55% of 128 MB.

b) The app itself is not optimized for maximum efficiency.

9. The Metric Statistics tab for the Auto-scaling service shows this memory breach and the trigger for auto-scaling.

10. The Scaling history tab for the Auto-scaling service displays the scale-up and scale-down events and the policy rules that triggered the scaling.

11. The response times and throughput are captured in the results folder of the Multi-Mechanize project.

The Multi-Mechanize commands are executed as follows.

To create a new project:

```
multimech-newproject.exe adduser
```

This will create 2 folders, a) results and b) test_scripts, and c) the file config.cfg. The v_user.py script needs to be updated as required.

To run the script:

```
multimech-run.exe adduser
```

12. The results are shown below:

a) Load Add User Page (Latency)

b) Load Add User Page (Throughput)

c) Add User (Latency)

d) Add User (Throughput)

The detailed results can be found on GitHub at multi-mechanize.
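The latency and throughput graphs are per-interval summaries (the config sets `results_ts_interval = 10`). As a simplified illustration of the idea, and not Multi-Mechanize's actual reporting code, the sketch below groups hypothetical (elapsed time, latency) samples into 10-second buckets and computes throughput as completed transactions per second plus the average latency per bucket:

```python
from collections import defaultdict


def summarize(samples, interval=10):
    """Group (elapsed_seconds, latency_seconds) samples into fixed time buckets.

    Returns {bucket_start: (throughput_tps, avg_latency_s)}, where throughput is
    the number of completed transactions divided by the interval length.
    """
    buckets = defaultdict(list)
    for elapsed, latency in samples:
        bucket_start = int(elapsed // interval) * interval
        buckets[bucket_start].append(latency)
    return {
        start: (len(lats) / interval, sum(lats) / len(lats))
        for start, lats in sorted(buckets.items())
    }


# Made-up samples: two transactions in the first 10 s, one in the next
samples = [(1.0, 0.2), (4.5, 0.4), (12.0, 0.3)]
print(summarize(samples))
```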

13. Check the Monitoring and Analytics Page

a) Availability

b) Performance monitoring

So once auto-scaling happens, the application can be fine-tuned for performance. Obviously, one could do it the other way around too.

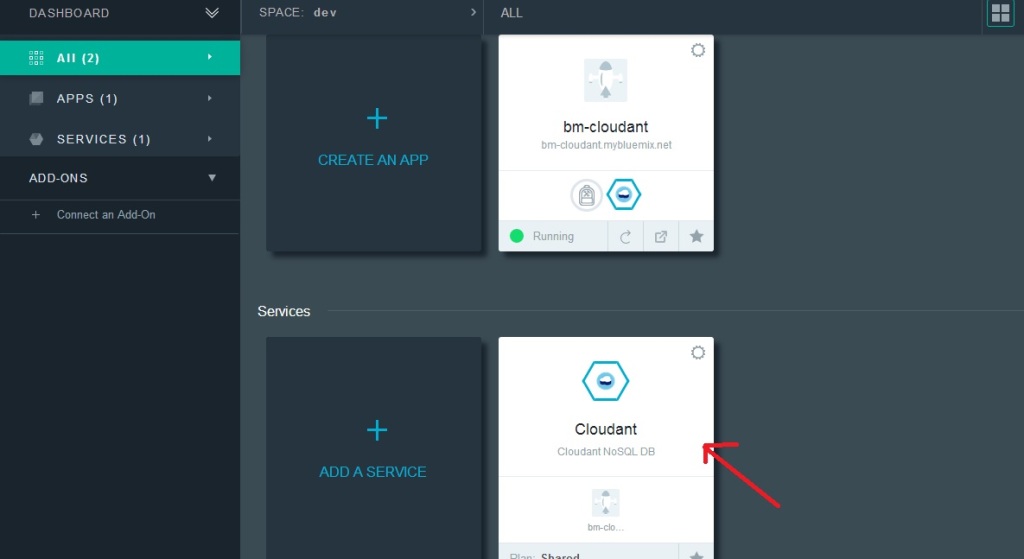

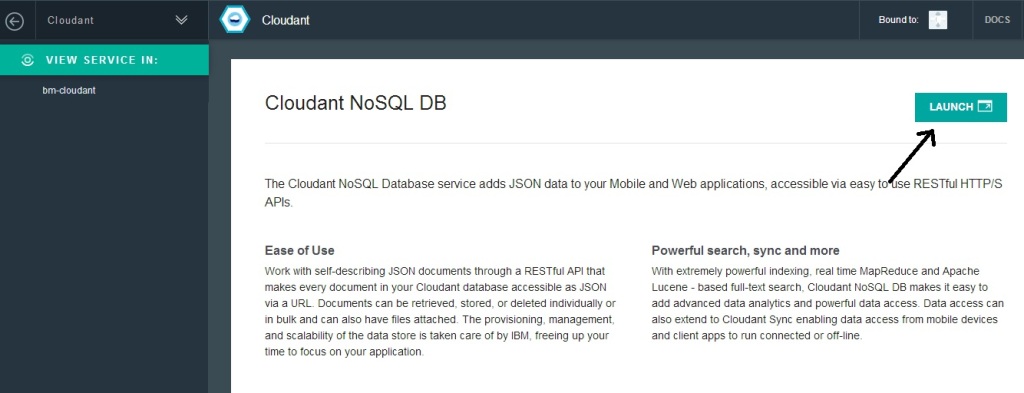

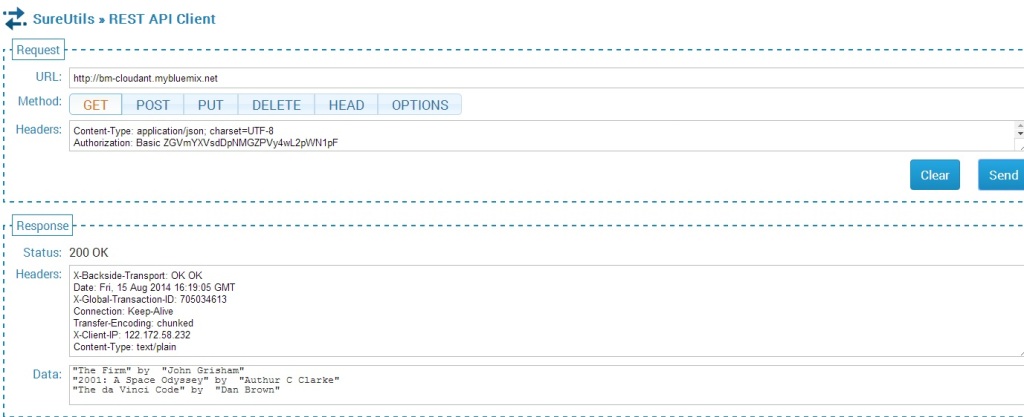

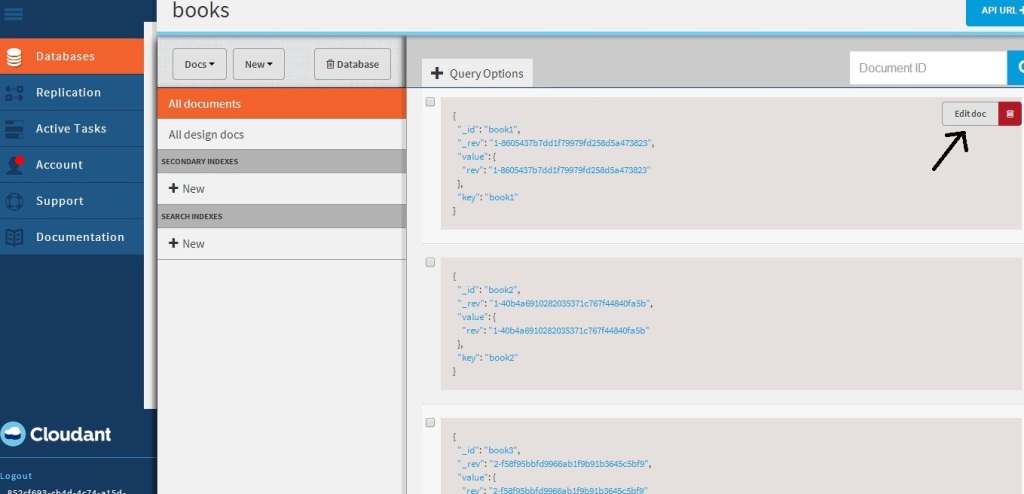

As can be seen, adding NoSQL databases like MongoDB, Redis and Cloudant DB to a Bluemix app is easy. Setting up the auto-scaling policy is also painless, as seen above.

Of course the real challenge in cloud applications is to make them distributed and scalable while keeping the applications themselves lean and mean!

See also


1. Bend it like Bluemix, MongoDB with autoscaling – Part 1

3. Bend it like Bluemix, MongoDB with autoscaling – Part 3

You may like:

a) Latency, throughput implications for the cloud

b) The many faces of latency

c) Brewing a potion with Bluemix, PostgreSQL & Node.js in the cloud

d) A Bluemix recipe with MongoDB and Node.js

e) Spicing up IBM Bluemix with MongoDB and NodeExpress

f) Rock N’ Roll with Bluemix, Cloudant & NodeExpress


g) Design principles of scalable, distributed systems

Disclaimer: This article represents the author’s viewpoint only and doesn’t necessarily represent IBM’s positions, strategies or opinions