Published as a tutorial in IBM Cloudant – Bluemix tutorial and demos

Here is an interesting Cloud medley based on IBM’s Bluemix PaaS, Cloudant DB and Node.js. This application creates a web server using Node.js and uses REST APIs to perform CRUD operations on a Cloudant DB. Cloudant DB is a NoSQL Database as a Service (DBaaS) that can handle a wide variety of data types such as JSON, full text and geospatial data. Documents are stored, indexed and distributed across an elastic datastore spanning racks and datacenters, and data is replicated across datacenters. Cloudant lets you work with self-describing JSON documents through RESTful APIs, making every document in a Cloudant database accessible as JSON via a URL.
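Because every Cloudant document is reachable over HTTP, its address follows the CouchDB-style pattern `<account URL>/<database>/<document ID>`. The small sketch below assembles such a URL; the helper name `docUrl` and the `example.cloudant.com` account are hypothetical placeholders, not part of the application.

```javascript
// Hypothetical helper: build the URL at which a Cloudant (CouchDB-style)
// document can be fetched as JSON, for example with an HTTP GET.
function docUrl(accountUrl, dbName, docId) {
  // Strip trailing slashes so the result never contains "//" in the path
  const base = accountUrl.replace(/\/+$/, '');
  // Database names and document IDs are URL-encoded path segments
  return base + '/' + encodeURIComponent(dbName) + '/' + encodeURIComponent(docId);
}

// Example with a placeholder account URL (an assumption, not a real account)
console.log(docUrl('https://example.cloudant.com/', 'books', 'book1'));
// prints https://example.cloudant.com/books/book1
```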

This application on Bluemix uses REST APIs to insert, update, delete and list documents in the Cloudant DB. The code can be forked from DevOps at bluemix-cloudant. It can also be cloned from GitHub at bluemix-cloudant.

1) Once the code is forked, the application can be deployed to Bluemix using:

```shell
cf login -a https://api.ng.bluemix.net
cf push bm-cloudant -p . -m 512M
```

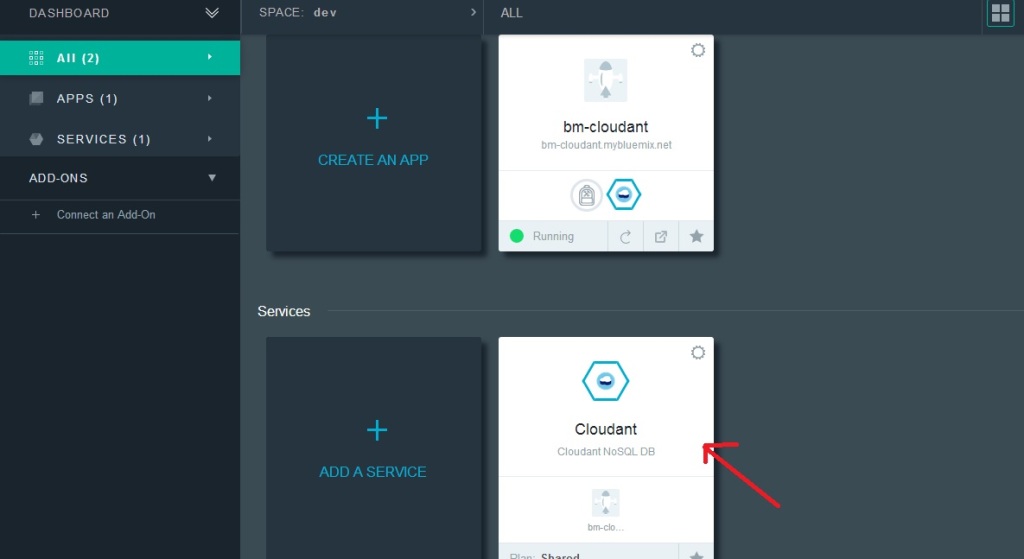

2) After this is successful, go to the Bluemix dashboard and add the Cloudant DB service. The CRUD operations can then be performed by invoking REST API calls from a REST client such as SureUtils or Postman in the browser of your choice.

Here are the details of the Bluemix-Cloudant application:

1) Once the Cloudant DB service has been added to the Node.js Web Starter application, we need to parse the process.env variable to obtain the URL of the Cloudant DB and the port and host to be used for the web server.

The Node.js web server is started on the port and host values obtained from process.env:

```javascript
var http = require('http');

// host and port must be declared outside the request handler,
// otherwise listen(port, host) below would not see them.
var host, port;

if (process.env.VCAP_SERVICES) {
  // Set up the DB connection.
  // Running on Bluemix. Parse for the port and host that we've been assigned.
  var env = JSON.parse(process.env.VCAP_SERVICES);
  host = process.env.VCAP_APP_HOST;
  port = process.env.VCAP_APP_PORT;
  // ....
}

// ....

http.createServer(function (req, res) {
  // Perform CRUD operations through REST APIs
  if (req.method === 'POST') {
    // Insert documents
    insert_records(req, res);
  } else if (req.method === 'GET') {
    // List documents
    list_records(req, res);
  } else if (req.method === 'PUT') {
    // Update a document
    update_records(req, res);
  } else if (req.method === 'DELETE') {
    // Delete a document
    delete_record(req, res);
  } else {
    // Any other method: reply instead of leaving the request hanging
    res.writeHead(405, {'Content-Type': 'text/plain'});
    res.end('Method not allowed');
  }
}).listen(port, host);
```
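The `if`/`else if` chain above maps each HTTP method to one CRUD handler. One way to make that mapping explicit is a lookup table, sketched below. The `handlers` map, `route` and the stub handlers are hypothetical; in the application the map would point at `insert_records`, `list_records`, `update_records` and `delete_record`. Unknown methods get a 405 reply with an `Allow` header so the client is never left waiting.

```javascript
// Stand-in handlers (hypothetical): each only reports which CRUD operation
// would run. In the application these would be insert_records, etc.
function makeStub(name) {
  return function (req, res) {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end(name);
  };
}

// HTTP method -> CRUD handler (a Map avoids inherited Object keys)
const handlers = new Map([
  ['POST', makeStub('insert')],
  ['GET', makeStub('list')],
  ['PUT', makeStub('update')],
  ['DELETE', makeStub('delete')],
]);

// Dispatch a request to the matching handler, or answer 405 Method Not Allowed
function route(req, res) {
  const handler = handlers.get(req.method);
  if (handler) {
    handler(req, res);
  } else {
    res.writeHead(405, {
      'Content-Type': 'text/plain',
      Allow: Array.from(handlers.keys()).join(', '),
    });
    res.end('Method not allowed');
  }
}

// Usage (not started here): require('http').createServer(route).listen(port, host);
```

Adding a new operation then means adding one entry to the map rather than another branch.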

2) Access to the Cloudant DB is obtained as follows:

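```javascript
var pouchdb = require('pouchdb');

if (process.env.VCAP_SERVICES) {
  // Running on Bluemix. Parse the port and host that we've been assigned.
  var env = JSON.parse(process.env.VCAP_SERVICES);
  var host = process.env.VCAP_APP_HOST;
  var port = process.env.VCAP_APP_PORT;
  console.log('VCAP_SERVICES: %s', process.env.VCAP_SERVICES);

  // Also parse the Cloudant settings of the bound cloudantNoSQLDB service.
  var cloudant = env['cloudantNoSQLDB'][0]['credentials'];
}

// Local PouchDB database and the URL of its remote Cloudant counterpart.
// Note: cloudant is only defined when running on Bluemix with the service bound.
// The commas make remote and opts part of the same var statement (no globals).
var db = new pouchdb('books'),
    remote = cloudant.url + '/books',
    opts = {
      continuous: true // newer PouchDB releases name this option "live"
    };

// Replicate the DB to and from the remote Cloudant DB
console.log(remote);
db.replicate.to(remote, opts);
db.replicate.from(remote, opts);
```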

Access to the Cloudant DB is through the cloudant.url obtained above.
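The same `VCAP_SERVICES` parsing appears both in the server setup and inside `insert_records`, so it could be factored into one function that reads from an environment object. The sketch below assumes the `cloudantNoSQLDB` service key and `credentials.url` field used above; the function name `readBluemixConfig` and the local fallbacks (`localhost`, port `3000`, no Cloudant URL) are assumptions for running outside Bluemix, not values Bluemix supplies.

```javascript
// Hypothetical helper: extract the web server host/port and the Cloudant URL
// from a Cloud Foundry style environment (process.env on Bluemix).
function readBluemixConfig(env) {
  const config = {
    host: env.VCAP_APP_HOST || 'localhost',   // fallback is an assumption
    port: Number(env.VCAP_APP_PORT) || 3000,  // fallback is an assumption
    cloudantUrl: null,                        // stays null if no service is bound
  };
  if (env.VCAP_SERVICES) {
    const services = JSON.parse(env.VCAP_SERVICES);
    const cloudant = services['cloudantNoSQLDB'];
    // Use the first bound Cloudant instance, as the code above does
    if (Array.isArray(cloudant) && cloudant.length > 0) {
      config.cloudantUrl = cloudant[0].credentials.url;
    }
  }
  return config;
}

// Usage on Bluemix: const cfg = readBluemixConfig(process.env);
```

Passing the environment in as an argument, rather than reading `process.env` directly, keeps the function easy to exercise with a fake environment.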

3) Once access to the DB is set up, we can perform CRUD operations. There are many options for the backend DB; in this application I have used PouchDB.

4) Inserting a document: to insert documents into the Cloudant DB using PouchDB, we do the following:

```javascript
var insert_records = function (req, res) {
  // Parse the process.env for the port, host and Cloudant settings we've been assigned
  if (process.env.VCAP_SERVICES) {
    // Running on Bluemix. Parse the port and host that we've been assigned.
    var env = JSON.parse(process.env.VCAP_SERVICES);
    var host = process.env.VCAP_APP_HOST;
    var port = process.env.VCAP_APP_PORT;
    console.log('VCAP_SERVICES: %s', process.env.VCAP_SERVICES);

    // Also parse Cloudant settings.
    var cloudant = env['cloudantNoSQLDB'][0]['credentials'];
  }

  var db = new pouchdb('books'),
      remote = cloudant.url + '/books',
      opts = {
        continuous: true
      };

  // Replicate the DB to remote
  console.log(remote);
  db.replicate.to(remote, opts);
  db.replicate.from(remote, opts);

  // Put 3 documents into the DB (the document ID is given as _id)
  db.put({
    _id: 'book1',
    author: 'John Grisham',
    Title: 'The Firm'
  }, function (err, response) {
    console.log(err || response);
  });

  // ...
  // ... (book2 and book3 are inserted the same way)

  // Note: this reply is sent without waiting for the put callbacks to finish
  res.writeHead(200, {'Content-Type': 'text/plain'});
  res.write("3 documents are inserted");
  res.end();
}; // End insert_records
```

The nice part about Cloudant DB is that you can access your database through a URL. The steps are shown below. Once your application is running, click on it in the Bluemix dashboard. You should see a screen like the one below.

Click on Cloudant as shown by the arrow.

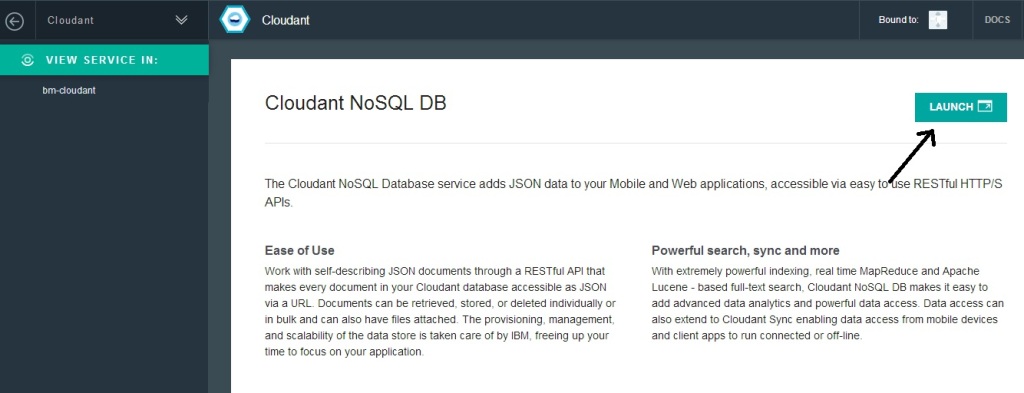

Next, click the “Launch” icon.

This should bring up the Cloudant dashboard. The database will be empty.

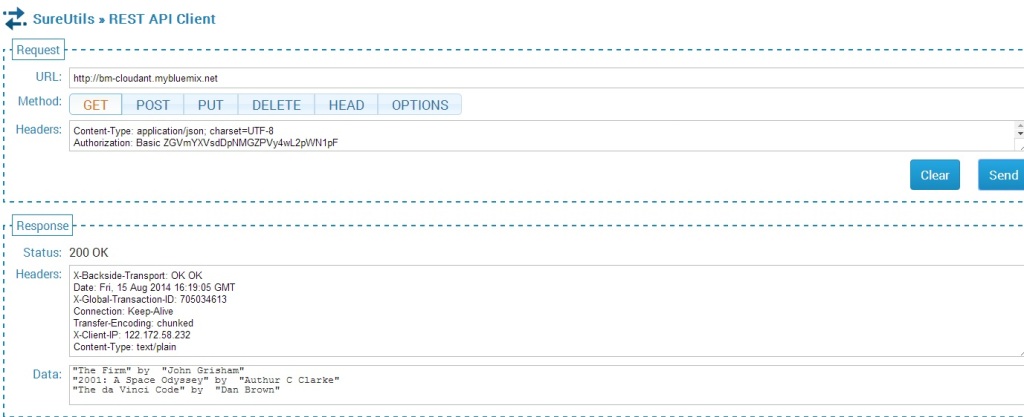

If you use a REST client to send a POST request, the application will insert 3 documents.

The inserted documents can be seen by sending a GET request.

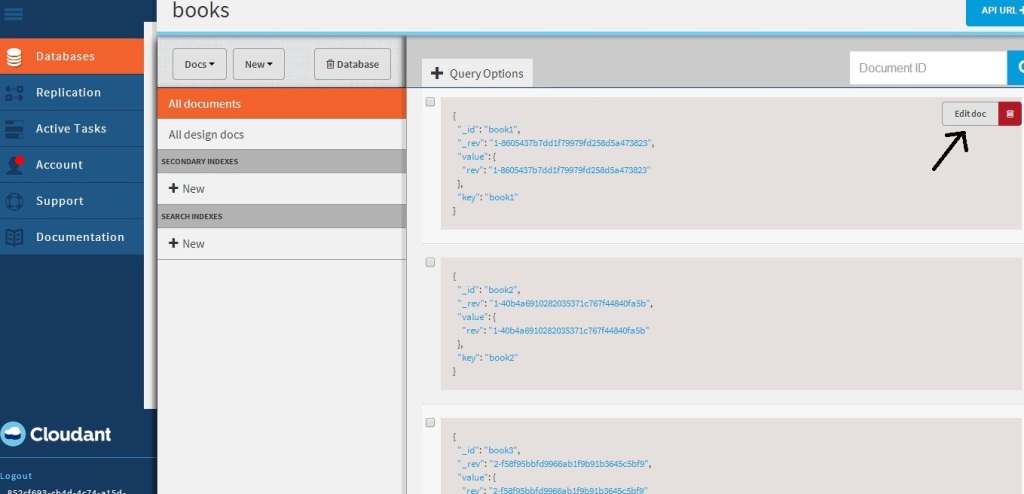

If you refresh the Cloudant dashboard, you should see the “books” database. Clicking on this database shows the 3 documents that have been added.

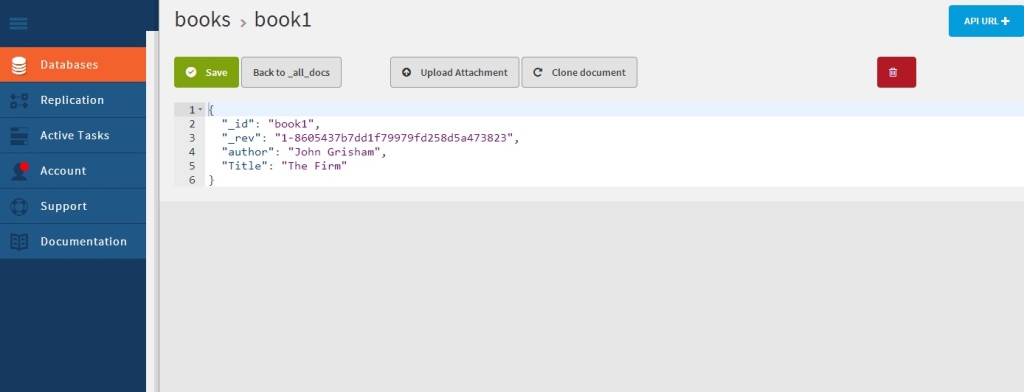

If you click “Edit doc”, you should see the details of the document.

5) Updating a document

The code to update a document in the database is shown below:

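```javascript
// Update book3: fetch it first to get its current _rev, then write the new version.
// Called without a callback, db.get and db.put return promises.
db.get('book3').then(function (doc) {
  console.log(doc);
  return db.put({
    _id: 'book3',
    _rev: doc._rev,          // the current revision is required to update
    author: doc.author,
    Title: 'The da Vinci Code'
  });
}).then(function (response) {
  console.log("Success " + JSON.stringify(response));
}).catch(function (err) {
  console.log("error " + err);
});
```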

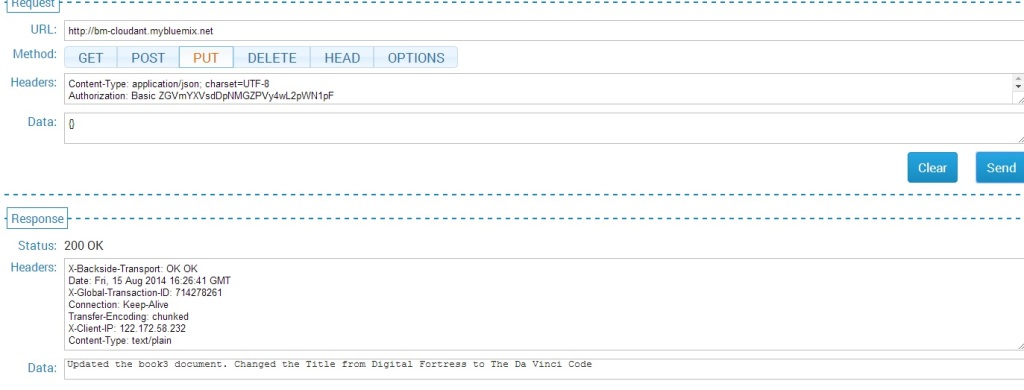

The update is triggered with a PUT REST API call:

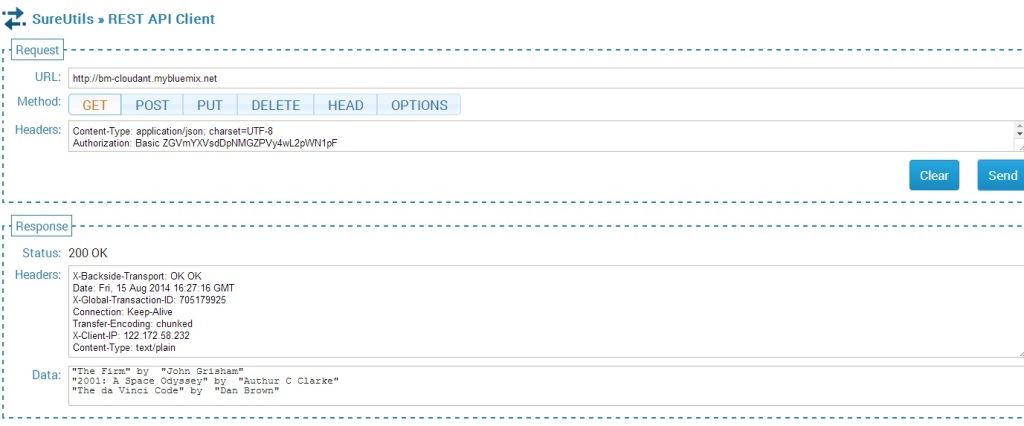

The updated list is shown below

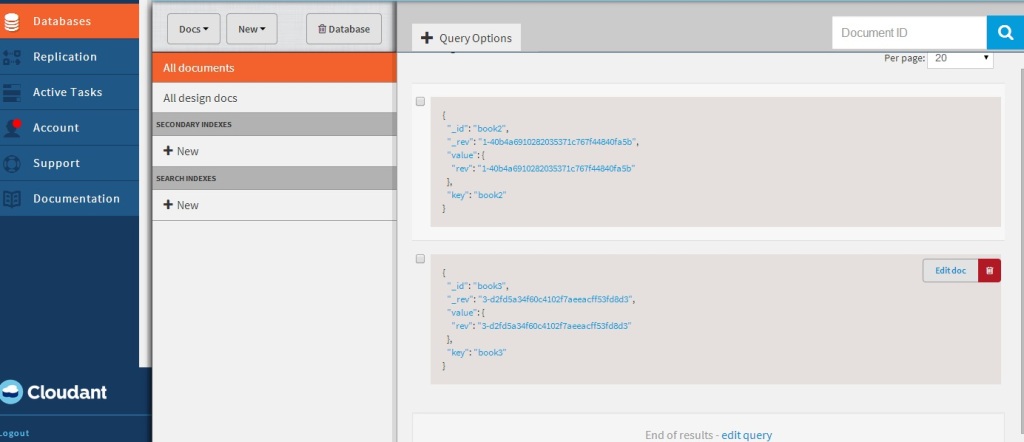

This can be further verified in the Cloudant DB dashboard for book3.
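The update code above fetches `book3` before writing because CouchDB-style databases such as Cloudant and PouchDB accept a write to an existing document only if it carries the document's current `_rev`; a stale revision is rejected as a conflict. The toy in-memory store below (a hypothetical `RevStore` class, not PouchDB's implementation) sketches that rule. Its revision strings have the `<generation>-<hash>` shape, but the hash is simply an MD5 of the document body, a simplification of how Cloudant computes revisions.

```javascript
const crypto = require('crypto');

// Toy in-memory document store (hypothetical) illustrating the revision rule:
// a write is accepted only if it carries the document's current _rev.
class RevStore {
  constructor() {
    this.docs = new Map(); // _id -> stored document, including its _rev
  }

  put(doc) {
    const current = this.docs.get(doc._id);
    const expectedRev = current ? current._rev : undefined;
    if (doc._rev !== expectedRev) {
      // Stale or unexpected revision: reject, like a 409 Conflict from Cloudant
      const err = new Error('Document update conflict');
      err.status = 409;
      throw err;
    }
    // Simplified revision string "<generation>-<md5 of the body>"
    const generation = current ? parseInt(current._rev, 10) + 1 : 1;
    const { _rev, ...body } = doc;
    const hash = crypto.createHash('md5').update(JSON.stringify(body)).digest('hex');
    const stored = Object.assign({}, body, { _rev: generation + '-' + hash });
    this.docs.set(doc._id, stored);
    return { ok: true, id: doc._id, rev: stored._rev };
  }

  get(id) {
    const doc = this.docs.get(id);
    if (!doc) {
      const err = new Error('missing');
      err.status = 404;
      throw err;
    }
    return Object.assign({}, doc); // a copy, so callers cannot mutate the store
  }
}

// Usage: read, modify and write back, the same pattern as the book3 update
const store = new RevStore();
store.put({ _id: 'example', Title: 'Draft' });
const latest = store.get('example');
store.put(Object.assign({}, latest, { Title: 'Final' })); // accepted: carries the current _rev
```

Writing back a document read before someone else's update would fail here, which is exactly why the update reads the latest revision first.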

6) Deleting a document

The code to delete a document in PouchDB is shown below

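```javascript
// Deleting document book1: fetch it first, since remove() needs its current _rev
db.get('book1', function (err, doc) {
  if (err) {
    // e.g. the document does not exist (any more)
    return console.log(err);
  }
  db.remove(doc, function (err, response) {
    console.log(err || response);
  });
});
```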

The REST calls to delete a document and the result are shown below

Checking the Cloudant dashboard, we see that only book2 and book3 are present and book1 has been deleted.
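Cloudant's own HTTP API applies the same revision rule to deletes: a document is removed with `DELETE <database URL>/<document ID>?rev=<current rev>`, and a stale revision is rejected with 409 Conflict. The sketch below only builds such a request description without sending it; the helper name `buildDeleteRequest` and the example URL and revision are hypothetical.

```javascript
// Hypothetical helper: describe the HTTP request that deletes one document
// through Cloudant's CouchDB-style API. Nothing is sent here.
function buildDeleteRequest(dbUrl, docId, rev) {
  // Document URL: <database URL>/<URL-encoded document ID>
  const url = new URL(dbUrl.replace(/\/+$/, '') + '/' + encodeURIComponent(docId));
  // The current revision is mandatory for a delete
  url.searchParams.set('rev', rev);
  return { method: 'DELETE', url: url.toString() };
}

// Possible usage (needs network access and a real database URL and revision):
// const https = require('https');
// const r = buildDeleteRequest(dbUrl, 'book1', currentRev);
// https.request(r.url, { method: r.method }, (res) => console.log(res.statusCode)).end();
```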

7) Displaying documents in the database

The code for displaying the list of documents is shown below

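```javascript
db.allDocs(function (err, response) {
  if (err) {
    res.writeHead(500, {'Content-Type': 'text/plain'});
    res.end(String(err));
    return;
  }
  var val = response.rows.length;
  var details = "";
  var j = 0;

  // Nothing to fetch: reply now, otherwise the counter below never reaches val
  if (val === 0) {
    res.writeHead(200, {'Content-Type': 'text/plain'});
    res.end(details);
    return;
  }

  for (var i = 0; i < val; i++) {
    db.get(response.rows[i].id, function (err, doc) {
      j++;
      if (!err) {
        details = details + JSON.stringify(doc.Title) + " by " + JSON.stringify(doc.author) + "\n";
      }
      // Kludge because of Node.js asynchronous handling. To be fixed - T V Ganesh
      // Only the callback that finishes last writes the response.
      if (j == val) {
        res.writeHead(200, {'Content-Type': 'text/plain'});
        res.write(details);
        res.end();
        console.log(details);
      }
    }); // End db.get
  } // End for
}); // End db.allDocs
```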

If you happened to notice, I had to use a kludge to work around Node.js’ idiosyncrasy in handling asynchronous calls. I was fooled by the remarkable similarity of Node.js, and hence JavaScript, to C into thinking that functions within functions would execute sequentially. However, I had to undergo much grief trying to get Node.js to work sequentially. I wanted to avoid the ‘async’ module but was unsuccessful in coding the callbacks myself. Hence the kludge! I will work this out eventually, but this workaround will have to do for now!
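The counter works, but a common alternative is to turn each lookup into a promise and wait for all of them with `Promise.all`. This keeps the results in the original order (the counter version appends them in completion order), and an empty database still produces a reply. The sketch below assumes a `getDoc(id)` function returning a promise for a document, a hypothetical stand-in for PouchDB's `db.get`, which returns a promise when called without a callback. PouchDB's `allDocs` also accepts an `include_docs: true` option that returns the documents themselves and avoids the per-document lookups altogether.

```javascript
// Build the "<Title> by <author>" listing for the given document IDs.
// getDoc is a hypothetical stand-in for db.get: (id) => Promise<document>.
function listDetails(ids, getDoc) {
  // Start every lookup at once, then wait until all of them have finished.
  // The arrow passes only the id (Array.map would also pass index and array).
  return Promise.all(ids.map((id) => getDoc(id))).then(function (docs) {
    return docs
      .map((doc) => JSON.stringify(doc.Title) + ' by ' + JSON.stringify(doc.author) + '\n')
      .join('');
  });
}

// Usage with a fake asynchronous store (hypothetical data)
const fakeDocs = {
  a: { Title: 'First', author: 'Author A' },
  b: { Title: 'Second', author: 'Author B' },
};
function fakeGet(id) {
  return new Promise(function (resolve) {
    // 'a' resolves later than 'b', yet it still comes first in the listing
    setTimeout(() => resolve(fakeDocs[id]), id === 'a' ? 20 : 5);
  });
}

listDetails(['a', 'b'], fakeGet).then(function (details) {
  console.log(details);
});
```

In the request handler, the `res.writeHead`/`res.end` calls would move into the final `.then`, with a `.catch` replying with an error status.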

As always, you can use “Files and Logs” in the Bluemix dashboard to see any output written to stdout.

Note: As always, I can’t tell you how useful the command `cf logs <application name> --recent` is for debugging.

Hope you enjoyed this Cloud Medley of Bluemix, Cloudant and Node.js!

Disclaimer: This article represents the author’s viewpoint only and doesn’t necessarily represent IBM’s positions, strategies or opinions

