“Ten little Indian boys went out to dine,

One choked his little self and then there were nine

Nine little Indian boys sat up very late;

One overslept himself and then there were eight…”

From the poem “Ten Little Indians”

You don’t need to be particularly observant to notice that the telecom landscape over the last decade and a half is full of dead organizations, bloodshed and gore. Organizations have been slain by ruthless times and bigger ones have devoured the weaker, fallen ones. Telecom titans have vanished, giants have been reduced to dwarfs.

Some telecom companies have merged in a deadly embrace, trying to beat the market forces, only to capitulate to their inexorable death march.

The years from the early 1980s to the late 1990s were the glory years of telecommunication. Digital switches (1972-1982), ISDN (1988), international calling, trunk protocols, mobile (~1991), 2G, 2.5G, and 3G followed in quick succession, one after another.

Advancement came after advancement. The future had never looked so bright for telecom companies.

The late 1990s were heady years, not just for telecom companies, but for all technology companies. Stock prices soared. Many stocks were over-valued. This was mainly due to what was described as the ‘irrational exuberance’ of the stock market.

Lucent, Alcatel, Ericsson, Nortel Networks, Nokia, Siemens and Telcordia all ruled supreme.

1997-2000. Then the inevitable happened. The infamous dot-com bust of 2000 reduced many technology stocks to penny stocks. Telecom stocks went into a major tailspin and prices plummeted. This situation, many felt, was further exacerbated by the fact that nothing important or earth-shattering was forthcoming from telecom. In other words, there was no ‘killer app’ from the telecommunication domain.

From 2000 onwards 3G, HSDPA, LTE etc. have all come and gone, but the markets were largely unimpressed. This was also the period of the downward slide for telecom. The last decade and a half has been extraordinarily violent. Technology units of dying organizations have been cannibalized by the more successful ones.

Stellar organizations collapsed, others transformed into ‘white dwarfs’, still others shattered with the ferocity of a supernova.

Here is a short recap of the major events.

- 2006 – After a couple of unsuccessful attempts Alcatel and Lucent finally decide to merge

- 2006 – Nokia marries Siemens in a 20 billion Euro deal

- 2009-10 – Ericsson purchases Nortel’s CDMA and LTE business for $1.13 billion

- 2009-10 – Nortel implodes

- 2010 – Motorola sells networking unit to Nokia for $1.2 billion

- 2011 – Internet giant Google mops up Motorola’s handset division for $12.5 billion, largely for the patents

- 2012 – Ericsson closes a deal with Telcordia for $1.15 billion

- 2013 – Nokia sells its handset division to Microsoft after facing a serious beating from smartphones

- 2015 – Nokia agrees to a $16.6 billion takeover of Alcatel Lucent

And so the story continues like the rhyme in Agatha Christie’s mystery novel

“And then there were none”

“Ten little Indian boys went out to dine,

One choked his little self and then there were nine…”

The Telecom companies continue their search for the elusive ‘killer app’ as progress comes in small increments – 3G, 3.5G, 3.75G, 4G, and 5G etc.

Personally, I think the future of telecom companies lies in their ability to embrace the latest technologies of Cloud Computing, Big Data, Software Defined Networks and Software Defined Datacenters, and to re-invent themselves. Rather than looking for some elusive ‘killer app’, they have to re-enter the technology scene with a Big Bang.

As I mentioned in one of my earlier posts, “Architecting a cloud Based IP Multimedia System”, the proverbial pot of gold at the end of the rainbow may lie in

- Virtualizing the switches of the IP Multimedia Subsystem (IMS), namely the CSCFs (P-CSCF, S-CSCF, I-CSCF etc.)

- Using features of the cloud like Software Defined Storage (SDS), load balancers and auto-scaling to elastically scale up or scale down the CSCF instances to handle varying ‘call traffic’

- Having equipment manufacturers (Nokia, Ericsson, and Huawei) adopt innovative pricing models with carriers like AT&T, MCI, Airtel or Vodafone. Instead of a one-time cost for hardware and software, the equipment manufacturers will need to charge based on usage or call traffic (utility charging). This will be a win-win for both the equipment manufacturer and the carrier

- Using SDN to provide the necessary virtualized pipes between users with the necessary policies for advanced services like video-chat, white-boarding, real-time gaming etc.

- Using Big Data and Hadoop to analyze Call Detail Records (CDRs) and provide advanced services to customers, like differential rates for calls etc.
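To make the auto-scaling idea in the list above a little more concrete, here is a minimal sketch of the kind of decision an auto-scaler could make for a pool of CSCF instances. The function name, the per-instance capacity of 500 calls per second, the 70% target utilization and the instance limits are all hypothetical values chosen for illustration; they do not come from any real IMS product or cloud API.

```python
import math


def desired_cscf_instances(
    calls_per_second: float,
    capacity_per_instance: float = 500.0,  # hypothetical calls/sec one instance can handle
    target_utilization: float = 0.7,       # hypothetical headroom: run instances at ~70% load
    min_instances: int = 2,                # keep at least two instances for redundancy
    max_instances: int = 50,               # hypothetical cap to bound cost
) -> int:
    """Return how many CSCF instances to run for the offered call load."""
    if calls_per_second < 0:
        raise ValueError("calls_per_second must be non-negative")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")

    # Load each instance should carry so that it stays below the target utilization.
    usable_capacity = capacity_per_instance * target_utilization
    needed = math.ceil(calls_per_second / usable_capacity)

    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    # Simulated call traffic over a day: quiet night, busy hour, evening tail.
    for load in (120.0, 3400.0, 900.0):
        print(f"{load:>8.0f} calls/sec -> {desired_cscf_instances(load)} instances")
```

In a real deployment the load figure would come from the load balancer's metrics and the result would be handed to the cloud provider's scaling mechanism; both of those are outside the scope of this sketch.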

Clearly there will be challenges in this virtualized view of things. Telecom equipment is renowned for its ‘five 9s’ (99.999%) availability. The challenge will be achieving this resiliency, high availability and fault-tolerance with cloud servers. How can WAN latencies be mitigated? How can SDN provide the QoS required for voice, video and data traffic in IMS?
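To put the ‘five 9s’ figure in perspective, the short sketch below converts an availability figure into downtime per year, and estimates how many redundant cloud instances would be needed to reach a target availability. It assumes that instances fail independently and that failover is instantaneous, which is optimistic for real cloud deployments, so treat the numbers as a rough lower bound. The function names are my own and purely illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # ignoring leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected downtime in minutes per year for a given availability (0..1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(single: float, replicas: int) -> float:
    """Availability of N redundant replicas, ASSUMING independent failures.

    The service is down only when every replica is down at the same time.
    """
    return 1.0 - (1.0 - single) ** replicas


def replicas_needed(single: float, target: float) -> int:
    """Smallest number of replicas whose combined availability meets the target."""
    if not (0.0 < single < 1.0 and 0.0 < target < 1.0):
        raise ValueError("availabilities must be strictly between 0 and 1")
    n = 1
    while parallel_availability(single, n) < target:
        n += 1
    return n


if __name__ == "__main__":
    print(f"five 9s: {downtime_minutes_per_year(0.99999):.2f} minutes of downtime per year")
    print(f"two 9s : {downtime_minutes_per_year(0.99) / 60 / 24:.2f} days of downtime per year")
    print(f"replicas of a 99% server needed for five 9s: {replicas_needed(0.99, 0.99999)}")
```

Five 9s works out to a little over five minutes of downtime a year. On paper a handful of ordinary servers in parallel gets there, but correlated failures (a shared data center, network or software bug) are exactly what makes the real problem hard.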

IMS has many interesting services where video calls from laptops can be transferred as data calls to mobile phones and vice versa, from mobile networks to WiFi and so on.

Many hurdles will have to be crossed. But this, in my opinion, will be the path forward.

While the last decade and a half has been bad for the telecom industry, I personally feel we are on the verge of the next big breakthrough in telecom. Telecom will rise like the phoenix from its ashes in the next couple of years.

Also see

1. A crime map of India in R: Crimes against women

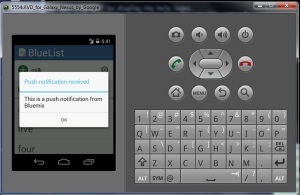

2. What’s up Watson? Using IBM Watson’s QAAPI with Bluemix, NodeExpress – Part 1

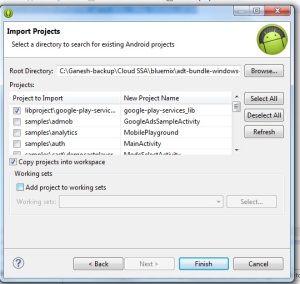

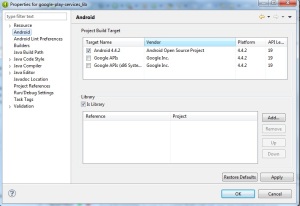

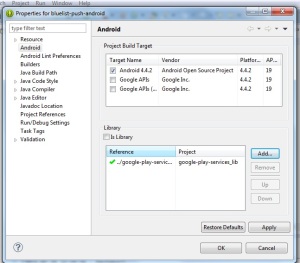

3. Bend it like Bluemix, MongoDB with autoscaling – Part 2

4. Informed choices through Machine Learning : Analyzing Kohli, Tendulkar and Dravid

5. Thinking Web Scale (TWS-3): Map-Reduce – Bring compute to data

6. Deblurring with OpenCV:Weiner filter reloaded